You cannot open a news reader, a tech blog, a finance newsletter, or some Medium post written by a man who calls himself a “founder” without stumbling into yet another take on this thing. Everyone suddenly has a hot angle and is slicing this story into “dimensions” like they just discovered a new anime power system.

And somehow, magically, they all come at it from Oracle’s point of view.

Oracle’s debt. Oracle’s courage. Oracle’s risk appetite. Oracle’s bold infrastructure play. Oracle’s poor stock price having a little emotional moment. Oracle as the tragic hero carrying AI on its back while Wall Street misunderstands the genius.

Cool story. But wrong angle.

Because none of these write-ups talk about our point of view. The people who actually live under this thing, whose grid gets bent out of shape and whose towns suddenly become “strategic assets”. Yes, the same people who were told for a decade that AI lives in the cloud, and now discover that the cloud apparently needs more power than Detroit.

So I thought, fine. Let’s do that writeup.

Not the Oracle investor deck version. And certainly not the OpenAI press release version. No, let’s do the version from ground zero, where AI is actually loud, hot, expensive, and very real.

The moment the cloud hit the ground

AI is software in the purest sense. It is something abstract which lives in code, not in places. You write it, you train it somewhere out of sight, and you use it somewhere else. The hardware, the power, the location, the cost. None of that was supposed to be your concern. AI was sold as a service you consume, not an infrastructure you have to think about.

Then OpenAI signed a deal to pay Oracle more than three hundred billion dollars for computing power for this inferencing play, and suddenly the cloud needed land, permits, power lines, gas turbines, cooling water, debt financing, and a public relations team that specializes in smiling all the way through a stack of lawsuits.

From that moment on, AI had to be treated as infrastructure rather than as a piece of software, with physical limits that could no longer be ignored.

Infrastructure lives in the real world, where enthusiasm does not shorten delivery times and opinion pieces do not speed up permits. It unfolds on timelines shaped by concrete, steel, and components that arrive when they arrive, not when a roadmap says they should. These systems exist among people, and towns that were already busy long before AI showed up demanding priority access.

That is the context almost every Oracle-focused article ignores.

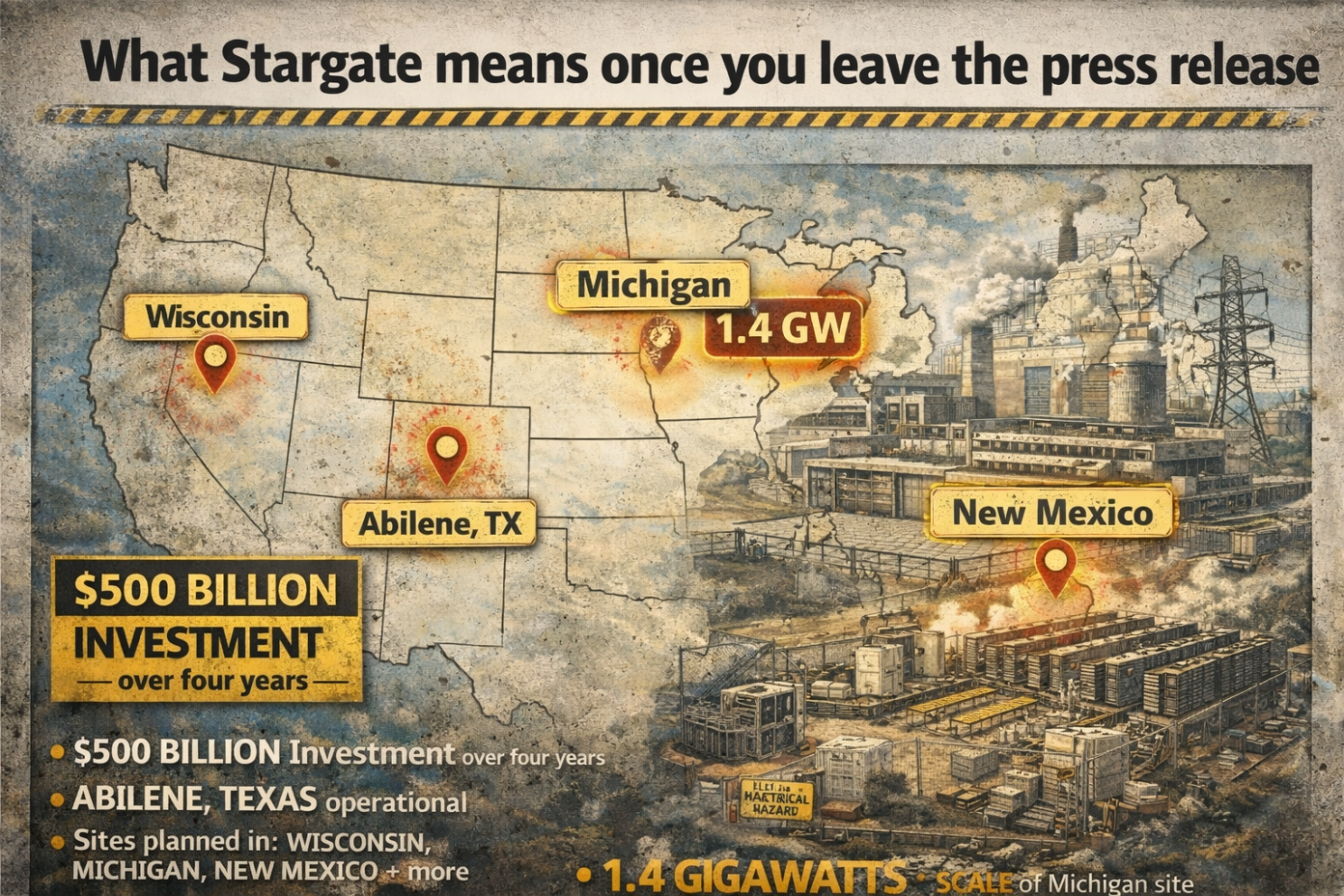

What Stargate means once you leave the press release

Stargate sounds like science fiction, and that is almost certainly intentional. It is a joint venture that was announced in January 2025, shortly after Trump returned to office, and it had a stated investment of five hundred billion dollars spread across four years. The ambition is simple enough to explain. Build enough data centers so that OpenAI can keep scaling its models without running into compute ceilings.

The physical implications require reshaping large parts of the American energy system while it is still in use. The first Stargate facility is already operational in Abilene, Texas, and additional sites are planned in Wisconsin, Michigan, New Mexico, and other locations. Several of these projects are not incremental expansions. For instance, one site in Michigan alone is planned at 1.4 gigawatts of capacity.

That figure deserves attention, since most people nod past it without grasping the scale involved. One point four gigawatts represents the electricity demand of a large city, concentrated into a fenced compound filled with servers, cooling equipment, and assumptions about uninterrupted growth.

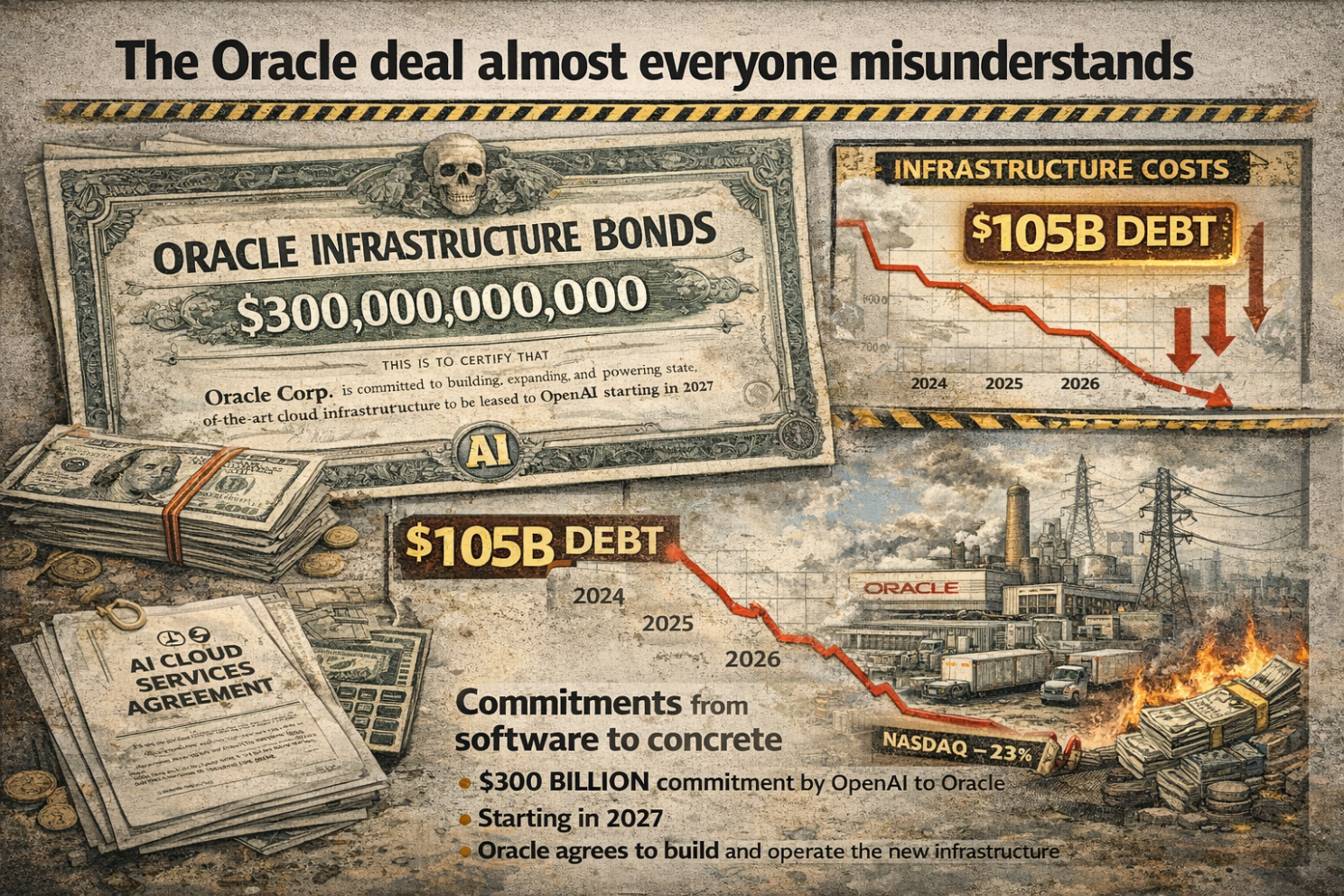

The Oracle deal almost everyone misunderstands

Oracle is not casually placing a three hundred billion dollar bet on OpenAI out of faith or enthusiasm, and much of the public analysis loses the plot here. OpenAI committed to paying Oracle more than three hundred billion dollars over five years, starting in 2027, in exchange for cloud services. Oracle, in turn, agreed to build and operate the infrastructure required to deliver that compute.

The sequencing matters.

Oracle builds first, finances the buildout up front, and recovers the investment over time. The company has taken on enormous debt of roughly one hundred and five billion dollars to do this, and analysts are warning that further borrowing may be required. Of course, investors responded in predictable fashion once capital expenditure began to resemble national infrastructure spending. Oracle’s stock fell sharply, erasing hundreds of billions in market value from Larry’s baby.

This reaction did not reflect confusion. Let that not be your conclusion. It reflected recognition that AI scaling had crossed from software economics into physical constraints.

They understood the move very well. What they reacted to was the realization that scaling AI no longer behaves like scaling software, the stuff they knew from decades of investing in Oracle. For years, software growth meant flexible capacity and fast adjustments when something changed. But this deal showed there’s a different reality looming on the horizon. Scaling AI depends on physical things like power generation, data centers, construction timelines, labor availability, debt financing, and so on. Those elements move slowly, cost a lot upfront, and carry risks that cannot be optimized away with better code.

In their eyes, investing in AI meant they had to abandon their mental model of a software business and had to adopt a mindset more like investing in heavy infrastructure. Markets know how to price software stories. However, they are far less comfortable pricing projects that collide with physics and energy grids, and long-term capital commitments.

That is the conclusion these investors came to, and it matters because it changes how risk is assessed.

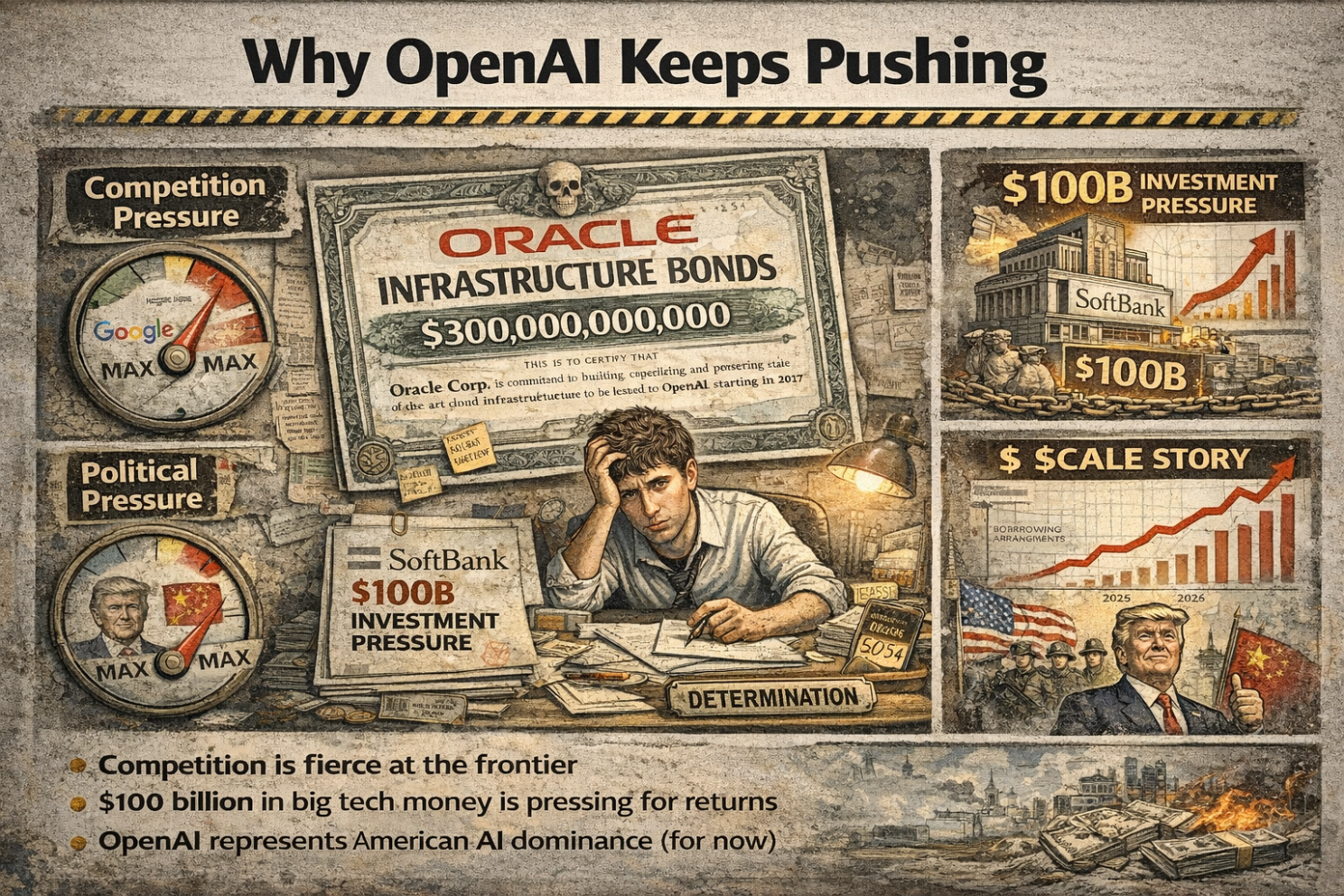

Why OpenAI keeps pushing

OpenAI has little room to slow down. Sam (the scam) has promised the world (read yesterday’s piece). Competition at the frontier is intense, and Google’s Gemini is now showing them that leadership in their market is not guaranteed. Staying ahead of the competition requires access to more computing power than exists today, and patience does not produce advantage in this market.

And financial pressure also reinforces the urgency.

OpenAI needed to move toward a for-profit structure by late 2025 to unlock major funding commitments, including SoftBank’s participation. Without that capital, the Stargate vision contracts rapidly. As a result, OpenAI continues to press forward, even though determination alone does not produce power plants.

And there are more reasons why the pressure on Sam is high. . .

Several other forces keep OpenAI pushing, and none of them involve calm reflection.

OpenAI has spent years telling investors, governments, partners, and customers that scale is unavoidable and that bigger models are the future. Those promises were not casual marketing lines. They shaped our expectations, investors’ funding decisions, and their political support, so slowing down would look like failure rather than caution. That outcome would corner Sam into admitting that his scale story depended on power availability, fast permitting, cheap capital, and smooth construction timelines, and that none of those turned out to be guaranteed. Simply put, that is something the company cannot afford to say out loud.

Once the scale story was out, expedited movement became mandatory.

Then there is platform pressure. ChatGPT sits inside products, and OpenAI has contracts with third parties. Its customers expect progress, new features, smarter models, upgrades, and reliability. Pausing development would break expectations that OpenAI itself created, and those expectations now exert more pressure than research goals ever did.

And geopolitics adds another layer. OpenAI is part of the national story about AI leadership. Trump wants symbols of AI dominance, not restraint. Any visible slowdown weakens that narrative and invites political discomfort, since global attention is already shifting in China’s favor because of its more practical (and less costly) approach to AI.

Add all of them together, and at that point, their scaling narrative cannot be sustained.

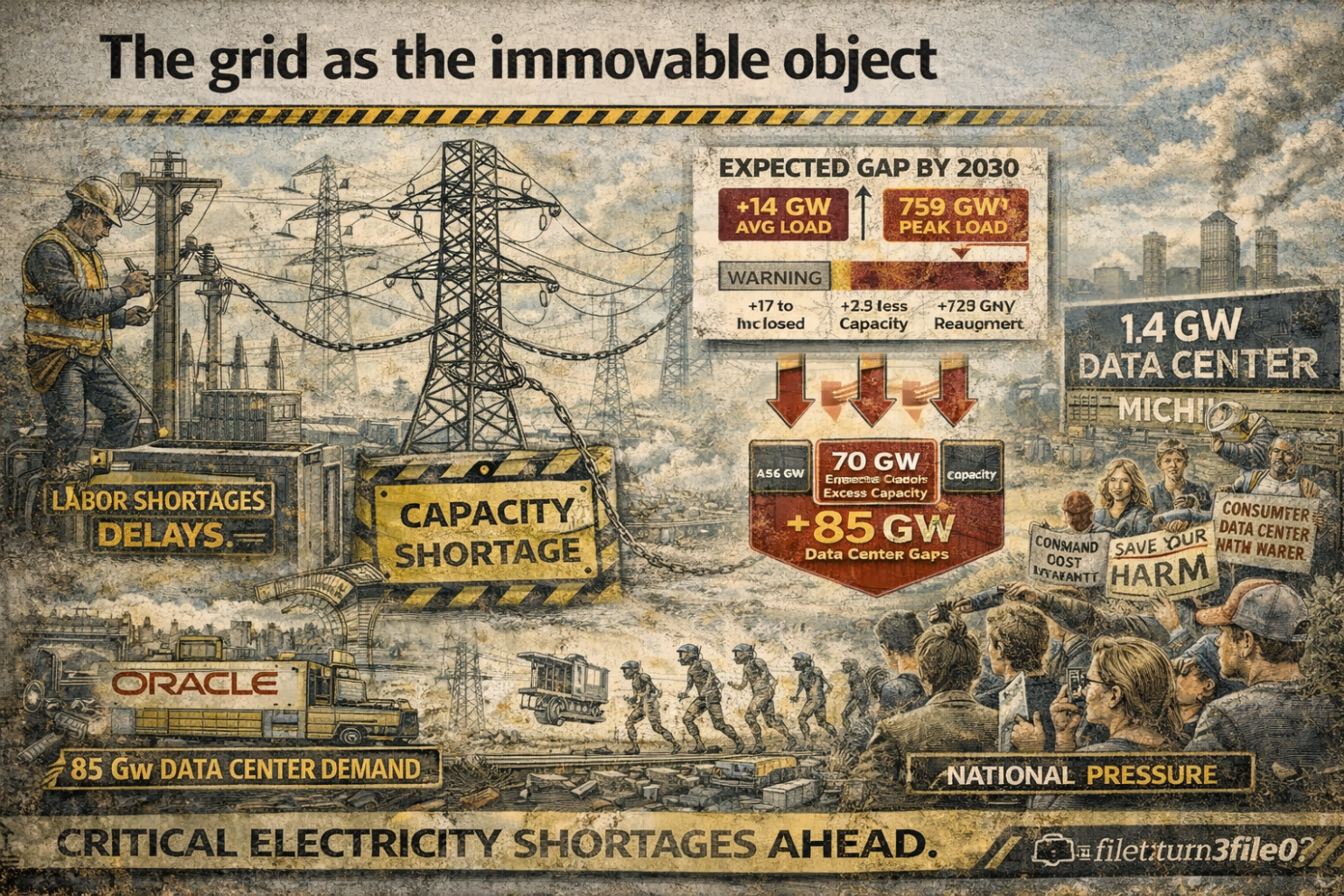

The grid as the immovable object

The most fundamental constraint sits far from the AI discourse. I am talking about the US power grid. The thing is that it lacks the spare capacity required to support the scale of data center expansion that is now being proposed. And while the debate continues, electricity demand is already rising, and large AI facilities only accelerate that trend.

Forecasters say that US electricity consumption will increase by more than seventeen percent by 2030, and that figure climbs much higher if data center plans proceed as Sam envisioned. The grid has roughly seventy gigawatts of excess capacity available during peak periods, and data center developers are already requesting closer to eighty-five gigawatts by the end of the decade.

The US is already an electricity leviathan. The Energy Information Administration’s 2024 US profile shows total retail electricity sales of about 3,975,381,832 MWh (roughly 4,000 TWh) across the year. Spread that across 8,760 hours and you get an average load near 454 GW, but peak demand goes far higher, with the Lower 48 hitting 759,180 MW during the July 2025 heat event.

Now place the data center wish list next to that. S&P Global expects US grid-based electricity consumption to rise 17 percent by 2030 versus 2025, with a 23 to 25 percent scenario if data center ambitions land as planned. During the most constrained hours, excess generating capacity across the US totals about 70 GW. Against that, the pipeline of new data center capacity requests expected by 2030 sits around 85 GW, and S&P notes that reliably serving that 85 GW calls for about 100 GW of total capacity including surplus.

Put differently, that 85 GW request pipeline is about 19 percent of today’s average US load and still around 11 percent of that July 2025 peak, and it is competing for capacity exactly when the grid has the least slack.
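
For anyone who wants to check the arithmetic, here is a minimal back-of-envelope sketch in Python. It uses only the figures quoted above (EIA retail sales, the July 2025 peak, and the S&P capacity numbers). The function and variable names are mine, and the 8,760-hour year is the same simplification used in the text.

```python
# Back-of-envelope check of the grid figures quoted in the text.
# Every input is a number from the article; nothing is independently sourced.

HOURS_PER_YEAR = 8_760  # same simplification as in the text


def average_load_gw(annual_sales_mwh: float, hours: int = HOURS_PER_YEAR) -> float:
    """Average load in GW implied by annual energy sales in MWh."""
    # MWh divided by hours gives MW; divide by 1,000 to get GW.
    return annual_sales_mwh / hours / 1_000


def share_percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


retail_sales_mwh = 3_975_381_832  # EIA 2024 retail sales
peak_gw = 759_180 / 1_000         # Lower 48 peak, July 2025, converted from MW
pipeline_gw = 85                  # data center requests expected by 2030
excess_gw = 70                    # spare capacity in the most constrained hours
required_gw = 100                 # capacity needed to serve the pipeline reliably

avg_gw = average_load_gw(retail_sales_mwh)

print(f"Annual sales:          {retail_sales_mwh / 1e6:,.0f} TWh")
print(f"Average load:          {avg_gw:.0f} GW")
print(f"Pipeline vs average:   {share_percent(pipeline_gw, avg_gw):.1f} %")
print(f"Pipeline vs peak:      {share_percent(pipeline_gw, peak_gw):.1f} %")
print(f"Requests minus excess: {pipeline_gw - excess_gw} GW")
print(f"Required minus excess: {required_gw - excess_gw} GW")
```

The last two lines are the uncomfortable part: requests exceed the spare capacity by 15 GW on paper, and by 30 GW once you count the surplus needed to serve them reliably.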

This gap reflects a structural mismatch rather than a negotiable disagreement. Timing makes the situation worse. The most severe constraints are expected at the end of the decade, precisely when OpenAI and Oracle expect these facilities to operate at full capacity.

Demand clusters where the grid is weakest

The situation deteriorates further due to geography. A significant share of planned data centers sits in regions where grid capacity is already under strain, including the PJM network covering much of the eastern United States. Concentrating demand in these areas also lengthens approval delays and, of course, stiffens resistance from the people living there.

When you look at it from the perspective of grid operators, the picture looks like a small number of extremely large customers that are requesting power that does not yet exist, and to make things worse, it is in locations where adding supply is slow and expensive.

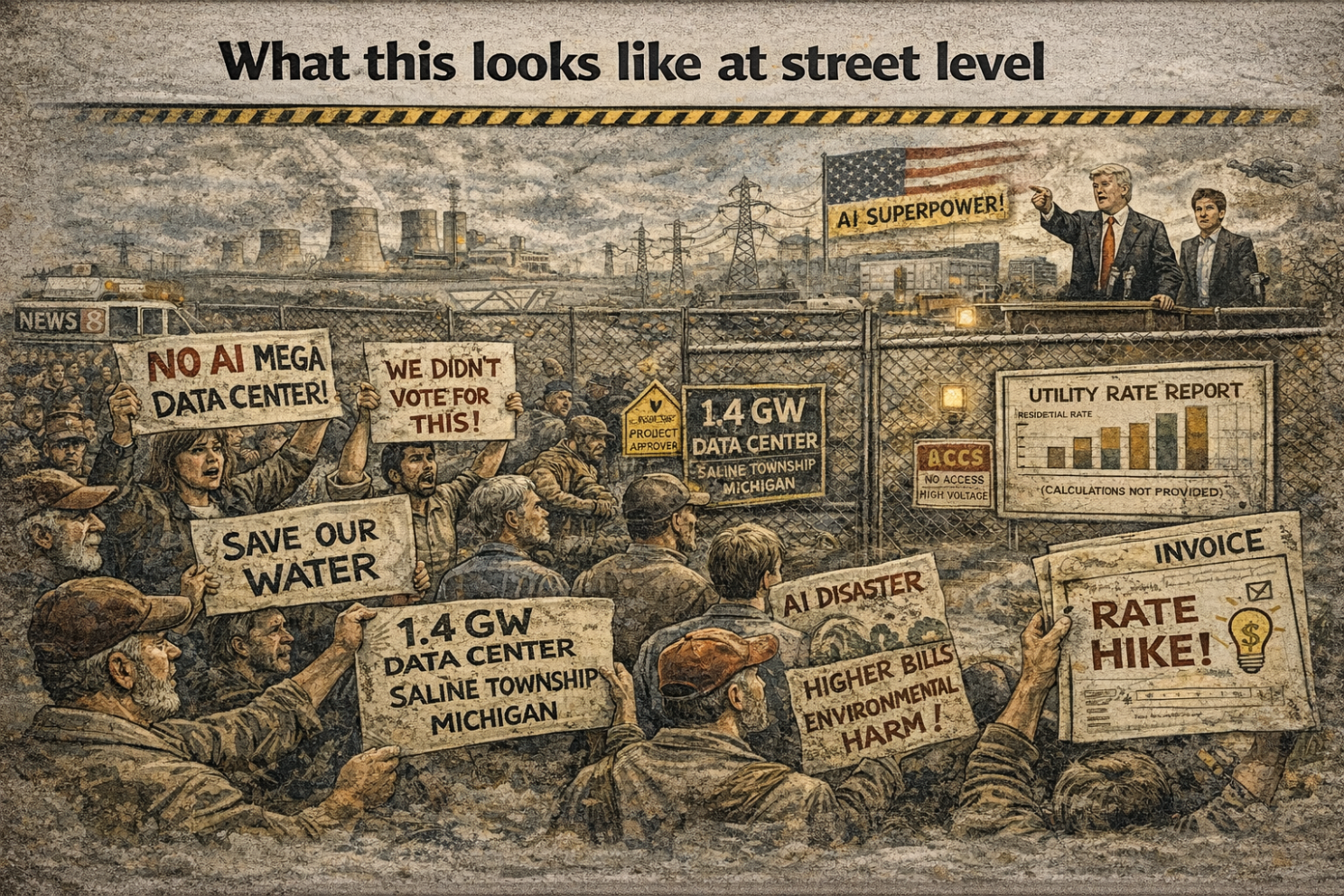

What this looks like at street level

The Michigan project in Saline Township is a good example of how these dynamics play out locally. The local regulators approved power delivery for a 1.4 gigawatt data center even though there was heavy opposition from residents. Their concerns were about rising electricity prices (because consumers are now competing with AI data centers), the project’s incredible water use, to the point that water for residents could become scarce, and the impact on the environment. But approval moved forward regardless due to federal and presidential pressure.

The local utility providers keep saying that residential rates will remain unaffected, but when you ask for detailed calculations, none of these companies ever comes forward. Viewed from outside, the project appears as progress, but experienced from within the community, it is more like they got conscripted into a national infrastructure effort without their consent.

And under those conditions, Sam and Trump’s talk of AI dominance acquires a very different tone.

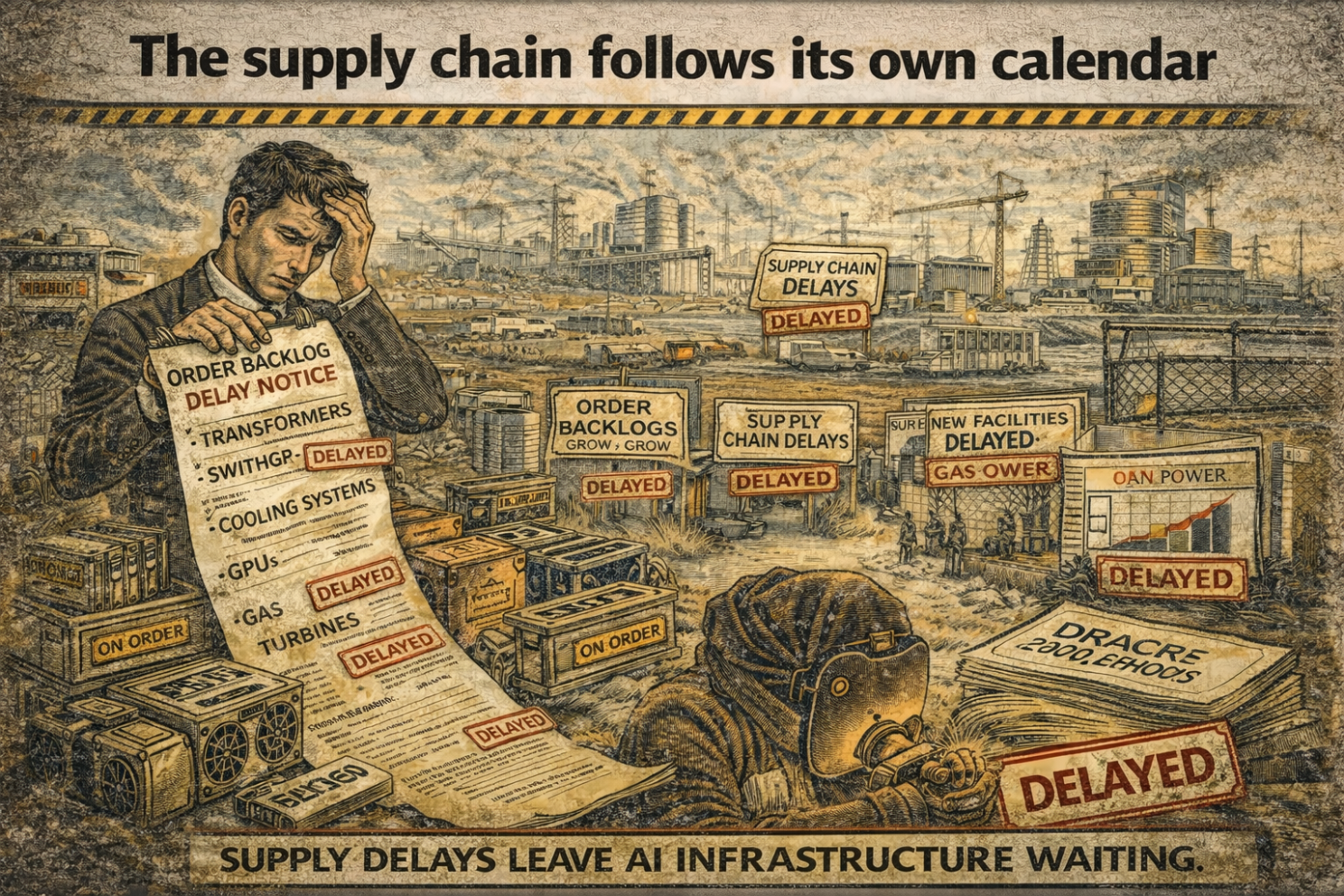

People remain a hard limit

Even with unlimited power, human capacity remains constrained. The construction industry faces a shortage of hundreds of thousands of skilled workers. Data center projects compete aggressively for electricians, engineers, and supervisors, which drives wages higher and extends delivery timelines.

Oracle itself has already cited labor shortages as a reason for delaying certain projects, and this will not get solved on short notice, because skilled trades take time to develop and no amount of capital accelerates that process meaningfully.

The supply chain follows its own calendar

Equipment availability introduces another type of friction. I’m talking about hardware like transformers, switchgear, high-capacity distribution hardware, and industrial cooling systems. They all face long production lead times while global demand is surging. The pressure also extends upstream to GPUs and memory, and as a side effect, large-scale purchases are affecting consumer GPU prices.

Power generation equipment moves slowly as well. Demand for gas turbines has reached levels not seen in decades, and many new facilities will not come online until the late 2020s or early 2030s.

The thing is that when the industry made these promises of scaling AI, they had clearly not investigated how these energy systems are actually built. Reading this post could’ve brought Sam and Larry back down to earth: Icarus bought Wi-Fi and now we won’t have to worry about AI anymore

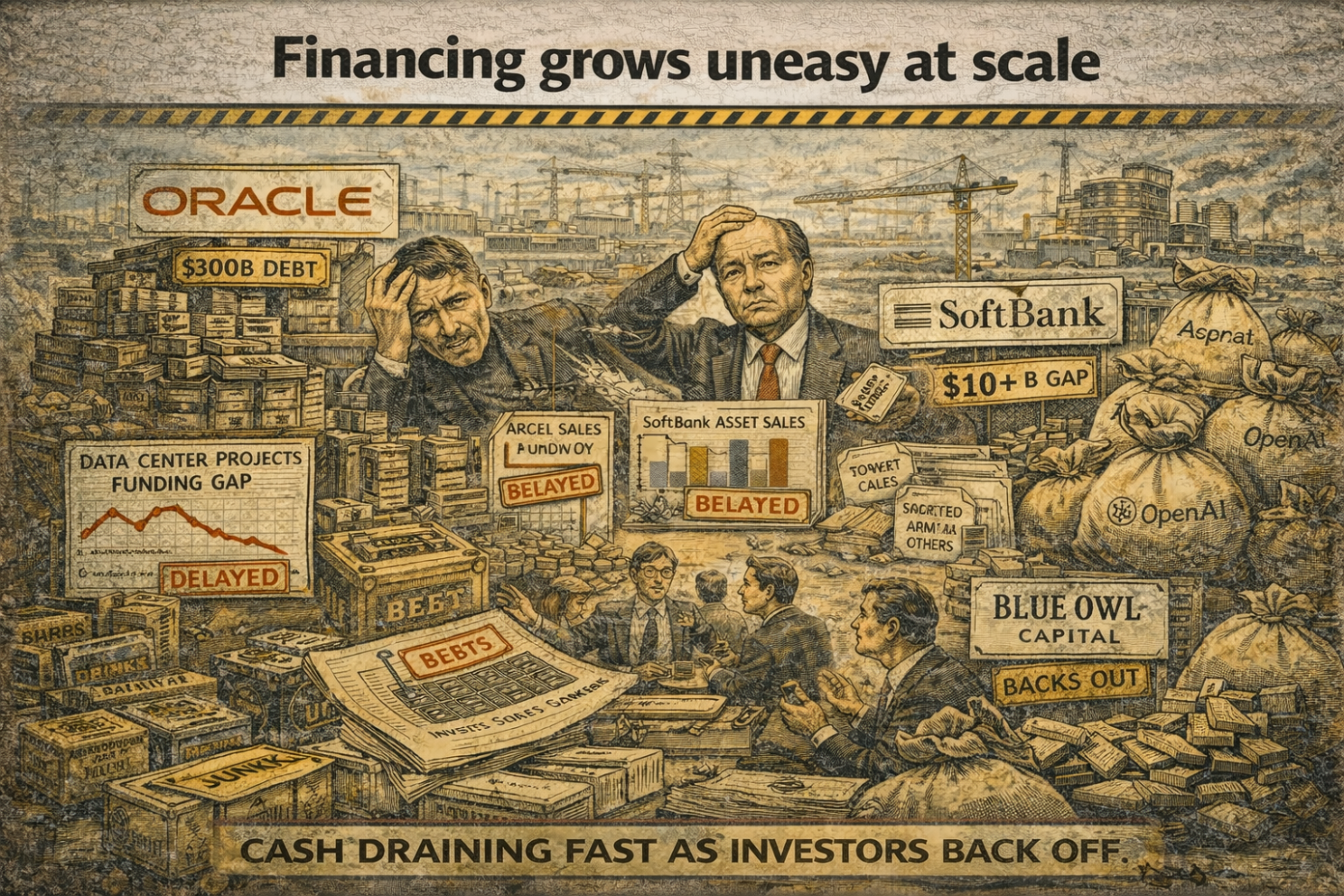

Financing grows uneasy at scale

At this magnitude, even deep pools of capital hesitate. Oracle’s debt burden of roughly $105 billion has unsettled its investors, and Blue Owl Capital even stepped away from funding the Michigan data center. Other firms may step in, but the hesitation itself signals changing perceptions of risk.

SoftBank has its own problems now. It still needs to come up with tens of billions of dollars to meet the promises it made around OpenAI. That money does not appear on its own, so SoftBank has been selling assets and cutting down some of its stakes in other companies to raise cash. Those moves do not signal confidence. They signal that the company feels boxed in and needs liquidity fast.

The larger issue sits under the surface. This whole financing plan only works as long as investors keep believing that the returns will be huge, and that those returns will arrive on a reasonable timeline. Delays make that belief harder to maintain. Construction slipping, permits dragging, power connections taking longer than promised. All of that gives investors time to doubt the story, and doubt is expensive in a project funded by big bets and borrowed money.

So SoftBank keeps moving, even when it looks messy, because hesitation turns into questions, and questions turn into higher costs or missing funding.

Resistance becomes organized

Pushback against these mega data centers is no longer limited to activists with signs and petitions. Regular residents are getting involved as well because the costs feel personal. People worry about higher electricity bills, especially when a single facility can pull power like a city. People worry about water being diverted for cooling, and about what happens to local supplies during dry periods. People worry about emissions rising when utilities keep fossil plants running longer to meet demand. Just as important, they worry about how the decisions are made, since the key choices often happen far away, in state offices, corporate boardrooms, or federal agencies, long before the community even gets a real chance to weigh in.

Environmental groups have also stepped in at scale. More than two hundred organizations have raised concerns about the combined impact of many data centers piling into the same regions, stressing water systems, power grids, and climate goals all at once.

Politics gets messy fast in this environment. National leaders talk about AI dominance and strategic infrastructure, while local officials and residents talk about zoning, utility costs, and basic fairness. Those agendas clash, since one side thinks in decades and competition, and the other thinks in bills and daily life.

Ohio is a good example of how regulators are trying to respond. The state introduced a rule that forces large data centers to pay for most of the power they reserve, even if they end up using less. That policy is not a culture-war gesture. It is a way to stop regular customers from paying for grid upgrades and capacity built for huge corporate loads. It is the system admitting that risk has to be priced, and someone has to carry it.
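
To make the mechanism concrete, here is a minimal sketch of how such a minimum-take rule could work. This is not the Ohio tariff itself. The text only says “most” of the reserved power, so the `minimum_share` of 0.85 and all megawatt figures below are illustrative assumptions, and the function names are hypothetical.

```python
# Sketch of a minimum-take ("reserve it, pay for it") billing rule.
# ASSUMPTION: minimum_share and all MW values are illustrative placeholders,
# not figures from the Ohio rule described in the text.


def billed_demand_mw(reserved_mw: float, actual_mw: float, minimum_share: float) -> float:
    """Demand the customer is billed for: actual use, but never below the floor."""
    if not 0 <= minimum_share <= 1:
        raise ValueError("minimum_share must be between 0 and 1")
    floor_mw = minimum_share * reserved_mw
    return max(actual_mw, floor_mw)


def paid_but_unused_mw(reserved_mw: float, actual_mw: float, minimum_share: float) -> float:
    """Capacity the data center pays for without using it."""
    return billed_demand_mw(reserved_mw, actual_mw, minimum_share) - actual_mw


# A hypothetical site reserves 1,000 MW but only draws 400 MW.
reserved, actual, share = 1_000.0, 400.0, 0.85

print(f"Billed demand:   {billed_demand_mw(reserved, actual, share):.0f} MW")
print(f"Paid but unused: {paid_but_unused_mw(reserved, actual, share):.0f} MW")
```

Under these assumed numbers the gap between what was reserved and what was used lands on the data center’s bill rather than on household ratepayers, which is the whole point of the rule.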

Environmental costs come into focus

Large AI data centers do not just sit there running software. They draw huge amounts of electricity every hour of the day, and they also need large volumes of water for cooling, depending on the design. When you build enough of these facilities, the impact starts showing up in local systems people already depend on.

Estimates put the emissions footprint in a sobering range. Annual emissions tied to AI could reach tens of millions of tonnes of carbon and water usage could reach hundreds of billions of liters. Those numbers matter because they do not stay inside the fence line. They affect local water supplies, local air quality, and the broader energy mix used to keep the lights on.

Facilities at this scale also shape energy policy, even if nobody admits it directly. Utilities and regulators start delaying the retirement of older power plants, since they cannot take generation offline while demand spikes. New power generation then gets pushed forward fast, and urgency often favors what can be built quickly, meaning more gas and other fossil options. Climate targets become harder to hit, not because anyone changed their values, but because the grid is being forced to serve new industrial loads at speed.

So the cloud turns out to be physical after all. It requires land, water, and power, and it leaves marks that people can see and measure.

Questions markets cannot ignore

Investors are starting to ask the obvious question. What happens if AI demand does not grow as fast as everyone promised, if customers slow down spending, or if the next wave of products does not create the expected explosion in usage? And I’m not even mentioning Sam’s AGI pitch, his ultimate promise that I explained in depth in yesterday’s article.

A second version of the same question is just as uncomfortable.

What happens if engineers get better at doing more with less, so models become more efficient and need fewer GPUs to deliver the same results?

We’re already seeing the first successes being published. New models like Google’s Titans and the new class of models called World Models, but also small language models that keep getting better, and of course more exotic stuff like biological computers.

Those scenarios do not require anything dramatic.

They only require reality to be slightly less excited than the forecasts.

The risk shows up in the buildout itself.

If companies build data centers for a future that arrives later, or arrives smaller, they end up with expensive facilities that are underused. The money that funded those facilities often comes from debt, so the cost does not disappear when demand slows. It stays on the books, and it keeps charging interest. The infrastructure also sits in real places, often in towns and rural areas that never asked to host a huge industrial site. If the jobs and local benefits turn out to be lower than promised, the community gets the downsides without the payoff.

This is why markets are nervous.

A full collapse is not required to create damage. Slower growth, better efficiency, or a delayed timeline can produce the same problem, just with a quieter kind of pain.

And when there’s just a tiny hint of a delay or decline in some part of this intertwined ecosystem of moving parts around the main AI players, the bubble will simply pop.

What happens next once the hype runs into the calendar

Everyone wants a clean ending to this story. Investors want the version where infrastructure appears on time, customers keep paying, and the grid stretches itself like yoga rubber. Politicians want the version where the country “wins AI” and nobody notices the price tag until after the election cycle has moved on to a new panic. OpenAI wants the version where Sam keeps promising the moon and the moon keeps showing up with a receipt already paid. And us, the consumers, we simply want good AI, and not to lose our jobs.

Reality wants something else.

It wants permits, components, power, labor, water, and money that stays cheap and it wants the timelines to stay long. Reality wants a planning horizon that does not fit inside a quarterly planning cycle and it wants people to accept a giant industrial project landing next to their town and to clap while it happens.

So here is what will happen next.

Finance starts acting like an adult

The first change will show up in the boring place that runs everything.

Money.

For a while, the AI buildout could live on momentum and FOMO, and investors could tell themselves that compute demand would grow forever and that cost of capital would remain kind to them. That mood will not last, since debt has a personality, and its personality gets worse when the timelines slip.

We will see more projects move into a refinancing phase, and that is where confidence gets tested in public. Rates go up. Covenants tighten. Partners start renegotiating terms with the same smile they used in the original announcement. Deals will still happen, yet they will happen on harsher conditions, and the headlines will keep calling it “strategic” because nobody wants to say “we are trapped”. I wrote about it extensively in my piece on Nvidia’s special purpose financing vehicle, the SPV, read: Nvidia’s glorious, terrifying AI empire is being held together with debt and duct tape

Oracle will keep balancing the buildout against the market’s tolerance for leverage, and every delay will give analysts a new excuse to sharpen their knives. SoftBank will keep finding cash in the couch cushions of its portfolio, and that will continue to look like pressure rather than strength, since strength tends to involve choices.

Capital will not disappear, although it will demand more control, more collateral, and more proof that returns arrive on a timeline that does not require a suspension of disbelief.

Permits become the real arena

The next phase will also move into local politics, since the fastest way to slow a project is a community that refuses to play along.

Expect more fights around zoning, water rights, and environmental reviews. Every time a state pushes a project through with minimal local input, the next town learns the lesson and organizes earlier, and when a utility insists rates will stay flat and still refuses to share the calculation, trust predictably erodes further.

Developers will respond with the same playbook every industry uses when it wants speed. More lobbying. More attempts to frame dissent as ignorance. That framing will backfire, since nothing motivates a community like being told it does not understand its own electricity bill.

The outcome will be a patchwork of lawsuits, delays, and compromises, and the compromises will increasingly include real concessions rather than symbolic ones.

The grid starts setting terms

At some point, grid operators and utilities will start behaving like the planning authority they actually are. Interconnection queues will keep acting as a hard limiter. Upgrade costs will keep climbing. Timelines for new transmission will keep looking ridiculous to people who think software roadmaps are reality.

In some regions, data centers will be asked to accept curtailment agreements, flexible load contracts, or priority shedding arrangements that make them the first to reduce demand during emergencies. That change will not be presented as rationing, since nobody likes that word. It will be framed as resilience and grid partnership, since branding remains the last safe refuge.

On-site generation becomes the new normal

When grid access turns into a bottleneck, the next move is obvious.

Bring your own power.

More data center projects will arrive with plans that include on-site gas turbines, mini nuclear plants, large generator installations, battery systems, and fuel supply arrangements that look suspiciously like a private utility. Some sites will effectively become energy projects with a compute workload attached, since that configuration reduces dependence on an overstretched grid.

Even if the energy part of the equation is solved, water use will remain on the table. Communities that already dislike the data center will dislike the mini power plant even more, and regulators will be forced to deal with the fact that “the cloud” now comes with combustion.

That is the point where the public finally understands what the infrastructure buildout actually means.

Regulation stops pretending this is ordinary growth

States will keep experimenting, and several of those experiments will become mainstream.

Expect more rules similar to Ohio’s approach, where large data centers pay for a large share of the capacity they reserve even if they do not use it, since the grid still has to build around the reservation. Expect more requirements for upfront financial guarantees tied to interconnection upgrades. Expect more scrutiny of who pays when new transmission is required.

Policymakers will frame this as protecting ratepayers, and the framing will be accurate. A political system can tolerate a lot, yet it struggles to tolerate an obvious transfer of cost from a private company to ordinary households.

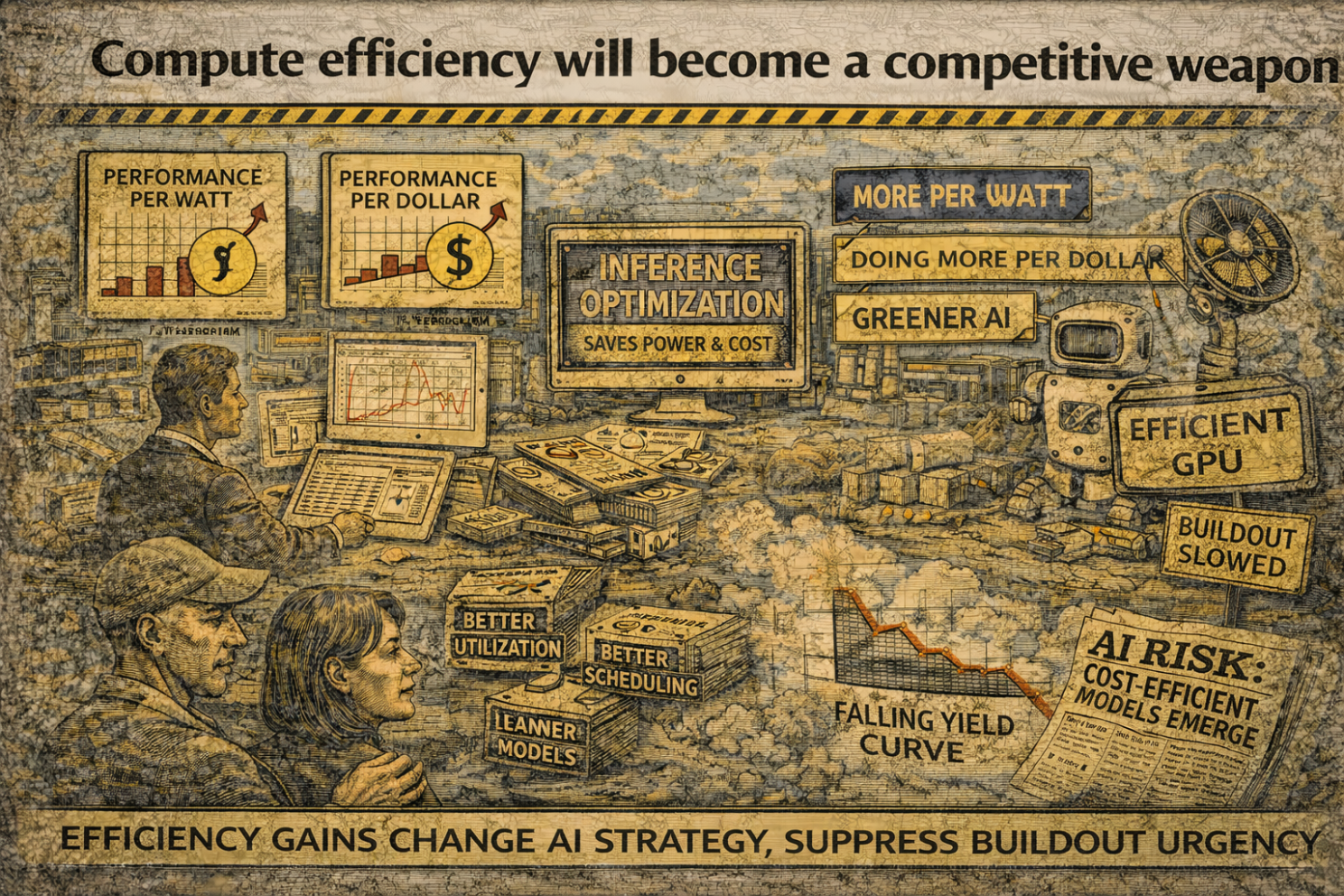

Compute efficiency will become a competitive weapon

While the physical buildout slowly moves forward, engineering will start compensating for the limits.

Better utilization, better scheduling, inference optimization, and model architectures that reduce brute force demand. These techniques already exist, and their importance increases when power, labor, and financing refuse to scale on command.

This shift will show up in messaging too. Vendors will start talking about “doing more per watt” and “performance per dollar” with the urgency of people who just noticed electricity has a price. Efficiency will not replace infrastructure buildout, although it will change the curve and it will raise a new risk that markets already smell.

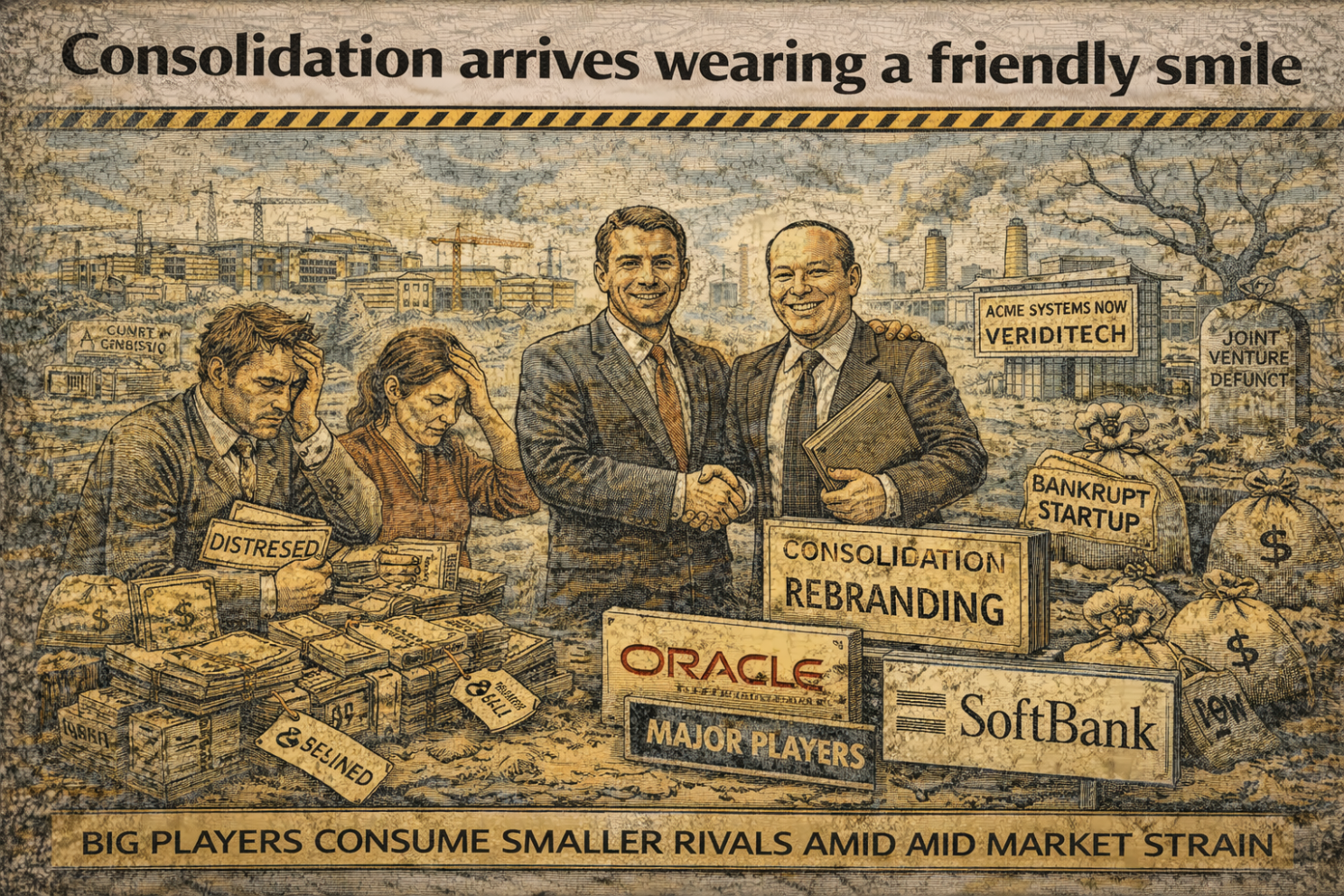

Consolidation arrives wearing a friendly smile

When capital gets more selective and timelines stretch, smaller players suffer first.

Expect consolidation across developers, operators, and financing partners. You will probably see distressed assets getting acquired and joint ventures getting reshuffled. And of course there will be a lot of rebranding, since rebranding is cheaper than admitting a plan changed.

The winners will be the players who can survive higher financing costs without losing control. That description fits large infrastructure funds and the biggest tech aligned operators, and it does not fit the smaller optimism-driven entrants who thought data centers were a quick win.

The story shifts, since it has to

The story the likes of OpenAI, Oracle, and all of the other investors desperately want to keep intact is the one about infinite scaling with frictionless cloud capacity. Even though some AI hardliners like Geoffrey Hinton keep believing in scaling LLMs, more and more experts are moving away from this operating model.

So, the next story will talk about disciplined deployment, sovereign infrastructure, responsible growth, regional planning, grid partnerships, and efficiency. The words will change, and the underlying pressure will remain.

In their eyes, investing in AI required abandoning the mental model of software scaling and adopting a mindset aligned with heavy infrastructure. That shift will keep spreading, since every stakeholder is being forced into it, whether they like it or not.

Signing off,

(Finally, again)

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.
