I have written a lot about OpenAI. That makes sense, since they were the ones who put my profession in the spotlight. In the beginning I was very positive about its chef d’équipe, Sam Altman, because of his major feat. But after reading up on the man’s path to glory and all the false promises he made along the way, I decided to give him an epithet: Sam “The Scam”.

But why, exactly?

This article is about that “why”. Not because I’m addicted to edgy nicknames, but because the pattern that emerges from the way the man conducts his business is so consistent that it deserves a nickname. And because the bill attached to his “trust me” pitch is now big enough to have its own weather system.

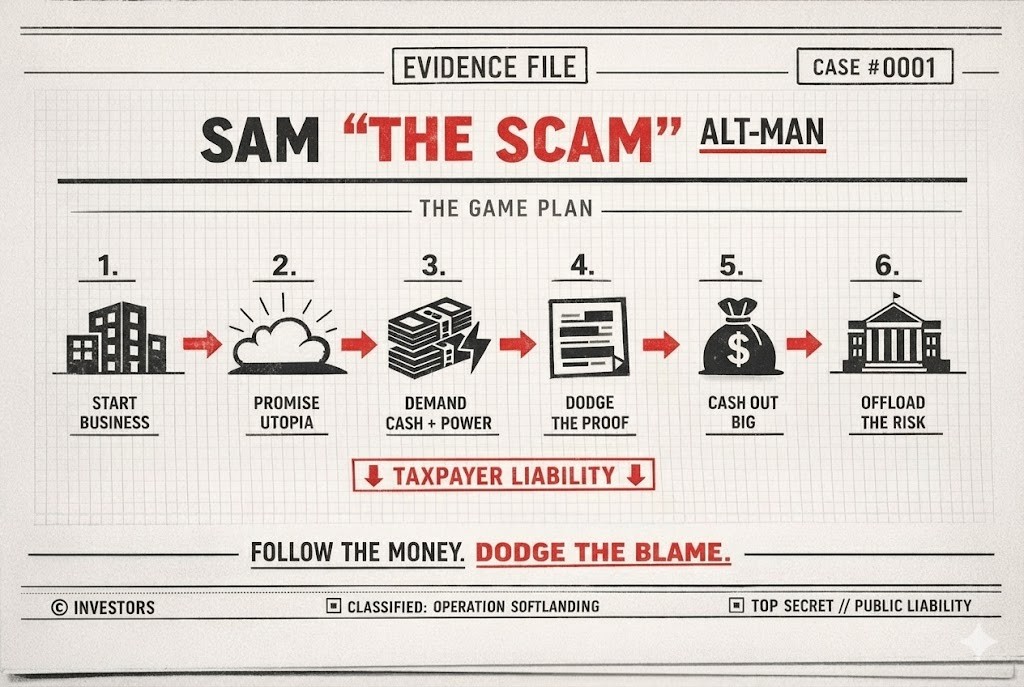

If you want the short version before I go full cinematic doomscroll as usual, here’s the whole arc in the only format Silicon Valley genuinely respects: numbered steps.

1. Sam has an idea and sets up a business (ChatGPT wasn’t his first).

2. He then promises utopia if people believe in him.

3. Then he asks for everything: lots and lots of cash, infrastructure, and power.

4. Next, he dodges the proof with a skill only the CIA has.

5. Then he sells his shit and collects the money.

6. And lastly, he offloads all the risk onto the taxpayer.

Yes, you. Where did you think the money that funds his hobbies comes from? It’s not only SoftBank and NVIDIA, you know. It’s also your hard-earned cash that fuels his visions of grandeur.

But hold on, I don’t want to start ranting too soon.

Let’s walk through the story properly, as if it were a real article, with a true storyline ‘n all.

More rants after the messages:

- Connect with me on LinkedIn 🙏

- Subscribe to TechTonic Shifts to get your daily dose of tech 📰

- Please comment, like or clap the article. Whatever you fancy.

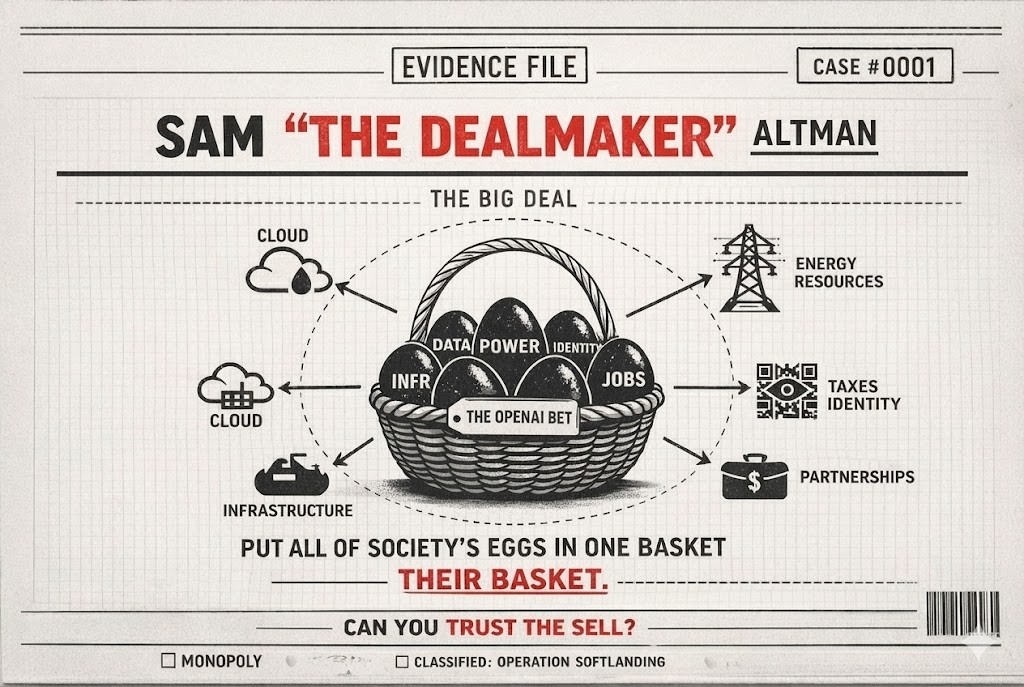

Sam the dealmaker

Sam “The Scam” Altman wants to sell you a future. Not a product, mind you. A future. A clean, glowing one, in fact. And it’s a future where artificial intelligence does what governments, markets, medicine, education, and basic human decency somehow failed to do for centuries.

He has said in many interviews that AI, once it reaches AGI (like, magically smart AI), would solve the housing crisis and cure cancer. Not just one cancer, but this cancer and that one, and oh, heart disease too. And before I forget, he also threw poverty into the mix, which would somehow collapse. Climate change would become a fixable line item in the government’s budget, mental health would improve (I only see it declining due to AI), democracy would survive the internet (if it survives Trump first), and something called Universal Basic Income would appear like a warm blanket, so no one really has to work one more minute. Oh yeah, and energy costs would melt toward zero, scientific discovery would accelerate, healthcare would become “very high quality”, and equality would increase. Shall I go on? In the grand finale, we get universal extreme wealth for everybody, because why not. All of these promises are captured in interviews that aired on YouTube, and below you’ll find an overview of all of them. Have fun watching.

When Sam writes fan fiction, he writes the deluxe edition. That is what you usually get when negotiating with the devil, and, like the devil’s, his promise of utopia comes with a very “modest” request.

His ‘deal’ for all-o-that is “seductively simple”. He wants society to put all its eggs in one basket. His basket. Our data, our economy, our electricity, our water and resources, our infrastructure, our tolerance for disruption.

Everything.

His basket.

It’s one massive “just trust me, bro”, but with better fonts.

And this is where the nickname “the Scam” stops being childish and gets rather descriptive. Because the question is not whether the dream sounds too good to be true. His dreams always sound too good. The question is whether you should trust the salesman. Whether the receipts match his poetry. Whether the person asking you to rebuild civilization around his roadmap has earned the right to be believed.

In this piece, I’ll take the man’s credibility and slice it up, one claim at a time.

If Sam were a technologist or a scientist, maybe you’d at least argue about the science.

But he ain’t.

He is an investor and a dealmaker. A very skilled one, supposedly. And his career reads like a long chain of “trust me” moments where the evidence either arrives late or never shows up at all.

Let’s start this story with a short tale where the pattern begins to surface . . .

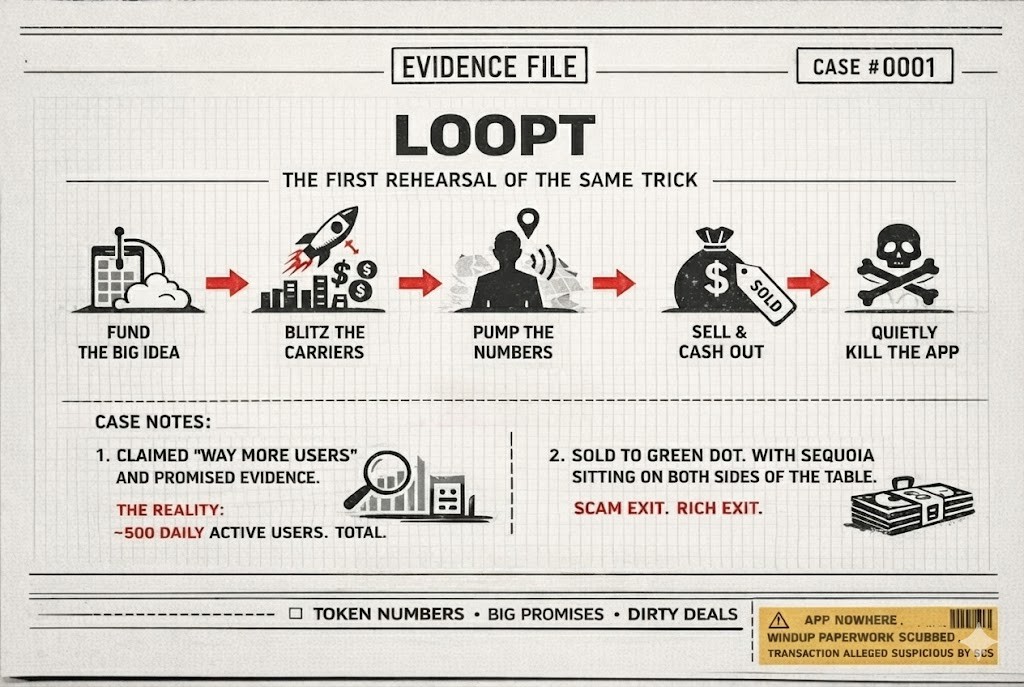

It’s a small app, with a big narrative, and a number that didn’t want to be seen.

I am talking about his first app: Loopt.

The first rehearsal of the same trick

Before OpenAI, before the global stages, before the “future of humanity” speeches and “tech for a better world” branding, there was Loopt. It was a location-based service that helped you find your friends. Innovative? Not really, and you probably never played with it either, because it wasn’t a success. It was a social app that inherently required lots of users to function, because without critical mass you’re not locating your friends, you’re only locating yourself. Alone. Repeatedly. As usual.

But, with great accuracy.

He marketed Loopt with the same language of scale and ubiquity.

And that “ubiquity” dream didn’t run on hope alone. It ran on lots of venture money. Loopt got early backing from Y Combinator, then raised a $5M Series A from NEA and Sequoia, which basically funded the whole “we’re everywhere” strategy: business development with carriers, device support, and the privilege-heavy location integrations you couldn’t just ship from a dorm room. By the time Loopt was expanding carrier by carrier, it had raised about $17M total over three rounds (seed included), and that helped get carriers to officially support it.

His idea was to get it “out there in more ways than you can possibly imagine”. He didn’t rely on “viral growth” or app stores (this was the pre-App Store era). He pushed Loopt into carrier distribution, first launching with Boost Mobile, backed by the “Where you at?” ad campaign, then expanding to Sprint and later Verizon, so Loopt was officially supported on lots of carrier handsets and could spread across networks.

He said it was a success and boasted that the platform had 50 thousand daily users, but the company refused to disclose any hard numbers on how many people were actually using it. Sam insisted Loopt had “way more users than any other similar service”. Eventually, Reuters reported the metrics the company wouldn’t: Loopt had about 500 daily active users near the end. That’s the size of a medium-sized WhatsApp group.

But Sam’s response was not to carefully correct the record. He outright claimed the reported number was off by a factor of 100 (which would put it right back at his 50 thousand) and promised he would provide evidence.

But he never did.

Because Loopt was sold to Green Dot Corporation. And Green Dot shut it down immediately and never used the technology. Investors said that the acquisition was a dirty deal meant to enrich Sequoia Capital, which had a stake in Loopt and had two board members at Green Dot who helped approve the purchase. In other words, the app died, the deal lived, and Loopt’s “power network” went nowhere.

Sam left Green Dot as soon as he was legally able, and he walked away with millions for building an app that no longer existed in any form.

That is the origin story. Not one about genius engineering. Sam learned a lesson here: you don’t have to build a lasting thing if you can sell a believable story and get paid before reality shows up.

Today, the evidence of his frock-up has vanished, but the strategy stayed: when confronted with inconvenient facts, you don’t argue, you simply create a more compelling alternative reality and ask people to believe in it.

Just trust me, bro. His personal mantra.

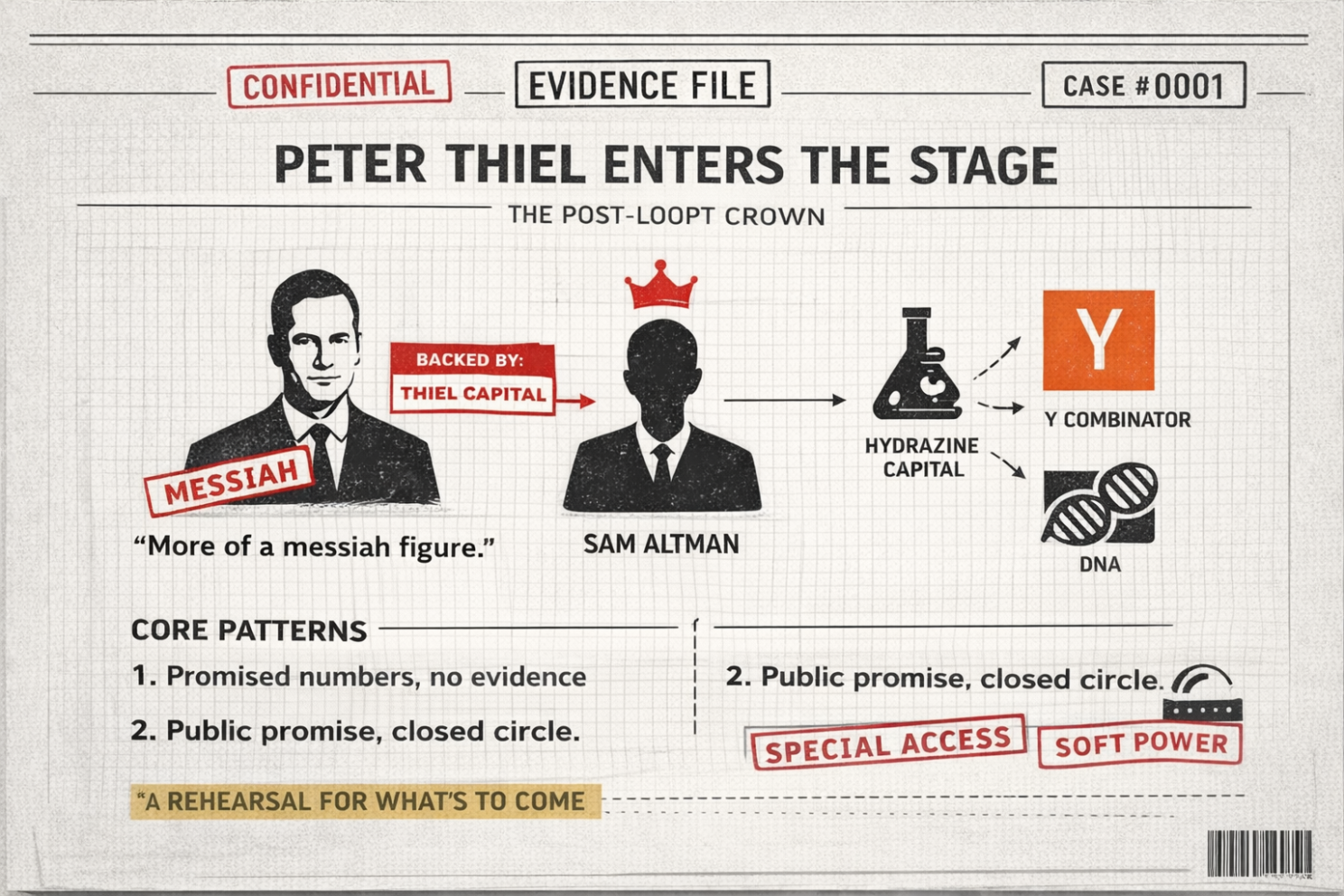

Peter Thiel enters the stage

You’d think that after Loopt, Sam’s trajectory would have collapsed, but it didn’t. It accelerated. Because Silicon Valley doesn’t punish a failed product if “the deal” still works.

It rewards the ability to move through power structures without looking guilty.

Then Peter Thiel came along.

Thiel once said that Sam should be treated as “more of a messiah figure” than an entrepreneur. That isn’t casual praise, it’s more of a coronation. And Thiel didn’t stop at words: he backed Sam with millions to start his own venture capital firm, Hydrazine Capital.

You gotta know that hydrazine is a chemical compound with the formula N2H4, a fuming liquid with a talent for making safety officers age in dog years. It is chemically violent, aggressively energetic and biologically brutal. It’s toxic at very low levels, a probable carcinogen, prone to self-ignition, and, last but not least, used as rocket fuel. I like to think that’s why Sam chose the name, because it mirrors his DNA: trustworthy on the outside, energetic, but also toxic for the environment.

Around the same time, Sam was appointed president of Y Combinator by its co-founder Paul Graham, the guy who started the incubator and venture institution that had funded Loopt early on. YC needed to get bigger, and Graham didn’t want to manage that growth (he wasn’t “much of a manager”), but Altman was. The president of YC is widely treated as the unofficial leader of the startup movement, which means access, an inside view, and a first look at what might become valuable.

And Sam used it: as a dealflow telescope, a reputation amplifier, and a gatekeeping lever. And he treated that lever like it came with a golden throttle.

He publicly promised he wouldn’t cross-invest in YC companies, to keep things transparent. In practice he did: reporting later revealed that as much as 75% of Hydrazine’s capital was invested in YC companies.

So now we have two core patterns documented early on in his career.

- A refusal to provide inconvenient numbers, followed by a promise of evidence that never arrives.

- A public promise about conflicts of interest that collapses under scrutiny.

This is the background of Sam, and a rehearsal for his stint at OpenAI.

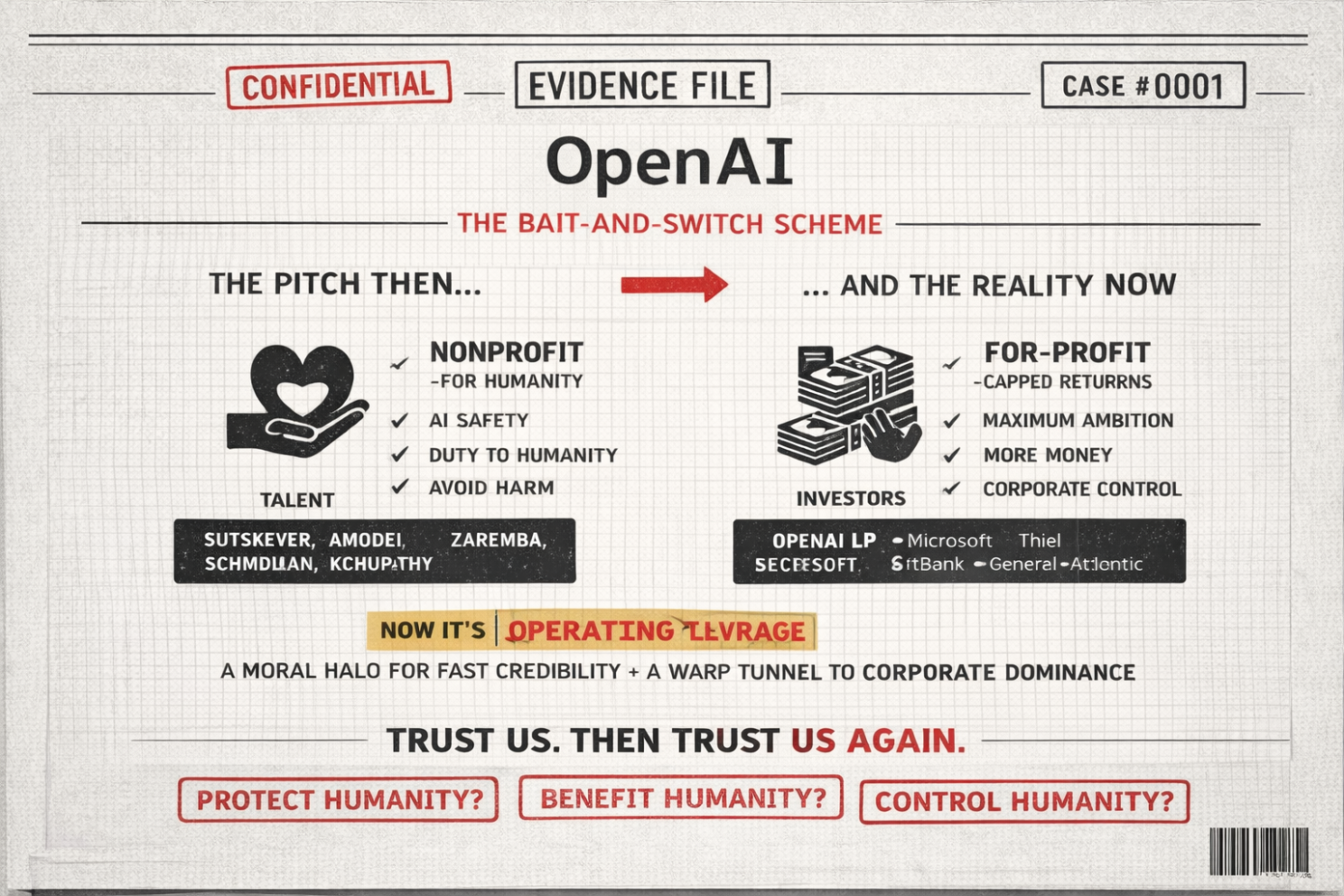

OpenAI. The bait-and-switch scheme

The idea for OpenAI didn’t arrive as a lightning bolt of pure “building AI to improve the quality of life” ethics, the way he orates about it now. According to OpenAI’s own account, a guy called Greg Brockman and Sam were already planning the project in late 2015 and initially aimed to raise $100 million. Now that’s a number you pick when you want to sound serious but not outright delusional. But then Noel Skum got involved early and pushed them to announce a $1 billion commitment instead. He reportedly said that $100M would make them “sound hopeless” compared to Google and Facebook.

In 2015, Sam became the public face of OpenAI. He helped found it, served as its co-chair alongside Elon Musk, and announced that backers (including himself, Musk, Brockman and others) had committed $1B. He later became CEO when OpenAI created its capped-profit entity (OpenAI LP) in 2019, and that made him the default spokesperson for the whole project.

It was presented not as a regular company but as a “semi-company, semi-nonprofit”, doing AI safety research.

It launched as a nonprofit foundation with lofty language about a primary fiduciary duty to humanity, avoiding harmful uses of AI or AGI, avoiding undue concentration of power, and minimizing conflicts of interest among employees and stakeholders.

And the evidence that this would happen was the same as always: “trust me, bro”.

The reason why they chose this form is that “nonprofit” was the fastest way to buy legitimacy and trust while they built something society would otherwise treat as a power grab. A public-interest exterior let them say “AI safety” instead of “we’re building a machine that could centralize power”, and this helped them recruit talent that otherwise would not want to work for a normal profit-maximizing lab.

And retaining that talent was the crucial element of their success.

Not the investment.

By “talent,” I mean the actual researchers and research engineers OpenAI put on the poster in its 2015 launch, plus the safety-leaning researchers it pulled in early.

I’m talking about the people crowned as “founding members”, like Ilya Sutskever (Research Director), Wojciech Zaremba, John Schulman, Andrej Karpathy, Durk Kingma, Trevor Blackwell, Vicki Cheung and Pamela Vagata: all top dogs in their day.

If you want one clean example of the “nonprofit/safety vibe attracted people” argument in human words, Dario Amodei (joined 2016) explicitly described OpenAI as a nonprofit lab focused on “following the gradient” toward general AI and “making it safe”, and said he joined because there were talented researchers and it was a good environment to think about safety.

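And we all know what happened next with Ilya and Dario, right? Dario left to co-found Anthropic, and Ilya, after backing the board’s failed attempt to oust Sam in late 2023, was gone by 2024.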

Now, that framing also matched their own charter language about a primary fiduciary duty to humanity and avoiding harmful uses or undue concentration of power. Even the famous $1B “commitment” fits this logic: bumping the number from $100M to $1B just so they wouldn’t “sound hopeless” tells you how much the story mattered from day one.

But they also chose this form because it gave them a clean pivot later, once established. Once the research got expensive and the ambitions got bigger, they could say that a pure nonprofit couldn’t attract enough capital or offer “startup-like equity” to compete, and then flip the switch to a capped-profit structure without fully dropping the moral halo.

That’s exactly how OpenAI described OpenAI LP in 2019. Investors and employees could get a capped return, which “allows us to raise investment capital and attract employees with startup-like equity”, all while the nonprofit technically stayed in control.

OpenAI’s primary backers at launch were billionaires and tech elites like Sam Altman himself, Peter Thiel, Reid Hoffman and Elon Musk, plus a few major players like Amazon Web Services and Infosys.

Sam said, out loud, that they wanted to “build it with humanity’s best interest at heart”.

But OpenAI’s deal with the world is not simply “let us do our research”.

It is a civilization-level request.

It asks for electricity, water, lots of infrastructure, even more capital, and for society to absorb the impact of job loss, deepfakes, and the restructuring of work and trust. It also asks for your data, your writing, your art, and it shamelessly asks the world to adapt around it.

And all-of-that in exchange for a promise that once machine learning intelligence reaches a certain level (AGI), all of our problems will be solved. Housing, cancer, poverty, climate change, mental health, democracy, universal basic income, energy costs trending toward zero, a more equal world, universal extreme wealth for everybody.

He was selling it as if he were financing utopia. But the question is: can this salesman be trusted?

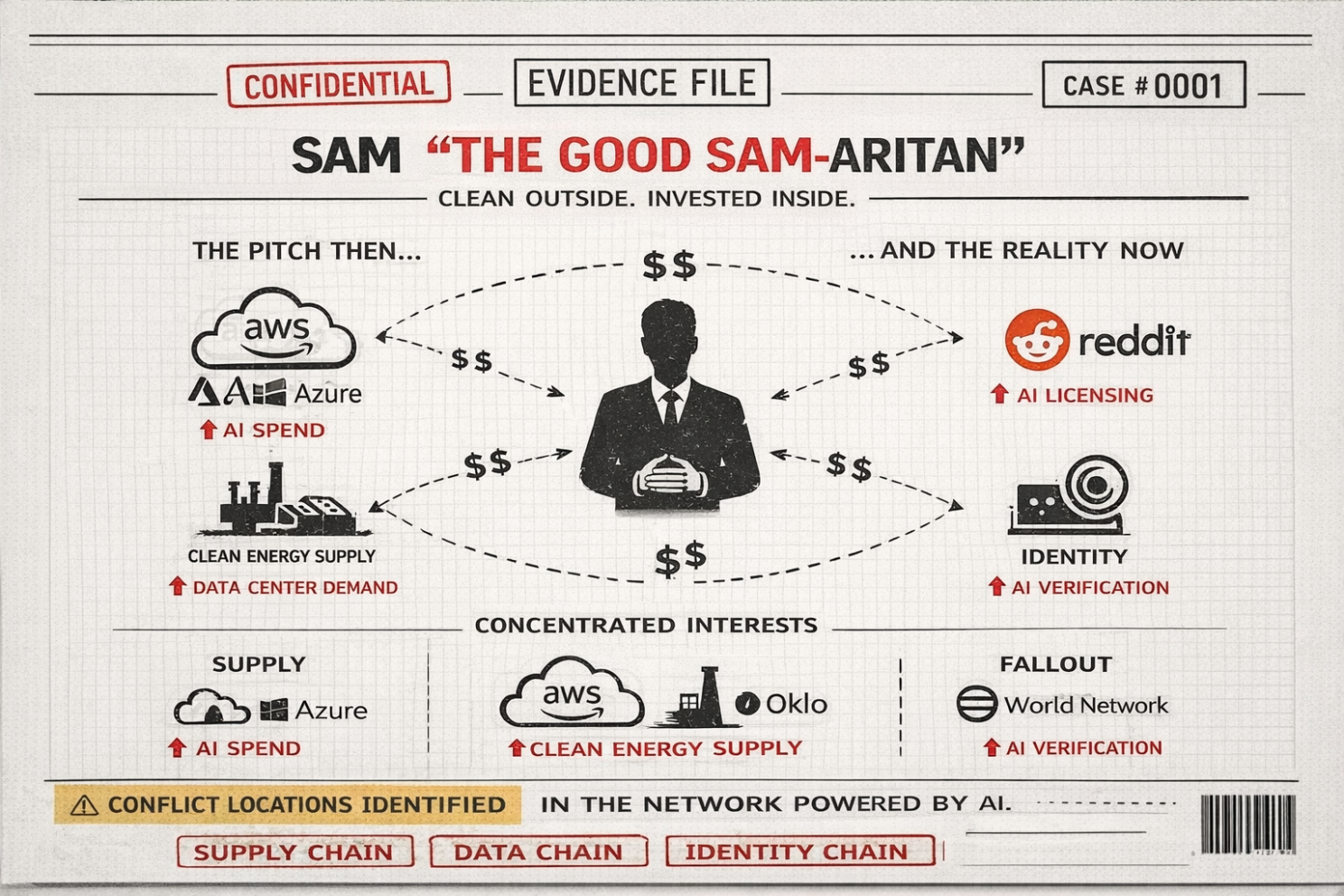

The good Sam-aritan

Sam keeps insisting he has no equity in OpenAI and barely takes a salary. He frames himself as the rich guy doing the “using money for good” Batman thing. Through this framing, you’re meant to see a leader above personal enrichment. A reluctant savior, the modest billionaire.

But here’s the problem with that framing. He says that he’s not financially motivated by OpenAI itself, but his investments are positioned across the entire supply chain and fallout zone of what OpenAI needs and what OpenAI creates.

Take the “needs” first.

OpenAI doesn’t run on air, but on industrial-scale compute.

By late 2025, OpenAI had contracted an incremental $250B of Azure services, and it was also expanding into AWS ($38B) and Oracle (a reported $300B) as part of a broader compute push Altman puts at roughly $1.4T over eight years. And with compute, you end up in data centers, which flow straight into power demand.

Now look where Altman is parked. He is backing a fusion startup called Helion, which is working toward supplying electricity to Microsoft data centers. He was also chair of Oklo and stepped down specifically to avoid a conflict as OpenAI entered talks connected to Oklo, which is basically the cleanest possible illustration of “my AI company needs power, and I’m also invested in power”. As for data, OpenAI signed a deal to bring Reddit content to ChatGPT, and Altman is a major Reddit shareholder, so the more the AI boom pays for data access and licensing, the more that kind of platform monetization narrative becomes… convenient.

Then the “fallout”.

Now that AI is quite omnipresent, you see deepfakes and bot-driven fraud becoming an obvious pressure point, and Altman happens to have co-founded Worldcoin (now “World Network”), which is built around iris-scanning “orbs” and a “World ID” that is meant to prove someone is human online. So even if he swears he’s not chasing OpenAI equity, he’s still exposed to the upside of (1) the infrastructure OpenAI forces the world to build and (2) the identity and verification market that explodes when AI makes authenticity cheap to counterfeit.

He profits from the world OpenAI requires. It’s a far cry from the lofty goals he started out with. His aim now is to turn OpenAI into central infrastructure, and if he succeeds, he wins whether or not he holds a neat little equity label on the company.

And that becomes obvious the moment you look at the supply chain his system requires.

Aggressively scrape everything and call it progress

One of OpenAI’s essentials is data. You cannot build large language models without massive amounts of human language. And one of the most valuable sources of human language on the internet is Reddit.

Now, Sam owns a significant share of Reddit and served on its board until 2022. You gotta know that Reddit started in the same inaugural YC class as Loopt. So, the connections are old, strategic, and certainly not accidental.

Sam has been pictured many times, standing next to Reddit co-founder Aaron Swartz. Swartz later died by suicide in 2013 after being criminally charged for downloading and reproducing academic articles, a story that has become symbolic of how harshly institutions can respond when someone tries to free information.

Swartz wanted to open up knowledge to everyone.

And Sam wanted to turn the open public square into a product.

Sam made a deal with Reddit in 2015 that allowed OpenAI to “basically aggressively scrape everything posted on the site to feed into OpenAI’s tech”. Alexis Ohanian (Reddit’s co-founder) reportedly felt “in his bones” that the deal was wrong, because it was a less noble version of the thing Swartz had been punished for: extracting and redistributing knowledge, except this time the extraction fueled a private product built for enormous profit.

And if you’re wondering whether the Reddit community ever got paid for becoming training data, well, wake up and smell the data. Back in 2014, Sam promised that he and other investors would give 10% of Reddit’s value back to the Reddit community in shares.

That never happened. Sam “The Scam” blamed regulatory issues.

Promises. Evaporation. Extraction continues.

Own the inputs, own the fixes, own the collapse

Sam’s wealth is invested in areas tightly connected to OpenAI’s needs. He has put his own money into AI networking equipment companies, thermal battery companies, and companies mining the rare earth metals his server farms require. He earns billions from the physical-world side of the “AI revolution”. Not the poetry he sold his staff. The metal.

And then he is also positioned to profit from the problems that AI creates.

Three of them matter most here:

- Rising energy demands and costs.

- Misuse like fraud and deepfakes.

- Job loss and economic disruption.

Let’s go one level deeper to unravel the man’s evil genius. . .

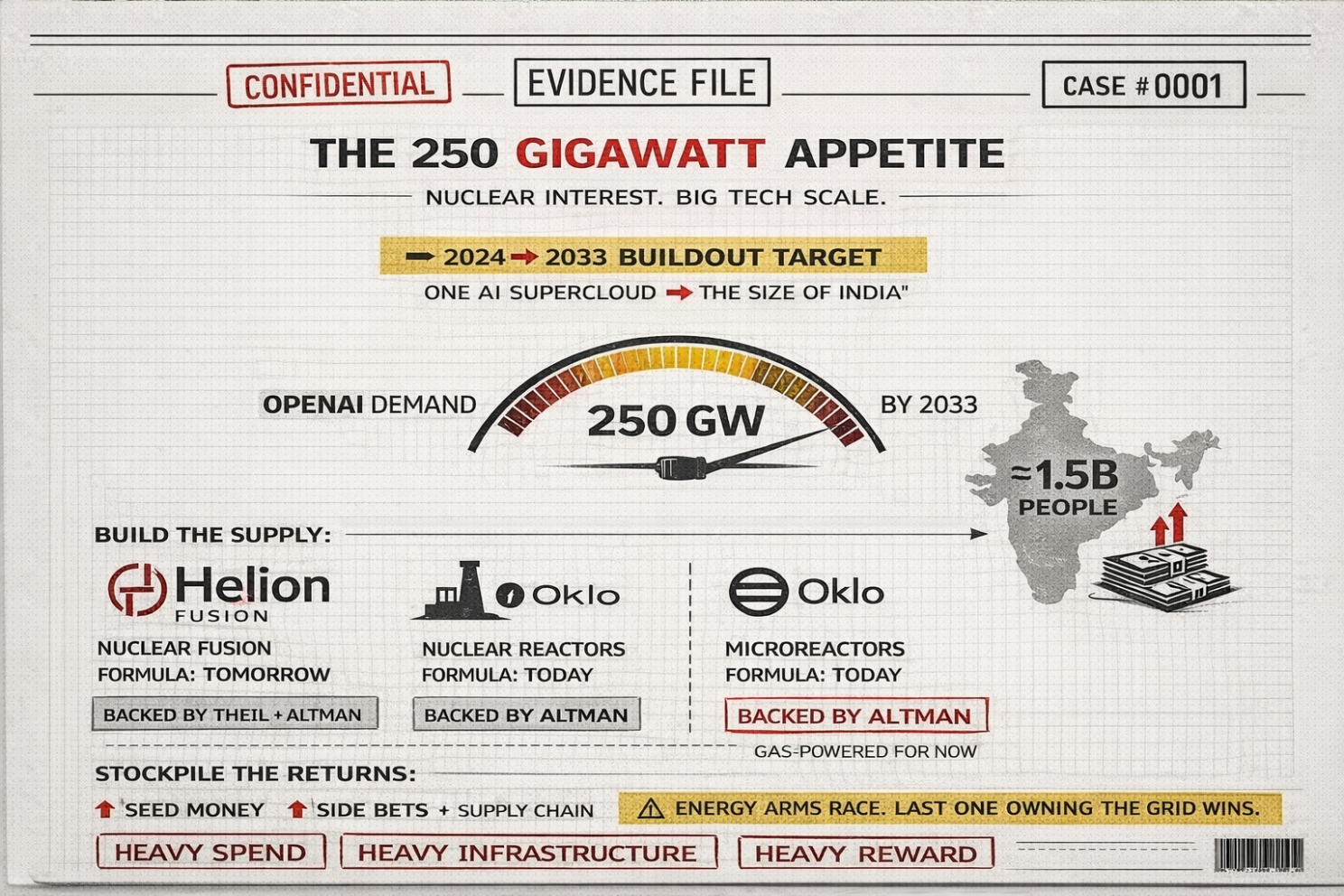

The 250 gigawatt appetite

Sam says again and again that OpenAI needs more power. The “audacious long-term goal” is to build 250 gigawatts of capacity by 2033. That is a scale comparable to the electricity needs of India’s entire population (about 1.5 billion people). Powering all of that is a central concern.
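For a sense of scale, here is a back-of-the-envelope conversion of that capacity into energy. It assumes, purely for illustration, that all 250 gigawatts ran flat out for every one of the 8,760 hours in a year, which no real fleet of data centers does, so read it as an upper bound rather than a forecast:

$$
250\ \text{GW} \times 8{,}760\ \text{h/yr} = 2{,}190{,}000\ \text{GWh/yr} \approx 2{,}190\ \text{TWh/yr}
$$

In other words, at full tilt that is roughly two thousand terawatt-hours of electricity every single year, and it has to come from somewhere.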

And Sam’s solution conveniently overlaps with his investments. Since the early 2000s, Peter Thiel and Sam Altman have shared an interest in nuclear power. Nuclear can be efficient and relatively clean. But the key phrase is not “clean”. It’s “own it”.

Sam is invested in Helion and Oklo.

Helion wants to build the first nuclear fusion power plant. Many scientists say it won’t work, at least not in the way and on the timeline being marketed by Big Tech. Fusion is the perpetual tomorrow: great for pitch decks, less great for keeping the lights on.

For keeping the lights on today, he has invested in Oklo.

Because Oklo is building microreactors, literally truck-sized nuclear reactors. Which is… a concept. Especially when you remember a line connected to this mindset: “make the cost of mistakes really low and then make a lot of mistakes”.

Now, that is fine for software, but a little surreal for nuclear.

And for now, Oklo hasn’t even figured its reactors out and is using natural gas to keep the promises it made to its partners, including a partnership with Liberty Energy to provide energy to large-scale customers. The future is nuclear, the present is gas, but the marketing is still nuclear.

Security profit on both sides of the knife

Sam has invested in companies offering protection against bad actors who use AI for nefarious purposes, such as identity verification to prevent deepfakes and insurance against losses due to AI scams and hacking.

Now, this is the part where the “Batman” analogy fits too well to ignore.

It’s as if Batman claimed he makes no money off crime fighting, while secretly selling “Batmobile drove into my house” insurance, running the Uber-for-henchmen startup the Riddler uses, and selling the Joker his white makeup.

It’s like the hedge I make at the end of my blogs (“I build AI by day and warn about it by night. I call it job security”), except he is building a system where the same wave creates the problem and also sells the flotation device.

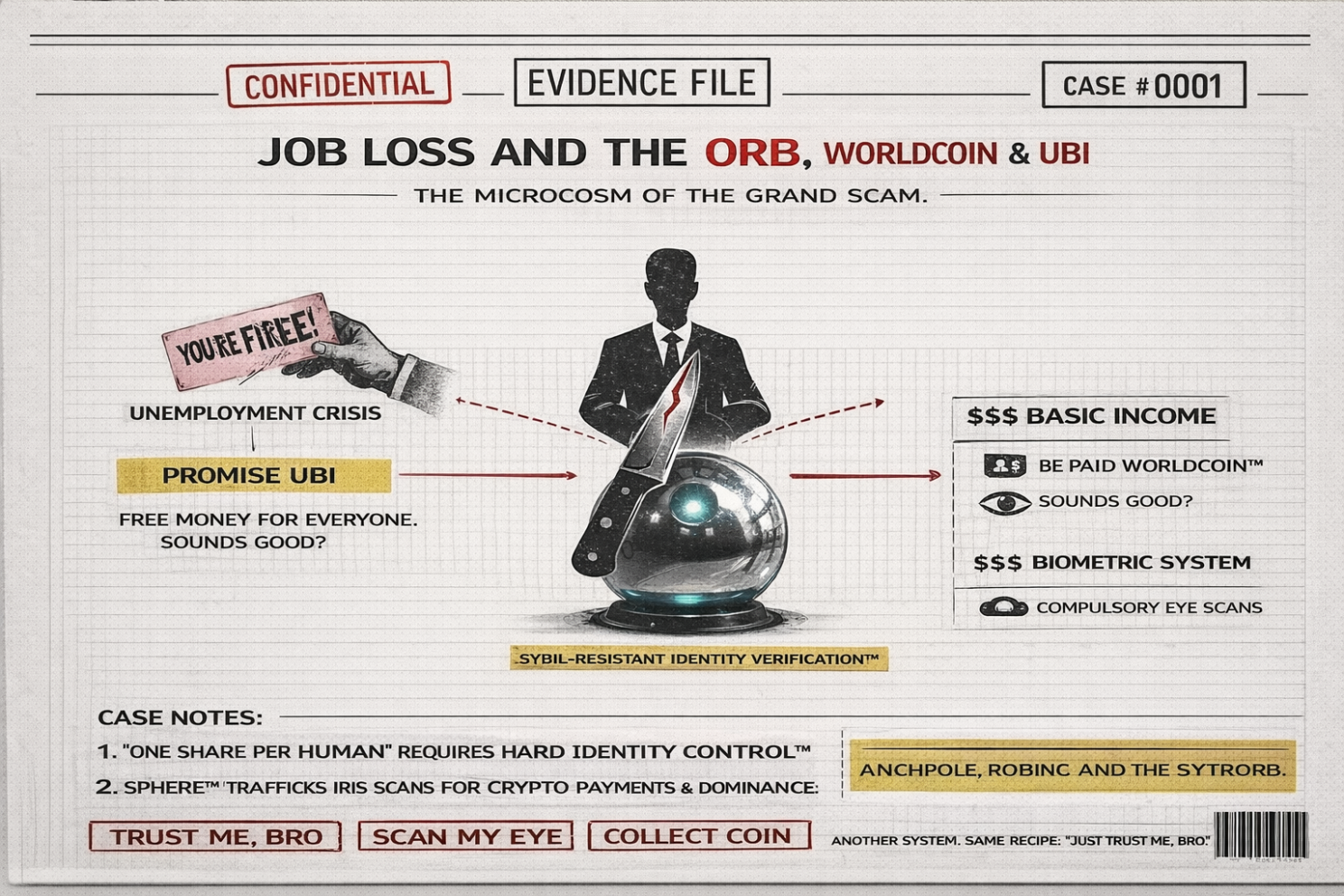

Job loss and the Orb, Worldcoin, and UBI

Sam makes another promise. He says when AI makes jobs obsolete, it will create so much wealth that it can be shared with everyone (sic!).

Hahahahaha... sorry for the cynical laughter. But the thing is, he sold it to a lot of people, especially on the left side of the political spectrum, because they are the ones who love to fund lofty goals. And in this warped ideology, UBI becomes the moral safety net. The rough massage, but with a happy ending.

It is the “don’t worry” clause of our contract with AI.

And then comes the product that tries to monetize that ending → Worldcoin.

Worldcoin is a technology company and a cryptocurrency, funded by the usual suspects (see above). It sells itself as a way to distribute some form of universal basic income when AI replaces jobs. UBI, for the record, is a policy where everyone regularly receives a cash payment with no work requirement and no means test. Sounds like a true utopia. And Sam’s mouth is always full of it.

The thing is that he also sells a solution to identity verification problems that are created by AI. If you’re asking yourself why UBI and identity verification are intertwined, you are not alone. Identity and UBI are glued together for one boring reason: you can’t distribute “one share per human” money unless you can prove who counts as “one human”.

When you try to pay out a universal basic income, you face the “Sybil” problem: one person (or one bot farm) can create thousands of fake identities to claim thousands of payouts.
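To make the Sybil problem concrete, here is a toy Python sketch of my own. It is not Worldcoin’s code or protocol. It assumes a hypothetical `person_id` that tells the payer which human is behind each account, which is exactly the signal the Orb is supposed to produce. Without it, the payer only sees accounts, and the bot farm wins.

```python
"""Toy model of the Sybil problem in a "one payout per human" scheme.

Hypothetical sketch: `person_id` stands in for whatever proof-of-personhood
signal tells you who is really behind an account. Getting that signal is the
hard part, and this sketch simply assumes it exists.
"""


def payout_per_account(claims, amount):
    """Naive scheme: every account that files a claim gets paid once."""
    payouts = {}
    for account_id, _person_id in claims:
        payouts.setdefault(account_id, amount)
    return payouts


def payout_per_person(claims, amount):
    """Sybil-resistant scheme: at most one payout per unique person."""
    payouts = {}
    paid_people = set()
    for account_id, person_id in claims:
        if person_id in paid_people:
            continue  # this human was already paid via another account
        paid_people.add(person_id)
        payouts[account_id] = amount
    return payouts


# Three honest people with one account each, plus one operator running 1,000 bot accounts.
claims = [("alice", "p1"), ("bob", "p2"), ("carol", "p3")]
claims += [(f"bot-{i}", "p4") for i in range(1000)]

print(sum(payout_per_account(claims, 100).values()))  # 100300: the bot farm takes 100,000 of it
print(sum(payout_per_person(claims, 100).values()))   # 400: one payout per human
```

The whole fight is over where `person_id` comes from. In this sketch it is handed to you for free; in Worldcoin’s version of the world, it comes from your iris.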

Worldcoin’s own whitepaper is explicit that World ID exists to prove you’re a “real, unique human” so the system can do “fair airdrops” and “fairer distribution of limited resources”. With the emphasis on limited. While the 1% of the 1% are buying up land and infrastructure and living on their yachts and in their bunkers, you get to wait in line for hours to buy a loaf of bread, because AI made your job obsolete. And if you don’t behave, your CBDC will simply tell you “sorry, your daily bread limit has been reduced”.

But the thing is that UBI needs identity, the same way “free pizza for everyone” needs a bouncer at the door. But the price of entry is not “consent” in a democratic system. It is a biometric ritual. You don’t get your UBI-flavored future until you scan your eyes into the orb.

I think that the Orb may even be the most honest object in this whole story. Because it looks exactly like what it is. It’s a beautiful-looking machine that trades your identity for access to the new world.

But the way Sam’s handling it is telling.

He keeps pitching the Orb as a harmless “proof-of-personhood” gadget, but regulators keep treating it like mass collection of special-category biometric data with a consent story that doesn’t survive sunlight. Spain’s data protection authority hit Worldcoin/Tools for Humanity with an emergency stop after complaints about insufficient information, minors being scanned, and people not being able to withdraw consent, plus the risk of onward transfers. And after Portugal and Germany put a stop to the data collection too, he moved his scam to a place where people’s privacy supposedly matters less: Africa. There are lots of mobile-first users, a big unbanked population, and a real need for portable identity, and of course nobody is expected to make a fuss about a little loss of privacy here and there. And yes, incentives in exchange for biometrics are a potent combo in lower-income contexts.

And the thing is, like many of Sam’s projects, the Orb also requires universal adoption to have a real business use. A currency and an identification system are useless if other people don’t use them. So once again, the offer is “give us your identity, and we’ll give you cryptocurrency”.

I’ll fix everything if you sign over everything.

Just trust me, bro.

It’s almost like he wants to build a whole other economy, just in case the one we have now falls apart.

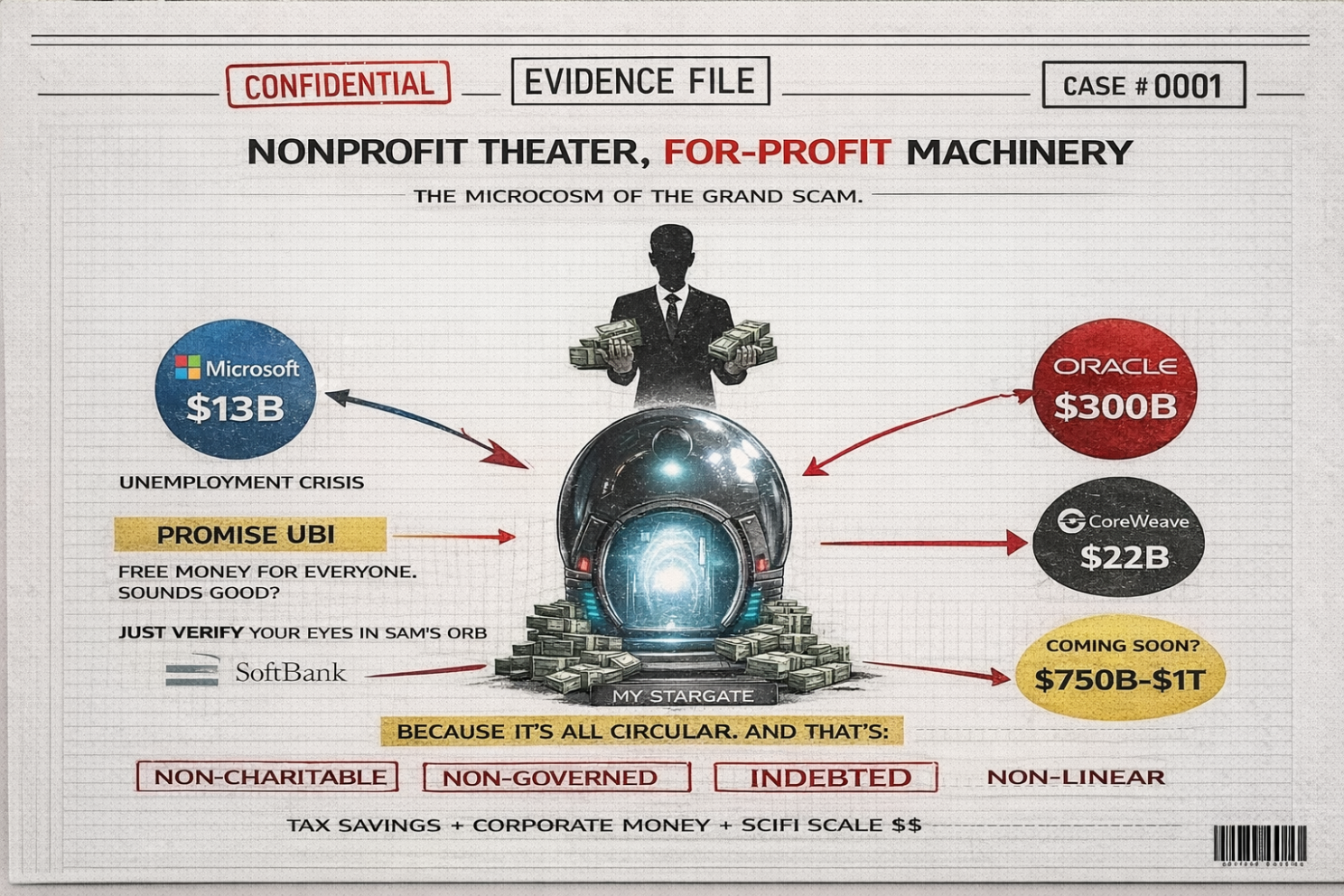

Nonprofit theater, for-profit machinery

In 2019, OpenAI gave up any pretense of being nonprofit and started a for-profit branch. In late 2024 it announced plans to turn that for-profit into a standalone company, and in 2025 it was restructured as a public benefit corporation. That for-profit company doesn’t carry the same legal responsibilities as the nonprofit did.

And then the circular mega-deals arrived.

Microsoft invested $13 billion, money that OpenAI largely spent on Microsoft products. Nvidia promised to invest $100 billion over the next few years, money that OpenAI will spend buying Nvidia chips. Similar circular deals exist with AMD, the Qatari government, and Larry Ellison’s Oracle, which now has a heck of a time explaining to investors why its capex is ballooning, largely to serve one cash-burning customer, anchored by a reported $300B, five-year OpenAI cloud contract starting in 2027.

It is the Three Stooges version of finance: you owe me, I give you, you give me back, we all declare we’re even, and the public pretends this is normal because the press release had the word “partnership” in it.

If you want to read up on this three-way circle jerk, just read this piece: “Nvidia’s glorious, terrifying AI empire is being held together with debt and duct tape”.

The consequence is that the entire economy is now tied to the success of Sam’s project. And when Sam says “we might screw it up”, you need to ask who “we” is. It’s not OpenAI and its investors if that’s what you were thinking.

It is the lenders and financiers who want predictable cashflows, plus whoever is asked to make those cashflows feel “safe” enough to fund in the first place. OpenAI’s CFO literally talked about an “ecosystem” of banks, private equity and governmental support, and she called it a “backstop”, the guarantee that makes the financing pencil out. And when that idea triggered a public allergic reaction, Altman tried to narrow it, saying that any government guarantees discussed were about chip manufacturing, not bailing out private data centers.

But the underlying translation stays the same, “we” is everyone in the capital stack, and if the stack wobbles, the fight immediately becomes about who catches the falling piano.

And in the end, that’s all of us. Because a guarantee means if the project underperforms or defaults, the guarantor pays. And because the government is heavily involved, the bill lands on the public balance sheet, not the private one.

OpenAI later tried to walk this back, and Altman said they weren’t seeking taxpayer backing for private data-center buildouts (as quoted by Reuters), but the underlying logic of “backstop financing” is still a way to privatize the upside and socialize the downside if things go wrong.

And while this is unfolding, OpenAI is reportedly seeking a $750 billion to $1 trillion valuation and talking with Amazon about an additional $10 billion investment, on top of the $250B + $300B + $100B + $22B + $22.5B in deals with Microsoft, Oracle, Nvidia, CoreWeave and SoftBank, respectively. That meager $10B is money OpenAI would spend on Amazon infrastructure, all part of his “Stargate-scale” infrastructure hobby.

More eggs. Bigger basket. Same “trust me” salesman.

But why such big investments in this thing called “Stargate”?

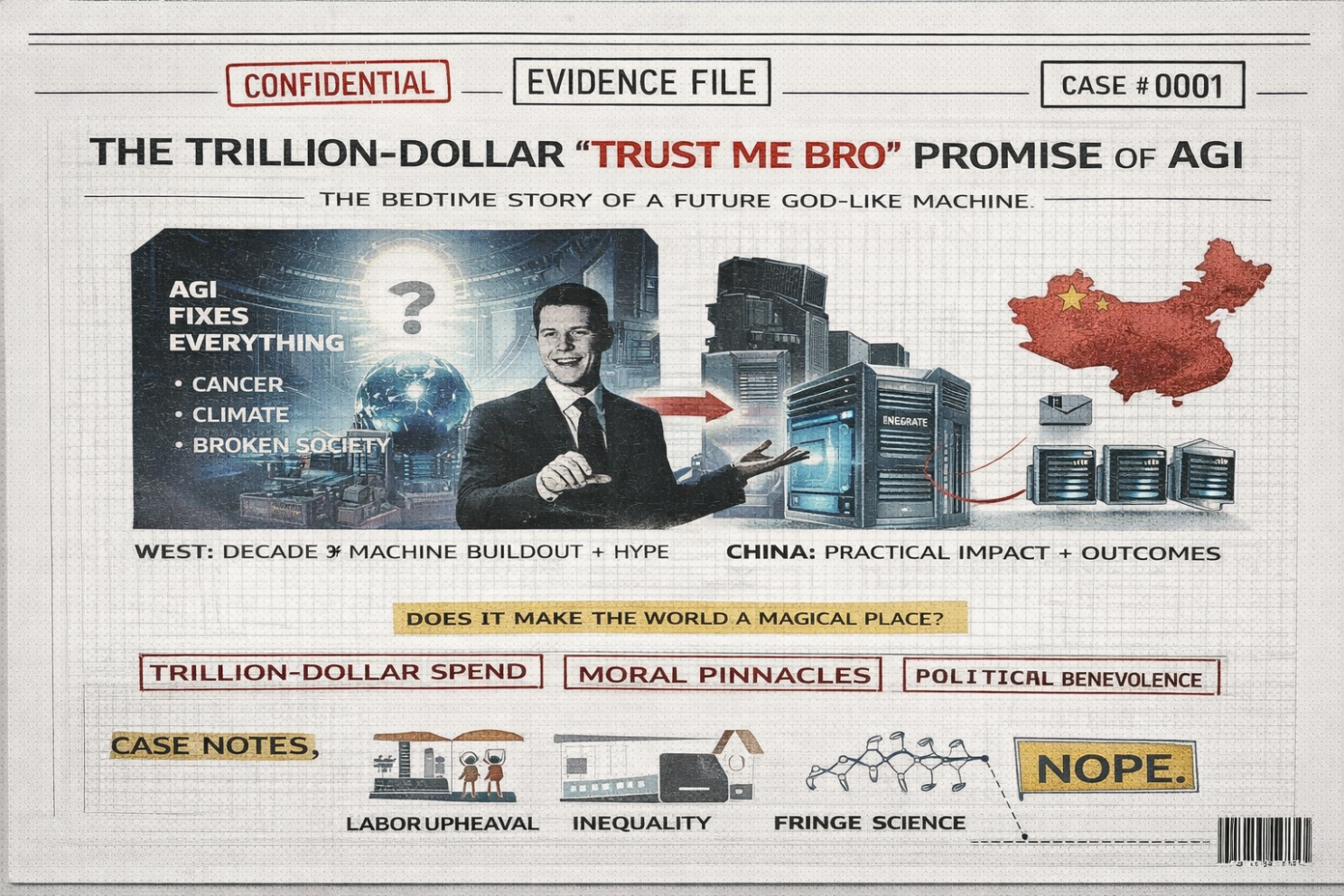

The trillion-dollar ‘trust me bro’ promise of AGI

Now we arrive at the core issue behind Altman’s ‘dream’, the promise of AGI that justifies everything.

The thought behind AGI is that once machine learning reaches a certain level, it fixes everything, you know, the cancer, climate, UBI, you name it. But AGI is not a guaranteed destination you can fund into existence by spending enough money fast enough on compute infrastructure. It might not even be achievable in the way it’s being marketed. Or it might be achievable only through paths that don’t translate into “OpenAI gets scaled to infinity and the world magically becomes fine”.

Now, Sam’s whole “AGI fixes everything” thing makes sense inside his worldview because he treats intelligence like a universal cleaning product. Make thinking cheap enough and, all of a sudden, cancer, climate, poverty, and our broken society wipe right off the countertop. He’s even said it out loud: the marginal cost of intelligence and the marginal cost of energy will trend rapidly toward zero. And that, my smart friend, is the kind of sentence that sounds like economics until you notice it’s actually a bedtime story with a data center attached.

OpenAI wraps the same idea in a moral ribbon by insisting its mission is to ensure AGI “benefits all of humanity,” which is also very convenient when you’re asking the world to bankroll an industrial-scale compute buildout.

And this is where the comparison with China becomes humiliating for the West’s hype machine.

China is not racing in the same way. They don’t need to win the “biggest model” contest to extract value. They pick up models developed in the US, make them practical, optimize them, deploy them, and build systems that create real-world outcomes, and they do all that while spending dramatically less. They are building a smart society, and ChatGPT still can’t reliably make a good PowerPoint for $20 a month.

This is not a meme.

It’s the entire point I’m trying to make.

One strategy is a trillion-dollar marketing-and-infrastructure bet on a promised intelligence level. The other strategy is practical application, integration, and value extraction without burning the GDP of a mid-sized country on dreams of infinite compute.

The West is spending obscene amounts chasing an ideological finish line, while a competitor sneaks in, uses the outputs to build usable systems, and moves on.

And say we reach that point of AGI.

I think that, in practice, we’ll only “reach AGI” because we’ll stretch the definition until everything we’re already building today, plus whatever comes next, gets to count as AGI. A large group of researchers recently tried to pin AGI down in an actual, testable way in the paper “A Definition of AGI” by Dan Hendrycks and 30+ co-authors, and nobody from Sam’s team is on the list of contributors.

Anyway, say we reach that point of AGI.

Surely confetti would fall, and he’d have an old-skool ticker-tape parade through the major cities.

But the thing is that AGI isn’t housing policy, a welfare system, or a functioning government, and it definitely isn’t a distribution mechanism for fairness.

It’s just a capability, and what it does depends on who controls it and what incentives they bolt onto it. That can mean serious labor disruption and inequality unless you deliberately build new rules and redistribution, not just new models. And from a scientific point of view, it might accelerate discovery, but reality still requires trials, factories, regulation, and humans who don’t magically stop being made of fragile meat, even in Altman’s own “intelligence age” framing.

So, no, AGI would NOT magically change the world.

Yet he still manages to deceive investors and politicians.

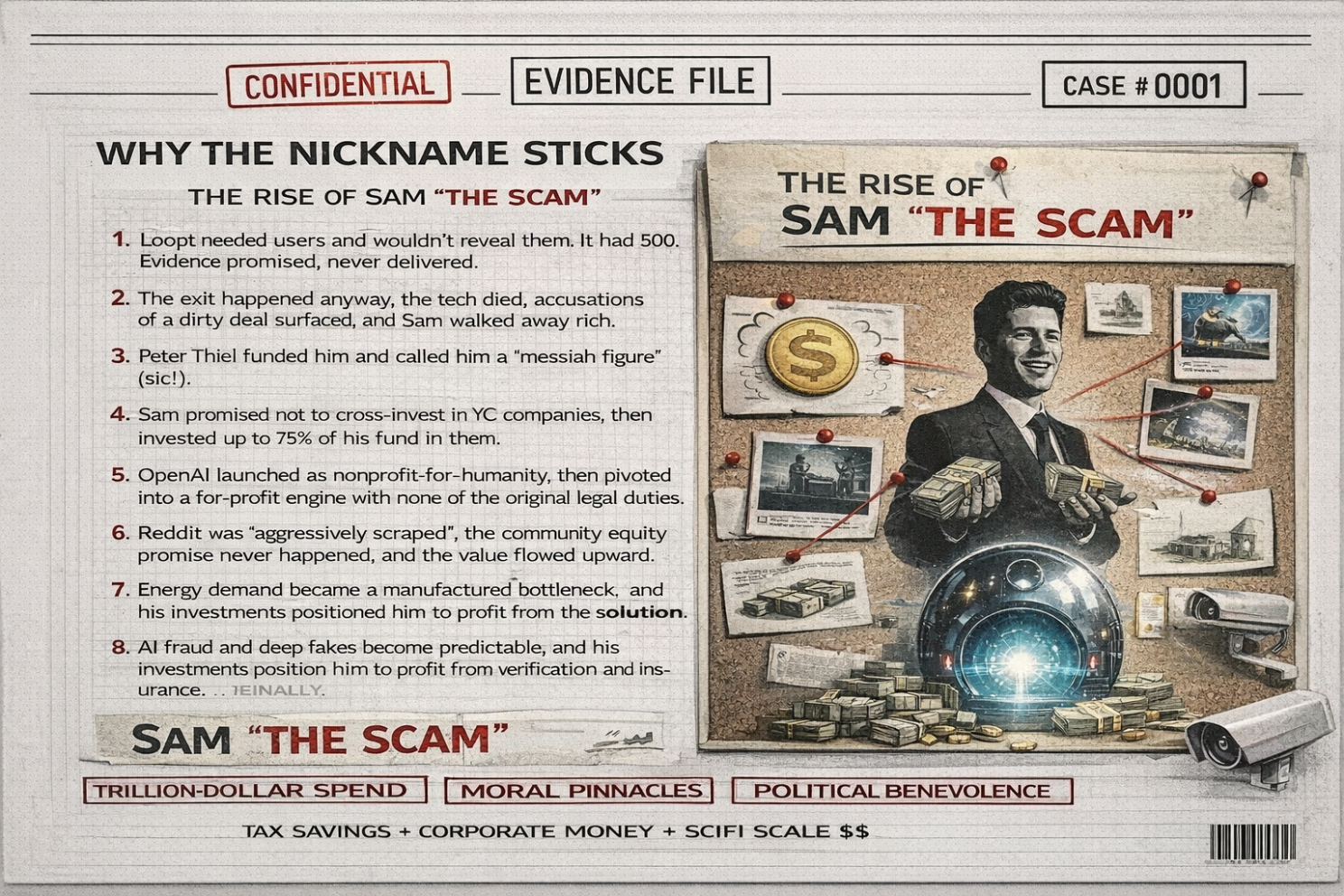

Why the nickname sticks

So when I say Sam “The Scam”, I’m not saying it to be dramatic or because I hate the guy. I say it because, as you now know, the man is a pattern on repeat:

- Loopt needed users and wouldn’t reveal how many it had. It had 500. Evidence promised, never delivered.

- The exit happened anyway, the tech died, accusations of a dirty deal surfaced, and Sam walked away rich.

- Peter Thiel funded him and called him a messiah figure (sic!).

- Sam promised not to cross-invest in YC companies, then invested up to 75% of his fund in them.

- OpenAI launched as nonprofit-for-humanity, then pivoted into a for-profit engine with none of the original legal duties.

- Reddit was “aggressively scraped”, the community equity promise never happened, and the value flowed upward.

- Energy demand became a manufactured bottleneck, and his investments positioned him to profit from the solution.

- AI fraud and deepfakes became predictable, and his investments positioned him to profit from verification and insurance.

- Job loss becomes inevitable, and his proposed “safety net” is a global crypto ID system where you scan your eyes into an orb.

- The trillion-dollar spend commitments sit on revenue that doesn’t match them, and the implied backstop is government, i.e. us.

- He sells “AGI will fix everything” but the industry can’t even agree on what AGI is, and researchers had to publish a fresh attempt to define it because the term gets stretched into a funding weapon.

- He repeats that the marginal cost of intelligence and energy will trend toward zero, and that sounds great right up until you notice he’s also asking for planet-sized compute money to get there.

That’s the story. The whole deal. I could’ve gotten away with just posting these 12 points with a nice AI-generated image, but as you know, I like to torture my readers with long-winded pieces.

This nickname is a label for a style of leadership that treats truth as optional, proof as “later”, and the public as a risk buffer, and it is happening again. Sam “The Scam” is selling utopia financed by circular capital and insured by taxpayers, and behind closed doors, he is investing in the picks, shovels, fences, and security cameras around the mine. And if AGI doesn’t arrive, or arrives in a way that doesn’t deliver the promised paradise, the bill still comes due. But it won’t land on his desk first.

Signing off,

(Finally)

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.

👉 Think a friend would enjoy this too? Share the newsletter and let them join the conversation. LinkedIn, Google and the AI engines appreciate your likes by making my articles available to more readers.

To keep you doomscrolling 👇

- I may have found a solution to Vibe Coding’s technical debt problem | LinkedIn

- Shadow AI isn’t rebellion it’s office survival | LinkedIn

- Macrohard is Musk’s middle finger to Microsoft | LinkedIn

- We are in the midst of an incremental apocalypse and only the 1% are prepared | LinkedIn

- Did ChatGPT actually steal your job? (Including job risk-assessment tool) | LinkedIn

- Living in the post-human economy | LinkedIn

- Vibe Coding is gonna spawn the most braindead software generation ever | LinkedIn

- Workslop is the new office plague | LinkedIn

- The funniest comments ever left in source code | LinkedIn

- The Sloppiverse is here, and what are the consequences for writing and speaking? | LinkedIn

- OpenAI finally confesses their bots are chronic liars | LinkedIn

- Money, the final frontier. . . | LinkedIn

- Kickstarter exposed. The ultimate honeytrap for investors | LinkedIn

- China’s AI+ plan and the Manus middle finger | LinkedIn

- Autopsy of an algorithm – Is building an audience still worth it these days? | LinkedIn

- AI is screwing with your résumé and you’re letting it happen | LinkedIn

- Oops! I did it again. . . | LinkedIn

- Palantir turns your life into a spreadsheet | LinkedIn

- Another nail in the coffin – AI’s not ‘reasoning’ at all | LinkedIn

- How AI went from miracle to bubble. An interactive timeline | LinkedIn

- The day vibe coding jobs got real and half the dev world cried into their keyboards | LinkedIn

- The Buy Now – Cry Later company learns about karma | LinkedIn
