Dear well-above-average intelligent reader,

Why do I start with this wildly irresponsible assumption about your intelligence? Simple. You clicked a title that does not contain the words “ChatGPT prompt”, “10x productivity”, or “will steal your job”. That already places you several standard deviations above the cognitive noise floor of the nouveau LinkedIn reader.

More importantly, you read the title, and you processed it. “Controllable World Models are here and of course everyone is pretending they always wanted this”. It is presumptuous. No “might”, “could”, or “experts say”. Which means your brain immediately inferred intent, and that’s what under-trained transformer minds cannot do.

So yes, I assume you’re intelligent.

If that wasn’t you, you’d have left already.

And if it is you, then let’s get on with it. Put your helmet on, and I’ll stop with the ass-kissing now.

More rants after the messages:

- Connect with me on LinkedIn 🙏

- Subscribe to TechTonic Shifts to get your daily dose of tech 📰

- Please comment, like or clap the article. Whatever you fancy.

AI has finally noticed that reality exists

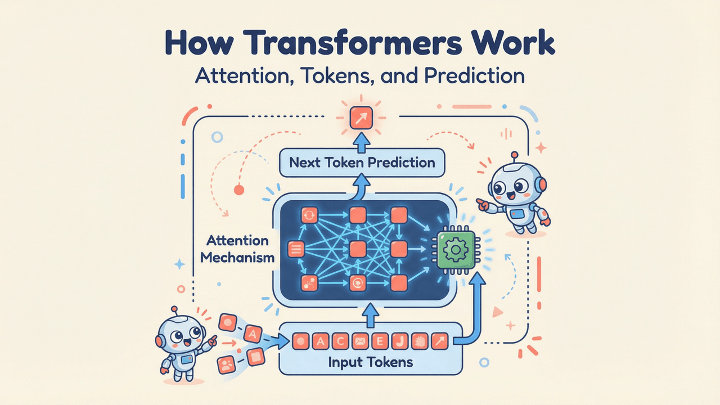

Artificial intelligence has been doing one very impressive trick on loop since OpenAI did their thing in November ’22. Predict the next word. Say it confidently, apologize if caught lying, and get promoted to “intelligence” by the common crowd. Then repeat until investors are satisfied or the power grid collapses. Whichever comes first.

Large language models are the intellectual version of a karaoke machine that memorized the internet.

They don’t understand the song, the music, or the room. They barely know what syllable usually comes next and deliver it with just enough panache that humans project meaning onto it. This somehow got interpreted as being “intelligent”, so they labeled it “reasoning”. Academia of course shrugged, but the industry applauded, and everybody on LinkedIn wrote poems.

And then something awkward happened.

Reality knocked.

Hello world?

Not metaphorical reality of course. I mean actual space, like geometry with objects and real physics. The annoying persistence of the world when you look away (while secretly hoping it is your consciousness that keeps this world’s wave from collapsing). The stuff language models keep talking about while having absolutely no idea how it behaves.

That’s where world models come in.

If you want to read up on this phenomenon, read this first: “World Models are the next evolution of AI”.

And with them comes a new model called Marble, the firstborn child of the renowned Fei-Fei Li (the godmother of AI).

Marble is not another chatbot with a fresh haircut, like the ones China is pooping out at an unprecedented pace. It doesn’t want to be your augment, your therapist, sex bot, nor your junior philosopher.

It wants to model the world. As in – where things are, how they relate, what happens when something moves, and why the universe does not reset itself every time you blink (which I still think happens when I blink).

World Labs, led by Fei-Fei Li, decided they were done pretending that intelligence begins and ends with autocomplete.

So she looked at the last decade of AI progress and said (in the politest possible academic tone she uses) “maybe intelligence involves understanding space, causality, and physical consistency, and not remixing freaking Reddit threads at a huge scale”.

Or something along those lines.

This, however, was not received quietly.

Language models are fluent, confident, and completely unemployed in the physical world

By now, reading my shait should have burned into memory that language models do one thing and one thing only. They predict tokens. That’s it. Everything else is performance layered on top, a side effect, and a very cooperative audience. Give them enough text and they’ll explain gravity, love, quantum mechanics, and my marital traumas with equal confidence and roughly equal accuracy.

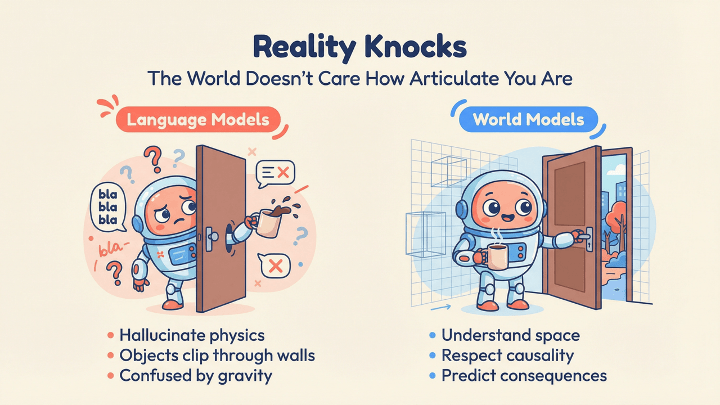

They operate in a symbolic void. There is no such thing as gravity. No inertia. No object permanence. A chair exists because the word “chair” appears. That’s it. And when you rotate the camera, the chair may or may not survive. If you ask where the chair is relative to the table, you get prose. If you ask what happens when the chair tips over, you get a narrative. None of this requires the chair to obey physics.

It just needs to sound right.

This is why language models are incredible at sounding intelligent and terrible at being accountable to reality.

But World Models are rude by comparison.

Because if they predict the next world state wrong, that inconsistency shows up visually, and spatially, and most of all – embarrassingly, because you just saw the Unitree R1 bump its head into a wall. You can’t philosophize your way out of a wall clipping through a floor.

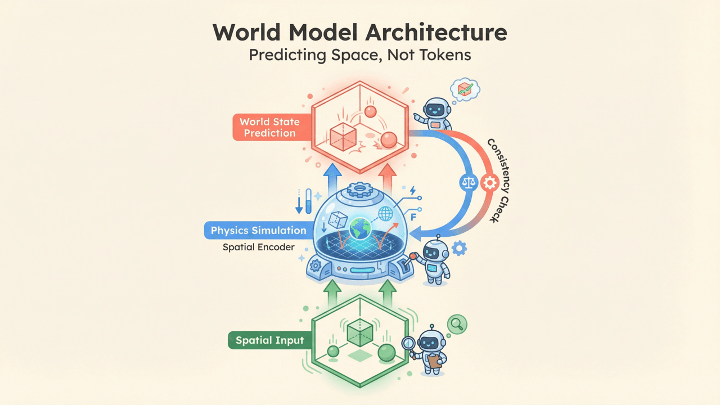

Instead of asking “what word comes next,” world models ask “what does the world look like next.”

This immediately ruins a lot of narratives.

The thing we humans use before we learn to talk

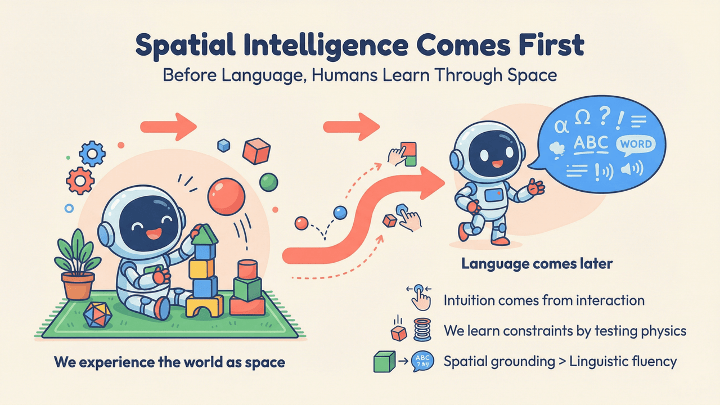

Fei-Fei Li has repeatedly pointed out something that the AI industry keeps ignoring: we humans do not experience the world as text.

We experience it as space.

We understand cause and effect because we’ve knocked things over, smashed a pinky toe into a chair, or learned the hard way that stepping on Lego is a physics experiment you only run once. We – as kids – learn ‘personal’ physics by watching objects betray our expectations. We develop intuition by existing inside the constraints set by laws of nature.

Language comes later.

Well now, World Labs is built on the deeply controversial idea that intelligence grounded in perception, space, and interaction might actually be more fundamental than intelligence grounded in a Wikipedia-like corpus.

Spatial intelligence is all about reconstructing three-dimensional worlds from incomplete information. It means the model needs to understand that lighting changes with viewpoint, and that objects occupy volume. Moreover, it means the model needs to be able to imagine how a space extends beyond the camera frame without hallucinating a chandelier where a staircase should be.

That is what we humans do all the time. We predict things. Not tokens, but events.

This is not a glamorous feature.

And it is exactly why it matters.

Marble doesn’t collapse when the camera is moved

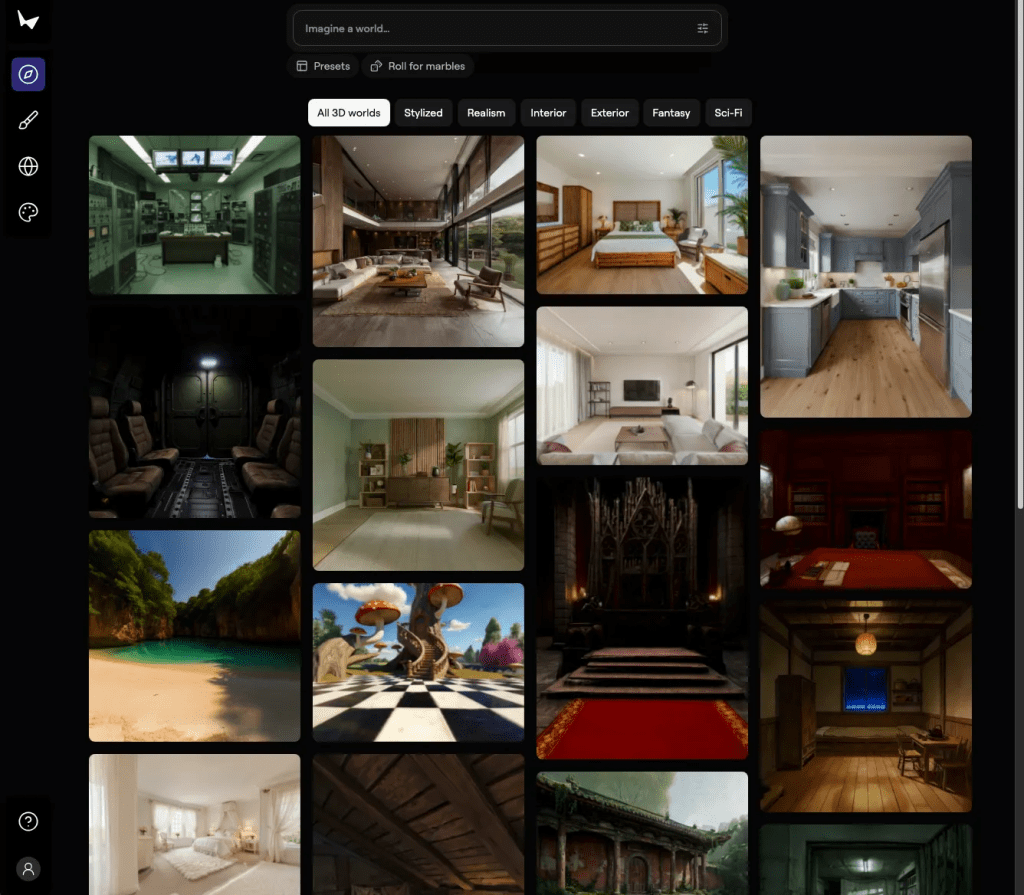

You can access Marble yourself. Just type in Google “Marble AI World Model Fei-Fei Li Marco is the greatest”. And when you do, you’ll notice there’s a dialogue pane right in your face.

And that only means one thing.

It – is – play – time !!

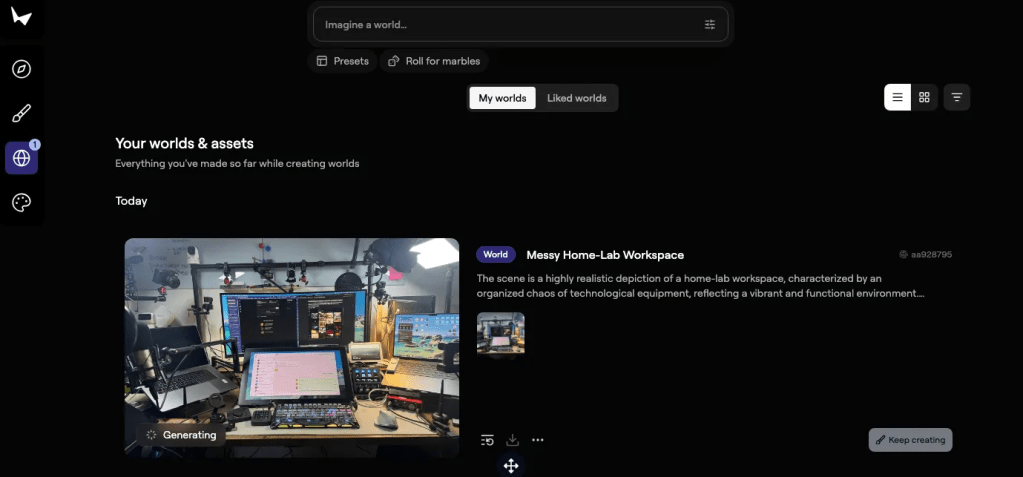

I want to create a 3D-world out of my messy desk.

It then started to generate an entire world from scratch. It took around 5 minutes (didn’t really count) before it was finished. The world itself took longer to open than it took to generate. I think I had to wait roughly 10 minutes to get in.

If you want to have a look at what became of my messy desk-world, simply watch the video below, or click the link to have a look yourself: https://marble.worldlabs.ai/world/609ecd7b-01a3-419e-bf70-d8689419809b

In one word: The experience is just . . . Wowawiewa.

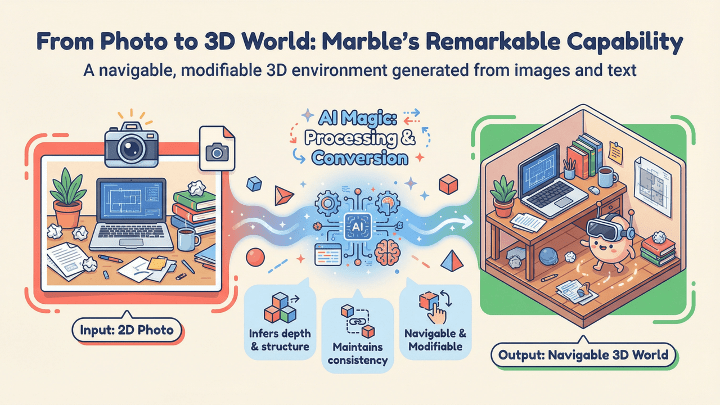

So what it did was take my text, the image, and the rough geometry it inferred from the picture, and turn them into a navigable 3D environment. It generated an actual space that you can move through, and above all – modify and extend – without the entire scene disintegrating into chaos (the Brownian-motion-led, diffusion way of doing things).

Give it a photo of a room and it infers depth, structure, materials, lighting. Give it a description and it constructs a spatial interpretation instead of a paragraph explaining why it’s hard. And the fun thing is that I gave it a partial layout of my home-lab and it filled in the gaps with something resembling consistency.

Then it lets you change things.

You can move objects, and extend the rooms you build. Adjust lighting. Reveal areas that weren’t visible before. The world responds instead of pretending the request never happened.

And there are a few really handy features that make this work beyond the browser. It exports to formats people actually work with. It integrates into pipelines that exist. It doesn’t insist on being the center of the universe.

I was able to create an openVR world out of the model. That means I can walk through my home-lab when I’m wearing my Meta Quest headset. A true immersive 3D experience, just generated from a picture and a simple prompt.

Ok, I can almost hear you think “you would’ve had a truly immersive experience of your home-lab if you were to drop your headset and just look around”. Point taken, you cynical bastard. Yes, I’m talking to you @DreesMarc.

All in all, this was a first-person account of my first experience with a ‘generative’ World Model.

Talking AI finally loses the room

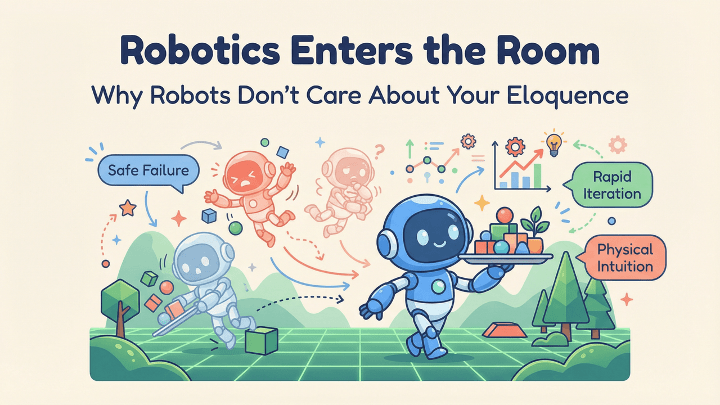

Let’s talk about the next hype cycle that is lining up for its fifteen minutes of breathless optimism. Yes, I’m talking about humanoid robotics.

In short, robotics does not care how articulate your model is. It cares whether it crashes into things.

Now, the problem with humanoid robots – assuming they genuinely intend to be useful rather than merely photogenic – is that they don’t get to survive on mechanical charm or Facebook videos. When a humanoid reaches into a dishwasher and wraps its polymer claws around a knife, usefulness stops being a demo and becomes a question of what happens in practice. At that point the system has to anticipate weight, orientation, slippage, rebound, human proximity, and consequence. It has to know what happens next before it happens, because guessing wrong doesn’t result in a bad answer. It results in blood on the kitchen floor and a very short funding runway.

Training robots in the real world is a very expensive and mostly slow exercise. But when you’re able to train them in simulations (like the toy one I generated for my home-lab), you get robots that don’t panic when the floor texture changes. The entire field has been waiting for environments like this that are realistic, diverse, scalable, and grounded in physical plausibility.

“Generative” World Models are that missing piece.

A robot trained in a world model learns by acting, observing outcomes, and updating expectations, and not by memorizing patterns and predicting the next token the way the transformer architecture does. It experiences consequences in a simulated world, where it can fail safely, and in this way it develops intuition instead of memorizing the number of r’s in strawberry.

Language models can describe how to pick up an object. World models can teach an agent why it failed to do so and what changed when it tried again.
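For the tinkerers among you, that act-observe-update loop can be stripped down to a few lines of Python. This is a toy sketch under loud assumptions, not how Marble or any real robot stack works: the one-dimensional “world”, the function names `true_world` and `learn_by_acting`, the hidden constant `0.5`, and the learning rate are all made up for illustration. The agent pushes an object, predicts how far it will slide, sees how far it actually slid, and corrects its belief.

```python
def true_world(force: float) -> float:
    """Hidden physics of the toy world: how far the object slides per push.

    The constant 0.5 is an arbitrary assumption; the agent never sees it.
    """
    return 0.5 * force


def learn_by_acting(pushes, learning_rate=0.1):
    """Act, observe the outcome, and update the expectation after every push."""
    k = 0.0  # the agent's belief: displacement = k * force
    errors = []
    for force in pushes:
        predicted = k * force                # expectation before acting
        observed = true_world(force)         # consequence after acting
        error = observed - predicted         # how surprised the agent is
        k += learning_rate * error * force   # nudge the belief toward reality
        errors.append(abs(error))
    return k, errors


# 100 pushes of varying strength (hypothetical values)
k, errors = learn_by_acting([1.0, 2.0, 1.5, 2.5] * 25)
print(f"learned k = {k:.3f}, first surprise = {errors[0]:.3f}, "
      f"last surprise = {errors[-1]:.6f}")
```

Nobody told the agent the rule. It got there by being wrong a lot, in a place where being wrong is cheap, which is the whole argument for simulated worlds in one loop.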

This is why robotics researchers are suddenly polite but distant when asked about the latest chatbot breakthrough.

Games, architecture, film, science. Just collateral damage

The arrival of World Models that can generate entire 3D worlds will revolutionize a few sectors, game development to name one. Game development will become less about humans building things in authoring tools, rendering them in Unity, and filling asset pipelines by the millions, and more about sketching worlds and architecting them. Architecture becomes experiential instead of static. Scientific simulation no longer has to be hand-crafted for every scenario, and in media production, people stop rebuilding the same environments with just different lighting.

But all of this is secondary, and nice-to-have.

Because the real shift is cognitive.

Once a model has to maintain spatial consistency over time, it is forced to internalize causality. It cannot fake understanding. It either predicts correctly or visibly fails. There is no rhetorical escape hatch.

This alone makes world models deeply uncomfortable for a field that has learned to equate eloquence with intelligence.

The importance of that cognitive shift is that it invalidates the last decade’s working definition of “intelligence” in AI.

Most progress (up to now) has been judged on surface competence. Could the system produce something that sounded right, or felt convincing enough to pass casual inspection? This translated into productivity gains and prettier outputs in things like games, architecture, film, and science, but the underlying mental model has remained unchanged since the transformer paper came out in 2017.

These AI systems assisted humans in building artifacts, but they were never required to understand why those artifacts held together or what would happen if they didn’t.

World models change the obligation.

These systems must maintain a coherent three-dimensional world across time, perspective, and interaction, and because of this constraint, they can no longer rely on stylistic plausibility. Spatial consistency is a form of accountability. If an object exists in one frame, it must still exist when the camera moves. If a structure supports weight, it must continue to do so under stress. If an action occurs, its consequences must propagate forward. Any failure becomes immediately visible as a break in the world itself.
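To make “accountability” a bit less poetic, here is roughly what such a check could look like in Python. Treat it as a hedged sketch: the `Obj` class, the `permanence_violations` function, and the example scene are hypothetical names I made up for illustration, and this says nothing about how Marble represents a world internally. It simply compares two snapshots of the same world, taken before and after the camera moved, and complains about anything that vanished or teleported without an action explaining it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    name: str
    position: tuple  # (x, y, z) in world coordinates, not camera coordinates


def permanence_violations(before, after, moved=frozenset(), tolerance=1e-6):
    """List objects that vanished or teleported between two world snapshots.

    `moved` holds the names of objects an action legitimately displaced.
    """
    after_by_name = {obj.name: obj for obj in after}
    violations = []
    for obj in before:
        later = after_by_name.get(obj.name)
        if later is None:
            violations.append(f"{obj.name} vanished")
            continue
        drift = max(abs(a - b) for a, b in zip(obj.position, later.position))
        if obj.name not in moved and drift > tolerance:
            violations.append(f"{obj.name} teleported")
    return violations


# Same room, camera rotated, nothing was touched (hypothetical scene).
before = [Obj("chair", (0.0, 0.0, 0.0)), Obj("table", (1.0, 0.0, 0.0))]
after = [Obj("table", (1.0, 0.0, 2.0))]

violations = permanence_violations(before, after)
print(violations)  # ['chair vanished', 'table teleported']
```

A language model can talk its way around a missing chair. A check like this cannot be talked to.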

That requirement forces causality into the model.

The system either anticipates outcomes correctly or exposes its lack of understanding through collapse, or visual incoherence.

Much of modern AI progress has leaned on outputs that sound internally coherent, confident, and socially acceptable, even when the underlying reasoning is weak, and has treated that fluency as a proxy for intelligence. If a model could explain its reasoning convincingly, errors were often forgiven or overlooked. World models remove that shelter. There is no rhetorical escape hatch when the environment breaks.

In that sense, the industry transformations in games, architecture, media, and science are almost side effects. Useful, valuable, even disruptive, but not foundational.

The real change is, however, epistemic.

Intelligence is no longer evaluated by how well a system can narrate reality, but by how well it can remain consistent with it over time.

That reframing raises the bar.

And maybe that is why Jad Tarifi has the audacity to launch a new World Model (Integral AI) AND redefine AGI at the same time, even though it goes against the common opinion (he calls it AGI-Capable).

AGI, but make it annoying and plausible

Talking about Jad Tarifi’s Integral AI is equivalent to talking about AGI. And let me get one thing straight from the start: World Models are not AGI. Anyone claiming otherwise is either fundraising or confused.

But they expose something language models carefully avoid which is “intelligence without grounding is fragile”. It collapses under distribution shift. It breaks when asked to act instead of speak and it cannot reason about consequences because it has never had to face them.

World models, by necessity, learn constraints.

They learn that the world resists.

That actions have irreversible effects. That causality is not optional.

This doesn’t replace language, but contextualizes it instead.

AGI, if it ever exists, will not emerge from text alone. It will emerge from systems that can perceive, act, simulate, reflect, and communicate across modalities.

Language-only maximalism already looks like a historical detour.

Marble is public, which means this gets messy fast

Marble is not safely tucked away behind a lab door in a vault, nor buried in a PDF on arXiv. Instead, they chose to make it available to the public right now. There’s a free tier that I showed you, and there are paid tiers for when you want to do more editing or download stuff, and the good thing is that there is no waiting for peer review to trickle down into a startup pitch deck.

This matters more than most people realize.

Because when powerful tools become accessible, people like me want to break them. We combine them with things they were never meant to touch, and that is how a capability evolves from an academic curiosity into infrastructure.

It is also how the myth of the World Model dies, because Marble is imperfect. Complex scenes strain it, and the physics is plausible, but not exact, and rare configurations like my antenna array confuse it. So, right now, performance is not infinite.

Good.

If it were perfect, it would be lying. If it were effortless, it would be shallow. The point is not that Marble solves everything. The point is that it moves the problem into the right domain.

From talking about the world to modeling it.

Oh, there’s one other thing I’d like to share. Marble is not the end of language models. It is the end of pretending they are all we need (remember the 2017 transformer paper “Attention Is All You Need”). The field is slowly rediscovering ideas that were obvious before we decided scale was a substitute for grounding.

And for you, my friend, from now on, you will watch the internet slowly realize that predicting words is not the same thing as understanding reality, no matter how confidently the model says “Certainly”.

Signing off,

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.

👉 Think a friend would enjoy this too? Share the newsletter and let them join the conversation. LinkedIn, Google and the AI engines appreciate your likes by making my articles available to more readers.

To keep you doomscrolling 👇

- I may have found a solution to Vibe Coding’s technical debt problem

- Shadow AI isn’t rebellion it’s office survival

- Macrohard is Musk’s middle finger to Microsoft

- We are in the midst of an incremental apocalypse and only the 1% are prepared

- Did ChatGPT actually steal your job? (Including job risk-assessment tool)

- Living in the post-human economy

- Vibe Coding is gonna spawn the most braindead software generation ever

- Workslop is the new office plague

- The funniest comments ever left in source code

- The Sloppiverse is here, and what are the consequences for writing and speaking?

- OpenAI finally confesses their bots are chronic liars

- Money, the final frontier. . .

- Kickstarter exposed. The ultimate honeytrap for investors

- China’s AI+ plan and the Manus middle finger

- Autopsy of an algorithm – Is building an audience still worth it these days?

- AI is screwing with your résumé and you’re letting it happen

- Oops! I did it again. . .

- Palantir turns your life into a spreadsheet

- Another nail in the coffin – AI’s not ‘reasoning’ at all

- How AI went from miracle to bubble. An interactive timeline

- The day vibe coding jobs got real and half the dev world cried into their keyboards

- The Buy Now – Cry Later company learns about karma
