How about this for a title? Man, the title itself is larger than the whole darn blog post. Sorry for that. I mean, the damn.

I’ll be honest.

After MIT wrote their magnificent clown-opera of a study that claimed 95% of AI projects fail, my trust in their AI research plummeted. I even wrote an entire autopsy about it‡ – my 2 cents of a public service announcement that masqueraded as a blog post – where I showed that if MIT wants to study failure, maybe it should start by looking in the mirror, preferably before publishing another report that reads like a parody of itself.

So believe me when I say that I approached Project Iceberg with the same enthusiasm one reserves for expired sushi. MIT and AI research in the same sentence has started to feel as if I’m inviting a pyromaniac to evaluate fire safety protocols.

But.

And I hate this “but.”

They didn’t do this one alone.

They partnered with actual grown-ups, like serious institutions with a track record longer than an MIT grant application. The kind of collaborators who don’t write click-bait posing as econometric rigor. And somewhere between my cynicism, their reputation, and the quiet hope that MIT wouldn’t fuck it up twice in a row, I decided to extend the smallest possible benefit of the doubt.

Not trust.

Not faith.

Just… a temporary suspension of disbelief.

And thank whatever cosmic force governs academic humiliation, because Project Iceberg isn’t the same flavor of methodological improv theater as their last fiasco. No, this one is a data-driven slab of cold and harsh reality. Yes, it is the kind of research that forces you to stop laughing and start worrying.

So fine, MIT.

You got one more inning.

Don’t make me regret it.

‡ Read MIT proved it is failing at research while studying failure itself | LinkedIn


AI nibbles at your tasks until there’s nothing left

So yeah, MIT has once again wandered into the labor market with a flashlight, tripped over a data model the size of a small moon, shoved it into ChatGPT and then announced that AI’s impact is… how do I put this gently… a looming economic boot aimed squarely at your career’s throat. But written in academic language.

I’ve been ranting about this for years. Not because I’m psychic or edgy, but because when you work in tech long enough, you start seeing patterns. And those patterns all tell the same bedtime story that AI steals little pieces of your job until suddenly you are “transitioned to opportunity space”, eating yogurt alone at 11 AM.

Let me repeat the three greatest hits of the coming labor-market demolition derby, fresh from MIT’s stable full of horseshit:

- AI replaces tasks, not jobs. Until enough tasks disappear that the job politely evaporates.

- The pace of change is absurd. Your planning cycle has already lost the race.

- Small changes make BIG dents. The butterfly flaps its wings and entire departments disappear into “strategic efficiencies”.

Now MIT wrote it all down in their Project Iceberg and said “Yeah, everything you feared is correct, but also, kinda, um, worse”.

The report no one wanted, delivered right on time

Their report, “The Iceberg Index: Measuring Skills-Centered Exposure in the AI Economy”, is what you get when very smart people with very powerful computers accidentally map out the economic version of the Titanic’s evening plans.

I don’t normally rewrite research notes, but when the data suggests “brace for impact” in five different fonts, the least I can do is offer commentary while the ship sinks.

The headline: tech jobs and call centers were the warm-up act. The real bloodbath hasn’t even started.

MIT quotes a figure: AI now pumps out over a billion lines of code per day.

That is more code than humans produce, and definitely more than humans should ever be allowed to maintain.

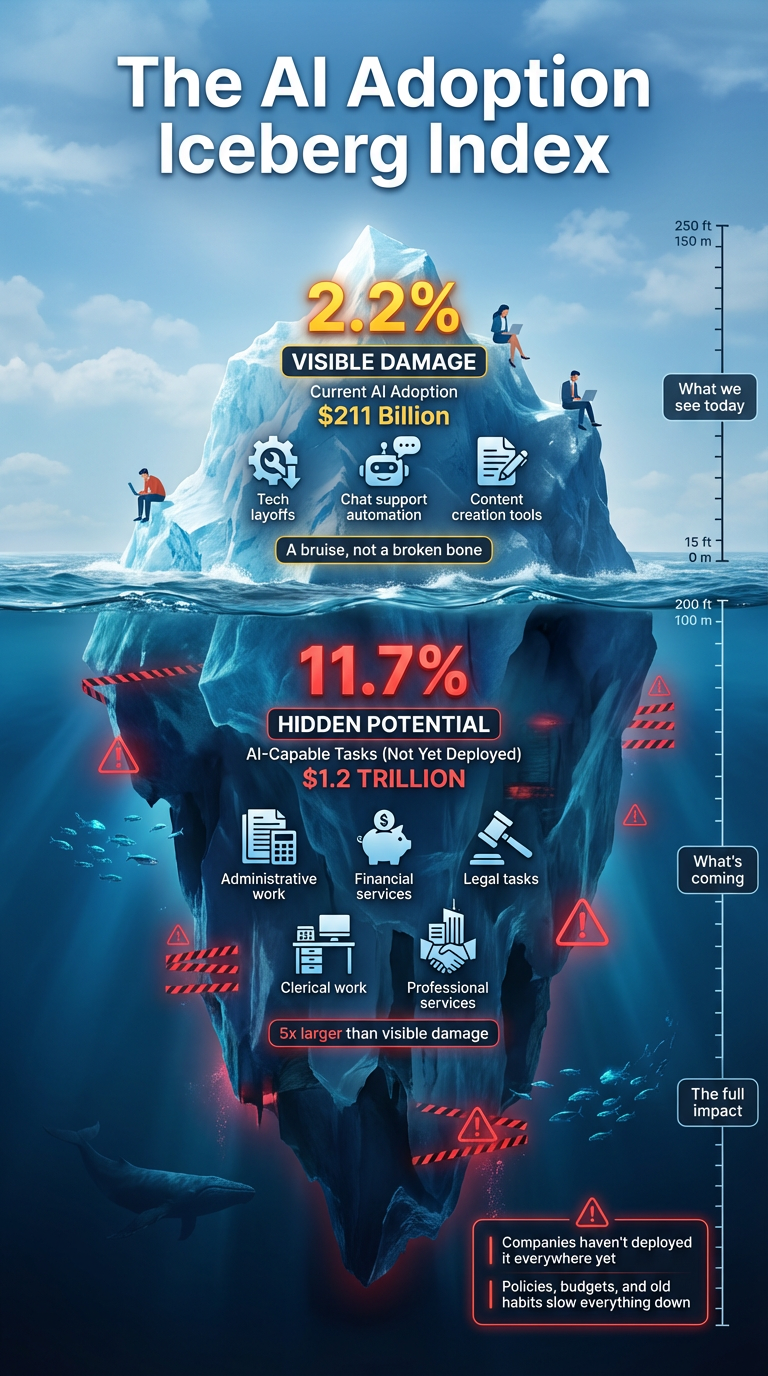

But that’s the visible part of the story, the tiny tip of the iceberg (hence the title) gently poking out above the waves. Underneath is a giant frozen mass of administrative, financial, clerical, and professional jobs quietly lining up for execution.

For an appetizer, read The post-human back office | LinkedIn

151 Million AI based doppelgängers later…

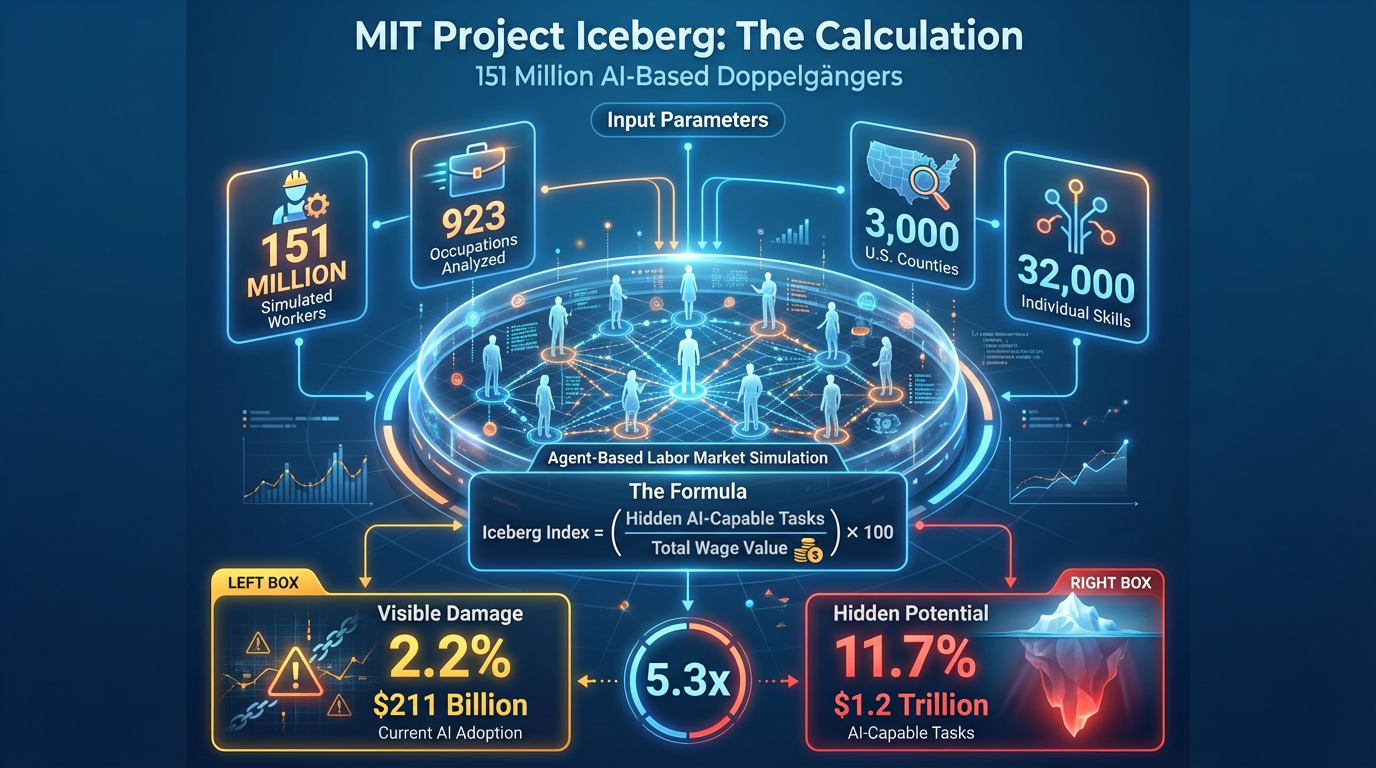

In their study, MIT simulated 151 million workers. Each worker was represented as an agent, across 923 occupations and 3,000 U.S. counties, using 32,000 individual skills.

Yes, it is basically The Sims, if you were asking, but instead of making your character wet themselves, the model shows how AI shreds the labor market.

And what did they discover?

According to MIT’s number-crunching machine, visible AI adoption accounts for only 2.2% of total wage value, roughly $211 billion. Manageable. A bruise. Right now, the jobs we can actually see being hit by AI (the tech layoffs, the chat support roles, the content monkeys) make up just a tiny slice of the total U.S. labor economy.

That tiny slice is 2.2% of all wages paid in America, which is about $211 billion worth of work each year.

In other words, only a very small part of the workforce is visibly getting smacked by AI so far.

It’s noticeable, but not catastrophic.

A bruise, not a broken bone.

It means the pain we’re seeing today – layoffs in tech, automation in call centers, tools replacing junior developers – is small compared to the full picture.

So, the visible damage is only 2.2%. The hidden damage sits below the waterline: the vast universe of administrative, financial, clerical, legal, and “professional services” tasks that AI can munch through without anyone noticing. That part clocks in at 11.7% of all U.S. wage value.

That is about $1.2 trillion worth of human labor that AI could already perform today. More than five times larger than the visible damage.

I specifically use the word “could”, not “does”, because:

- Companies haven’t deployed it everywhere yet

- People are still doing parts of those tasks

- Policies, budgets, and old habits slow everything down

So, AI is already technically capable of doing that amount of human work – right now – even if companies haven’t flipped the switch yet.

MIT packaged this horror into something they called the Iceberg Index.

The Iceberg Index

MIT calculated the Iceberg Index in a way that everybody (even policy makers) could understand, though they wrapped it in enough soothing academic language to hide the panic. They took every job in the country and broke it down into the actual tasks people do. They used 32,000 individual skills across 151 million simulated workers. Then they looked at each skill and determined whether AI models can already do it today at a competitive level. When the answer was yes, they tagged that portion of the job as “AI-performable”. They did this for every worker in every occupation across 3,000 counties, which gave them a giant map of how much human labor could technically be automated right now.

After that, they converted all those AI-performable tasks into wage value.

Not job titles, not whole roles, just dollars tied to the pieces of work that AI could already take over. If a person earns, say, $60,000 a year and 30% of their job is made of tasks AI can handle, MIT counted $18,000 of that as “AI-performable wage value”. Add that up across the whole economy and you get roughly $1.2 trillion hiding under the surface: the part of the labor market AI could already do today.
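For the spreadsheet-minded, here is a minimal sketch of that wage-value arithmetic. It is my own illustration, not MIT’s code: the `Worker` class and the `ai_performable_share` field are made-up names, and the only data point is the 60,000-salary example from the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    annual_wage: float            # yearly wage
    ai_performable_share: float   # fraction of the job's tasks AI could do (0.0 to 1.0)


def ai_performable_wage_value(workers):
    """Sum the wage money tied to tasks that AI could already perform."""
    return sum(w.annual_wage * w.ai_performable_share for w in workers)


# The single worked example from the text: 60,000 a year, 30% AI-performable.
example = [Worker(annual_wage=60_000, ai_performable_share=0.30)]
print(round(ai_performable_wage_value(example)))  # about 18,000
```

Feed it 151 million of those instead of one and you have the general shape of the calculation, assuming the per-worker shares are known.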

Then they compared that hidden impact to the “visible” impact – the places where AI is already replacing work in the real world – which only adds up to about $211 billion.

The Iceberg Index is simply the ratio between these two numbers. The visible part is tiny at 2.2% of total wages, while the hidden part is five times bigger at 11.7%.
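A quick sanity check on those numbers, as a sketch that uses only the rounded figures quoted in this post (2.2%, 11.7%, $211 billion). The variable names are mine, and the implied total is my own back-of-the-envelope derivation, not a number from the report.

```python
visible_share = 0.022      # visible AI impact as a share of U.S. wage value
hidden_share = 0.117       # technically AI-performable share of U.S. wage value
visible_dollars = 211e9    # the visible share expressed in dollars

# Total wage value implied by the visible figures.
total_wages = visible_dollars / visible_share

# Dollar value of the submerged part, using that implied total.
hidden_dollars = total_wages * hidden_share

# How many times bigger the hidden part is than the visible part.
ratio = hidden_share / visible_share

print(f"implied total wages: ${total_wages / 1e12:.1f} trillion")
print(f"hidden wage value:   ${hidden_dollars / 1e12:.2f} trillion")
print(f"hidden vs visible:   {ratio:.1f}x")
```

With these rounded inputs the hidden part lands a little above $1.1 trillion, the same ballpark as the roughly $1.2 trillion quoted, and the ratio comes out slightly above five.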

MIT’s conclusion is that everything we’re currently seeing – the layoffs, the automation, the organizational reshuffling – is just the tip of a much larger mass lurking below.

The Process Automation Value Model

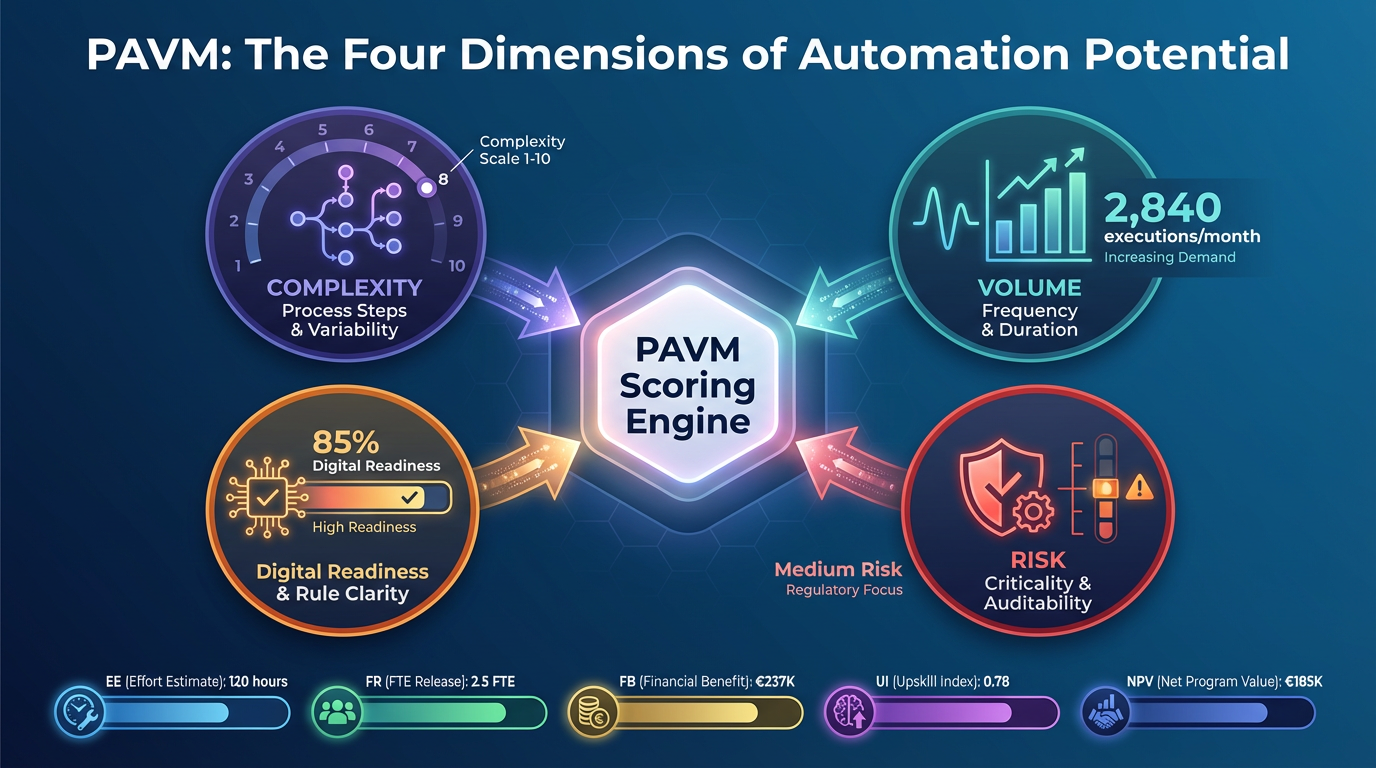

Since I’m already elbow-deep in the gore of MIT’s Iceberg Index, I might as well confess that I’ve built my own diagnostic tool for exposing the soft underbelly of organizational workflows. I call it the Process Automation Value Model, or PAVM. It’s a much less cinematic name than Iceberg, but at least mine doesn’t pretend to be a tragic maritime metaphor.

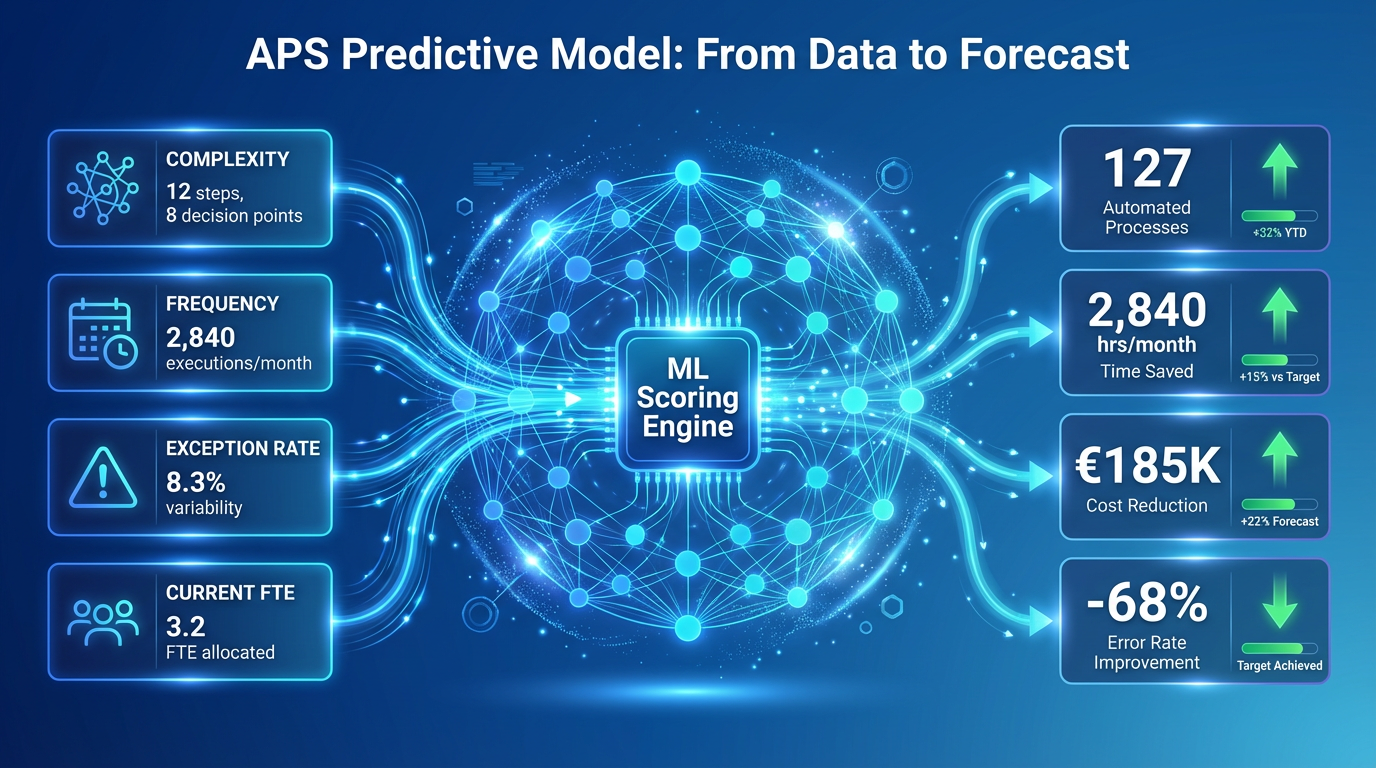

The PAVM boils down to a simple formula: Complexity + Volume + Automatability + Risk = Automation Potential Score (APS). In other words, how messy is the process, how often does it happen, how easily can a machine take it over, and how badly will things explode if it goes wrong. Add those dimensions together and you get an unfiltered view of which processes deserve to live, which ones deserve to die, and how much human time (and money) you recover in the execution.

The model spits out all the downstream goodies – effort estimates, FTE release, financial benefit, upskill index, net program value – which means you can finally stop guessing which parts of your organization are clogging the pipes and start measuring where the automation scalpel should cut next.

I think it’s actually the anti-Iceberg. I’m not telling everybody that “doom is coming”. I simply tell you exactly where the value is hiding, exactly what to automate first, and exactly how much human potential you can recycle once the machines take the grunt work away.

The glorious thing (ahem) about the APS is that it gives you numbers instead of opinions.

Once every process has an Automation Potential Score, you no longer run your AI refactoring team on gut feelings and folklore and “Gerard thinks this is important”. Instead, APS turns the entire landscape of work into a ranked backlog of what to automate first, what to redesign, and what to leave alone unless you enjoy setting money on fire.

A high APS tells you a process is basically an automation goldmine: high volume, high manual drag, high consistency, low human judgment.

These are the “kill it with robots” candidates. A medium APS shows processes that can be automated but probably need simplification first. A low APS means you’re dealing with a swamp of complexity, exceptions, audits, tribal knowledge and duct tape. You don’t automate these, you fix the dysfunction under them.
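To make the ranking idea concrete, here is a rough sketch of how a summed score becomes a backlog. Everything specific in it is an assumption for illustration: the 1-to-5 scale, the convention that 5 always means “most favorable for automation” (so a simple, low-risk process scores high on Complexity and Risk), the cut-offs of 16 and 10, and the three example processes. It is not the actual PAVM implementation.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    complexity: int      # 1-5, ASSUMED scale: 5 = simple, few exceptions
    volume: int          # 1-5, 5 = happens constantly
    automatability: int  # 1-5, 5 = a machine can take it over easily
    risk: int            # 1-5, ASSUMED scale: 5 = little damage if it goes wrong

    @property
    def aps(self) -> int:
        # The formula from the text: a plain sum of the four dimensions.
        return self.complexity + self.volume + self.automatability + self.risk


def band(score: int, high: int = 16, medium: int = 10) -> str:
    """Map a score to an action. The thresholds are hypothetical."""
    if score >= high:
        return "automate"
    if score >= medium:
        return "simplify first"
    return "fix the process first"


def ranked_backlog(processes):
    """Highest Automation Potential Score first."""
    return sorted(processes, key=lambda p: p.aps, reverse=True)


# Hypothetical processes, scored by hand.
backlog = ranked_backlog([
    Process("contract review", 2, 3, 3, 3),
    Process("invoice matching", 5, 5, 4, 4),
    Process("audit exceptions", 1, 2, 1, 1),
])
for p in backlog:
    print(f"{p.name}: APS {p.aps}, {band(p.aps)}")
```

The useful property of an additive score is that it is boring: anyone can see why a process ranks where it does, and arguing about a single dimension is cheaper than arguing about the whole backlog.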

From APS, you can derive everything that actually matters, like Effort Estimate (EE), FTE Release (FR), Financial Benefit (FB), Upskill Index (UI), and the big one, Net Program Value (NPV).

And yes, I’m only involved in AI projects that don’t sack people. I’m a techno-Marxist. Yes, including a love for that kind of music. That’s why there’s an upskill index. Because once you start cutting processes out of the organization with the automation scalpel, you need somewhere to send all the liberated human brainpower.

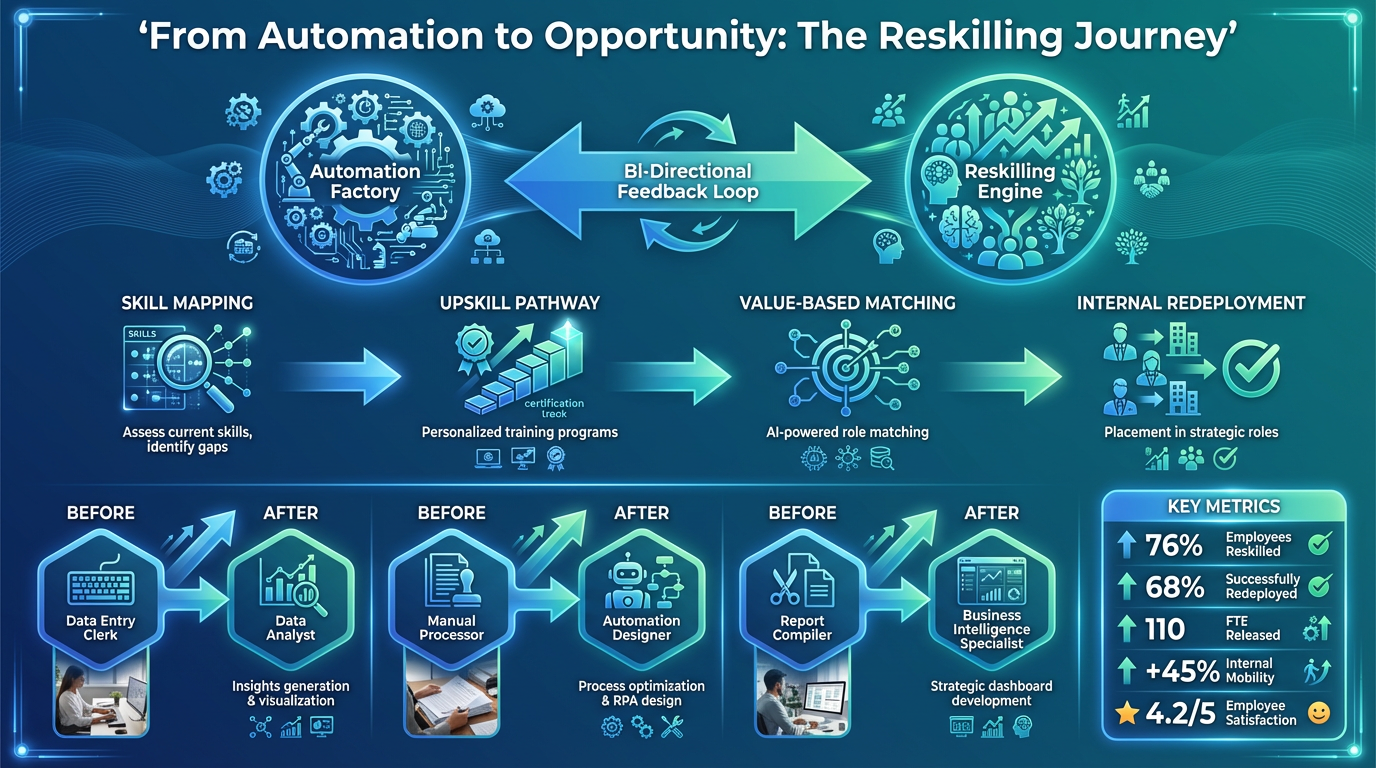

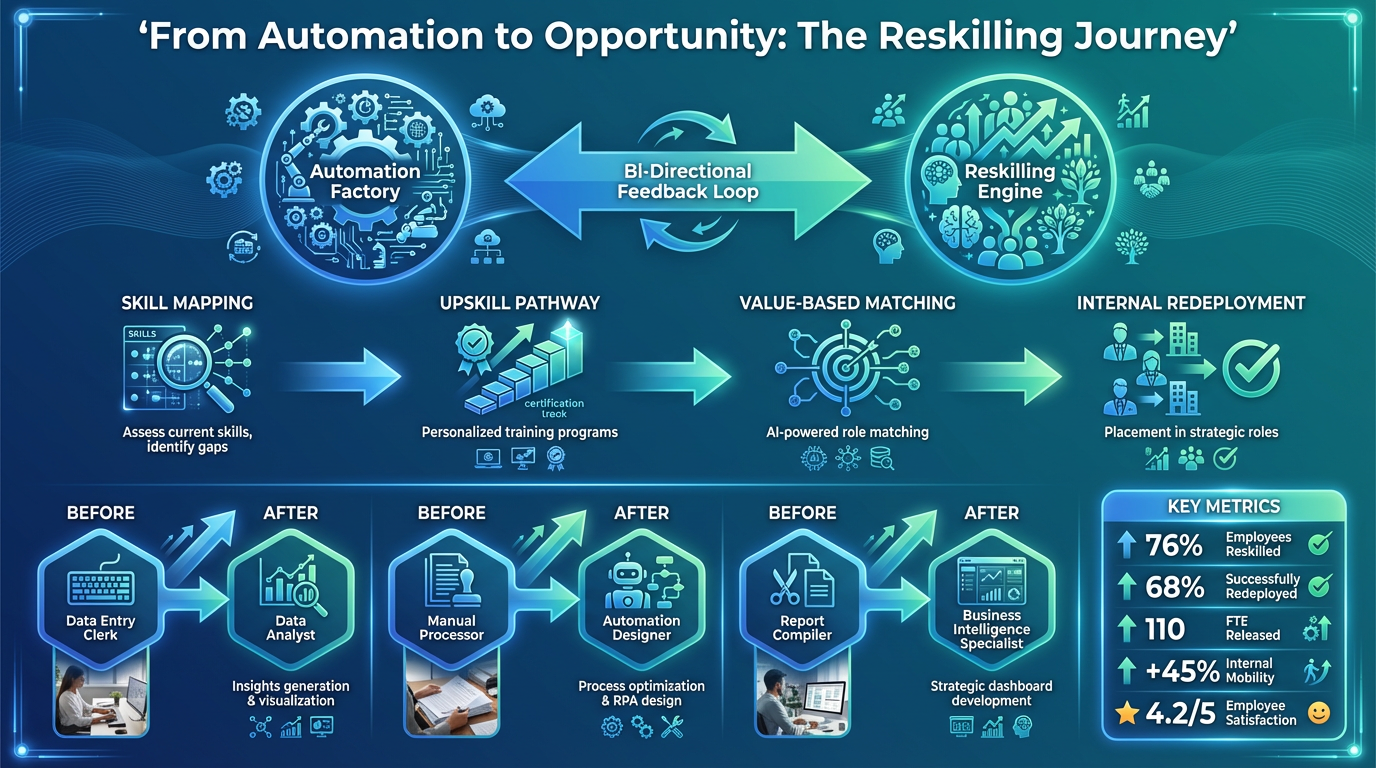

That’s where what I call the Reskilling Factory comes in: the second engine in the dual-machine setup. It takes every FTE freed by automation and runs them through a structured transformation pipeline: skill mapping to figure out what they can do, upskill pathways to get them where they should go, and a value-based matching model that plugs them into roles where their expertise actually matters.

It’s recycling, but for intelligence.

Why we cannot really measure this storm

Ok, now it’s time for me to be a piss-ant, because, again, this report is built on quicksand. Yup. And that’s because GDP and unemployment are useless thermometers during an AI hype cycle. The economy is burning calories in places that we don’t even measure. AI automates paperwork in a hospital and, poof, real productivity rises without leaving a trace in the numbers.

Does GDP see it? No.

Does unemployment reflect it? No.

Does productivity magically spike? Also no, because productivity only rises when output rises, not when bullshit disappears.

AI is creating value (and destruction) in places the metrics literally cannot detect, so economists are standing there like abandoned Clippy prototypes, and the real economy is melting under their feet.

So yeah, that is exactly what they’re saying, though politely, academically, wearing a tie and a mortarboard – but still saying it, yes.

Here’s the translation of their academic throat-clearing:

“We can’t really measure this storm” = our current economic metrics are blind, brittle, outdated, and fundamentally incapable of detecting AI’s real impact.

So is the report “bogus”?

Not bogus – just built on top of a measurement system that was never designed for this kind of disruption.

Aha.

They were using a kitchen thermometer to measure a forest fire. You can still write a report. You can still say numbers. But the tool itself is laughably inadequate.

So why continue with this article?

MIT isn’t fundamentally wrong, they are just painting a storm with crayons and telling you it’s meteorology.

But because it (the AI) is service-based and invisible, economists just stand there blinking like Windows 98.

Say what now?

Most AI automation happens in services (admin tasks, paperwork, coordination, emails, scheduling, analysis, triage, customer support), but unlike manufacturing, services don’t produce a countable physical output. So when AI automates tasks in these domains, the economic value created (or the labor displaced) isn’t directly measurable. If say, a hospital admin worker goes from 6 hours of paperwork to 1 hour thanks to AI, output doesn’t increase. They still process the same number of patients.

So productivity on paper stays the same, even though the human effort required collapsed by more than 80%.

AI removed labor, but the metric didn’t blink.
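Here is the hospital example as arithmetic, in a small sketch. The 6-hour and 1-hour figures come from the paragraph above; the patient count, the function names, and the choice of “patients per admin worker” as the on-paper metric are assumptions I made up to show the effect.

```python
def measured_productivity(patients_processed: int, admin_workers: int) -> float:
    """Output per worker: the kind of number that shows up on paper."""
    return patients_processed / admin_workers


def effort_reduction(hours_before: float, hours_after: float) -> float:
    """Fraction of human effort that disappeared."""
    return (hours_before - hours_after) / hours_before


# Hypothetical ward: same staff, same patients, before and after AI.
before = measured_productivity(patients_processed=40, admin_workers=1)
after = measured_productivity(patients_processed=40, admin_workers=1)

print(f"measured productivity change: {after - before}")
print(f"paperwork effort removed: {effort_reduction(6, 1):.0%}")
```

The first number is zero and the second is five-sixths of the paperwork. That gap between the two lines is the whole measurement problem in miniature.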

And GDP only counts what is sold, not what’s saved. When AI eliminates 10 million hours of clerical work across the economy, GDP sees nothing. When AI adds something new, maybe a software subscription, GDP sees that. All the efficiency and displacement remain invisible.

AI is restructuring labor at the task level, but our economic instruments only detect changes at the job and industry level, so they miss the transformation entirely.

And that’s why MIT’s warning is still important. The details may be imperfect, but the overall message is real, and ignoring it would be a mistake.

MIT themselves say correlation, not causation – but come on

By the way, they themselves tried to cover their asses with the classic “validation correlational rather than causal”.

Adaptation is happening slower than my WiFi

In the report, these guys politely phrase it as “workforce change is occurring faster than planning cycles can accommodate”. Retraining will be needed, and on a scale we’ve never attempted. But at most of the organizations I come across, the attitude right now is basically “we’ll figure it out later”, the famous last words of every failed empire.

And now the iceberg itself

Here’s the line you tattoo on your forehead:

AI’s visible impact: 2.2% of wage value

AI’s real impact: 11.7% of wage value

That’s five times bigger, or in academic terms “Holy shit”.

Tech is only 6% of the workforce but has 30% of the S&P’s value, and this year it was responsible for roughly 1.1 percentage points of U.S. GDP growth, thanks almost entirely to massive AI-driven infrastructure spending.

So when tech felt the first tremors, that was the first TechTonic Shift pre-quake teaser.

The submerged portion of the iceberg is the $1.2 trillion in cognitive, administrative, financial, and professional services that are going to get nibbled to death, task after task.

And with the rise of Agentic AI, and the coming of Browser-Use at scale, there will be more carnage.

And Challenger is in the back shouting FIRE

MIT politely modeled the apocalypse and used soothing words, but Challenger, Gray & Christmas (yes, Christmas), the U.S. layoff-tracking firm, reported that 2025 was the year in which over 1,000,000 U.S. layoffs were announced, with AI among the reasons employers cited.

And when you combine Challenger’s data with MIT’s model, you get a delightful new equation: AI is eliminating jobs faster than society can count them AND five times faster than anyone can see them.

Perfect.

And then there’s the secondary collapse

By the way, MIT didn’t even model the real carnage, because there’s always a ripple-effect.

Cut one-third of the workers in a business park and you can say goodbye to:

- Cleaning services

- Lunch cafés

- Strip clubs

- Dry cleaners

- Corner bodegas

- Parking attendants

- Microbusinesses built around office life

These are the dominoes.

States with smaller tech sectors will get hit hardest, because their economies are basically made of these submerged categories.

And the thing that stings me the most is that all the “saved labor cost” just drifts upward to shareholders, where it sits in a vault doing absolutely nothing for the local economy, until the AI bubble bursts, the middle class collapses into foreclosure, and that same money is used to buy up everything they lost at discount.

The conclusion because there’s always a conclusion

Even if your job isn’t being eaten today, it’s standing near the buffet.

Society needs to prepare.

But it won’t.

Governments need retraining programs on a national scale.

But they’re still arguing about updating the EU AI Act, implementing the new Framework Convention, and AI literacy training. And the thing is that it doesn’t really matter if your skills now include a prompt-engineering certificate from Udemy when a model like the Iceberg or my PAVM comes along and determines that the repetitiveness score of your job is above a certain threshold.

What we need is new tax systems that tax the ultra-rich, taxes on dividends, new safety nets, new entrepreneurship support, new everything. But what happens instead across the globe is that the working class and the middle class are getting taxed more heavily. Drain the middle class, pat them on the head, and tell them it’s “for stability”.

We are the slaves of our era.

We buy homes on credit and call it a mort-gage, a dead pledge†. We produce offspring and get a government-approved “child allowance”, as if our bloodline needs state coupons to remain viable. We lease cars we’ll never own, we work regular jobs with wages that evaporate on arrival, and we carry “health insurance” policies that insure nothing except insurer profits. We sign 30-year student loans so we can join the economy already in chains, we obediently pay value-added tax on every breath we take, and we “save for retirement” in systems designed to collapse before we ever see a cent. We register our businesses, our homes, our IDs, our existence – and each registration is another thread in the net.

† Indeed, the etymology stems from mort+gage. MortGage = “dead promise”. Mort = dead (Old French, from Latin) and Gage = pledge or promise (Old French)

And you, dear reader

As someone who’s creating strategies and running massive programs with the aim of automating processes at scale, the advice I give you is to strengthen the skills that can’t be automated, like leadership, communication, creativity, coordination, judgment.

Yes. The soft stuff. The stuff AI can impersonate but not embody.

Or move to jobs that require manipulating “stuff” – yes, with your hands, your Manus.

Everything else, yeah, the algorithms are licking their lips.

And for me personally, may my future job prospects outlive my cynicism, though honestly at this point, the cynicism has better odds.

Sign-off?

Sure.

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.
