(now with 30% more truth and 200% more “oh, so that’s why everything keeps breaking”)

AI has evolved so fast that even people like me are typing “how to keep up with my own industry” into ChatGPT. We went from IBM Watson’s polite, rule-following symbolic reasoning to today’s generative and agentic beasts that just guess their way through reality, but with absolute swagger. It’s been a full-on paradigm shift from explicit logic to probabilistic improv theater, and yet, in the middle of this whirlwind, a quiet old truth is tiptoeing back into the spotlight.

Today, I’ll be talking about ontologies – yes, those boring, formal maps of how things relate to each other – and why they now matter more than ever.

The nerdy charts you once ignored. That’s what I mean.

Yeah, those.

But let me explain an ontology in slightly more detail than this.

And yes, it can (and will) be a boring intellectual piece, so if you’re easily bored or dumb a.f., skip it, and wait for a more trashy one that’s coming tomorrow.


So… What is an ontology, really?

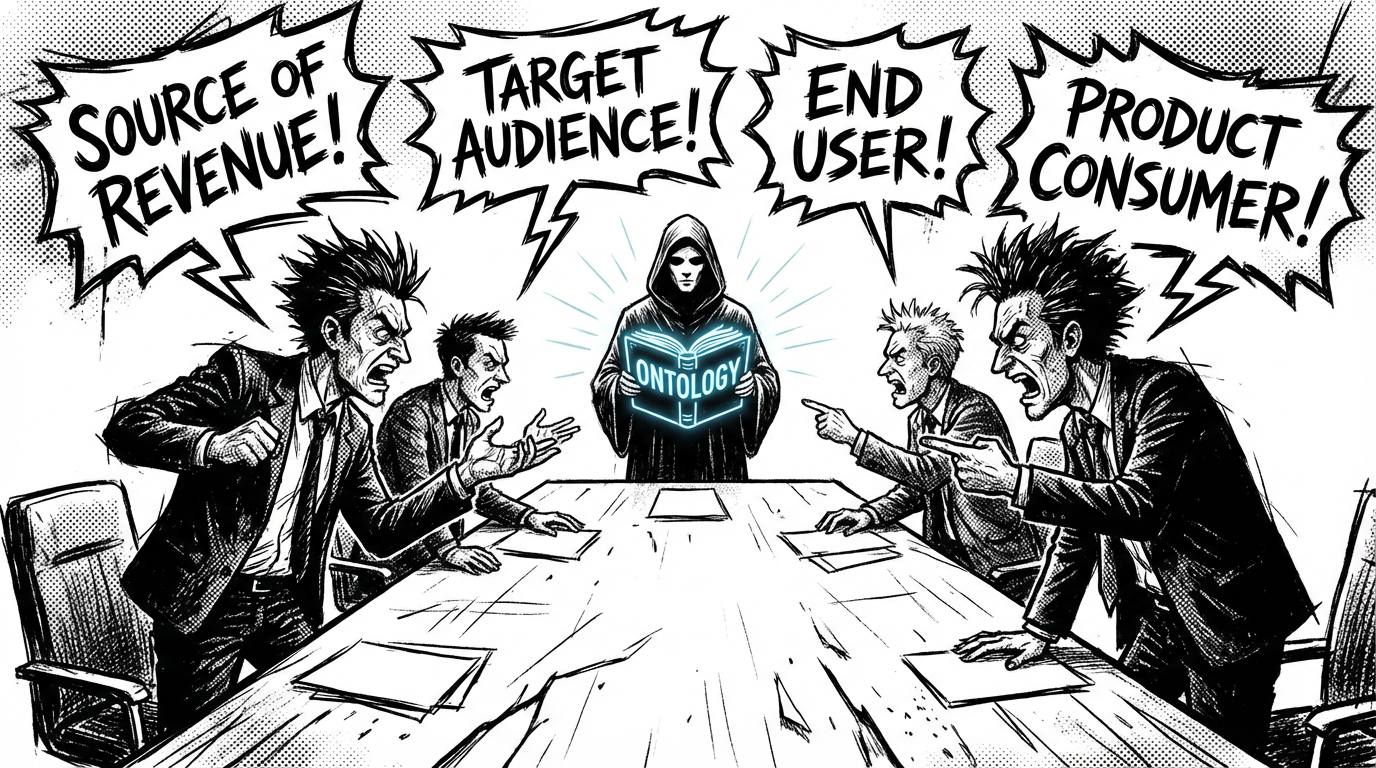

You’re walking into a Fortune 500 meeting where ten executives are arguing about the word “customer”.

One says it means the person who pays, another says it means whoever uses the product. A third insists it means “the account holder”, and a fourth claims it’s “whoever we can upsell”.

Nobody aligns. None of them agrees, and the thing is that no one even realizes they disagree.

This, of course, leads to chaos.

And that is the exact moment an ontology walks in like a calm, well-mannered adult, clears its throat and says . .

“Children, sit down. I’m going to define things so your systems stop hallucinating”.

An ontology is a formalized, structured map of concepts in a domain – and how they relate to each other – written in a way that machines can understand.

It is not a list or a dictionary, and it’s absolutely not a taxonomy either†. It is, however, the rulebook for reality inside your system. If data is the raw ingredients, and AI is the chef, the ontology is the recipe that keeps it from mistaking bleach for milk.

Because it defines a bunch of whats . . .

- What exists in your domain

- What the concepts mean

- How they relate

- What rules constrain them

- What can and cannot happen

It’s the thing that makes your data, your agents, and your models stop free-styling.

† A taxonomy is just a tidy list that sorts things into categories, but an ontology explains not only the categories, but also what each thing is, how they relate, and how everything connects in a whole system.

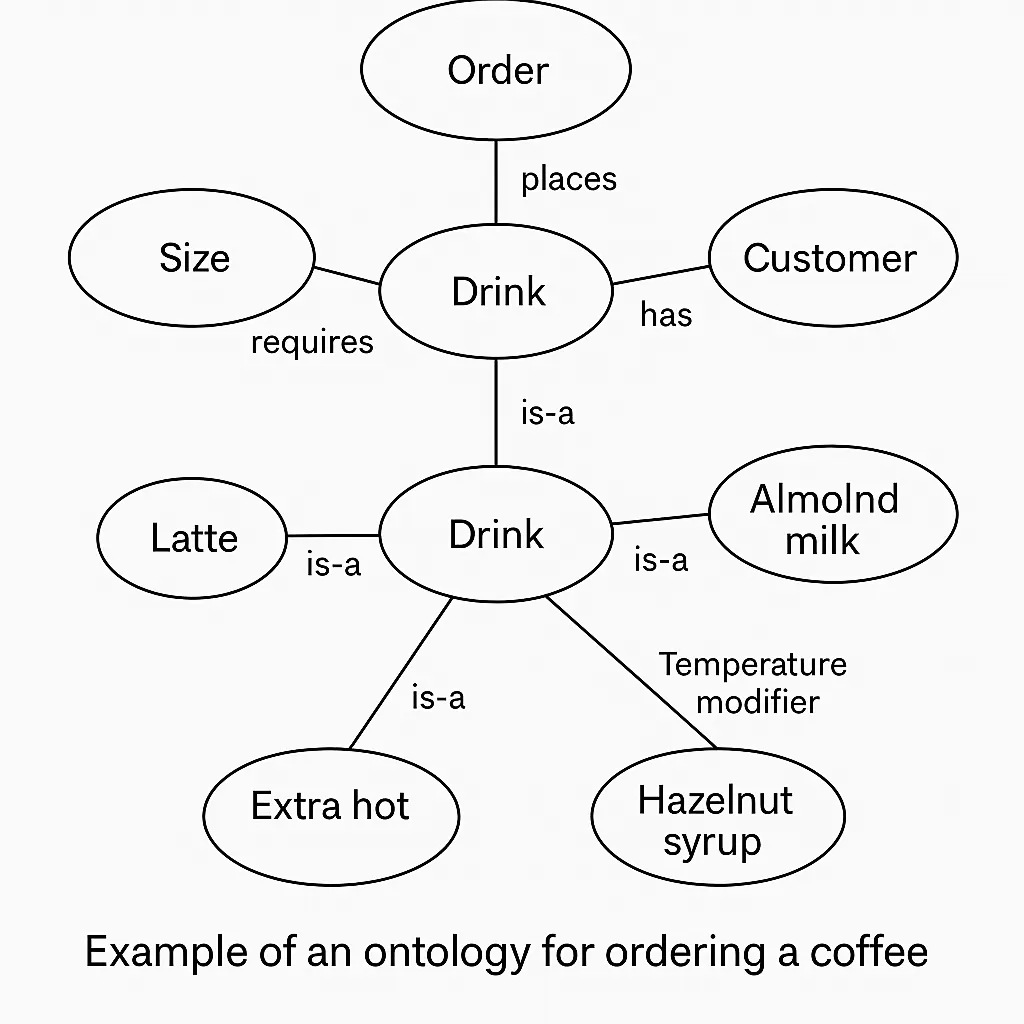

Example – the ontology of ordering a coffee

Let’s do everyday life, not NASA kind of ontologies.

Take a barista. He needs to understand the difference between

- drink

- milk type

- size

- temperature

- add-ons

- payment method

- customer identity

- order number

The relationships are visualized like this:

And the ontology specifies

- that a latte is-a drink

- hazelnut syrup is-a add-on

- almond milk is-a milk type

- extra hot is-a temperature modifier

- a customer places an order

- an order contains one or more drinks

- a payment method must satisfy a process

- a drink requires exactly one size

This sounds trivial… until you hand it to an AI without structure.

Because an ungrounded model will – without shame – produce something like

“Your venti iced cappuccino with almond milk is extra hot”.

This is how you know ontologies save lives, since they prevent nonsense through rules that even a machine can’t argue with.
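To make that less abstract, here is a minimal Python sketch of the coffee rules above. The is-a facts and the “exactly one size” rule come straight from the list. The rule that “iced” and “extra hot” exclude each other is my own assumption, implied by the bad example, and every name in the code (`DrinkOrder`, `validate`, the size “tall”) is made up for illustration. It is a toy, not a real ontology engine.

```python
from dataclasses import dataclass, field

# is-a facts from the list above; "cappuccino" and "iced" are added for the example.
IS_A = {
    "latte": "drink",
    "cappuccino": "drink",
    "hazelnut syrup": "add-on",
    "almond milk": "milk type",
    "extra hot": "temperature modifier",
    "iced": "temperature modifier",
}

# Assumed rule (not in the original list): these modifiers exclude each other.
MUTUALLY_EXCLUSIVE = [{"iced", "extra hot"}]


@dataclass
class DrinkOrder:
    drink: str
    sizes: list[str]  # the ontology demands exactly one
    temperature_modifiers: list[str] = field(default_factory=list)


def validate(order: DrinkOrder) -> list[str]:
    """Return a list of rule violations; an empty list means the order is valid."""
    problems = []
    if IS_A.get(order.drink) != "drink":
        problems.append(f"'{order.drink}' is not a known drink")
    if len(order.sizes) != 1:
        problems.append("a drink requires exactly one size")
    for modifier in order.temperature_modifiers:
        if IS_A.get(modifier) != "temperature modifier":
            problems.append(f"'{modifier}' is not a temperature modifier")
    for group in MUTUALLY_EXCLUSIVE:
        if group <= set(order.temperature_modifiers):
            problems.append(f"conflicting modifiers: {sorted(group)}")
    return problems


# The nonsense order from above: one violation comes back instead of a confident answer.
print(validate(DrinkOrder("cappuccino", ["venti"], ["iced", "extra hot"])))
```

The point is not the twenty lines of Python. The point is that the rules live outside the model, so the model’s output can be checked against them instead of being trusted.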

And now, the clean, correct, high-forehead version of an ontology.

An ontology is a formal, machine-readable system that defines the concepts in a domain, the semantic relationships between them, and the logical rules that constrain how those concepts can interact.

So yes, it is a set of relations between semantic concepts.

And yes, it does function like a rule book. Technically it’s both the map and the laws of physics for a particular domain. A taxonomy only tells you that “A is a subtype of B”. But an ontology tells you . .

- what A is

- how A relates to B

- what A is allowed or not allowed to do

- what must be true when A interacts with C, D, or Z

- what constraints, dependencies, properties, and rules govern the entire ecosystem

It’s structure + semantics + logic + constraints, all bundled into one very nerdy spine that keeps AI from drifting into fantasyland.

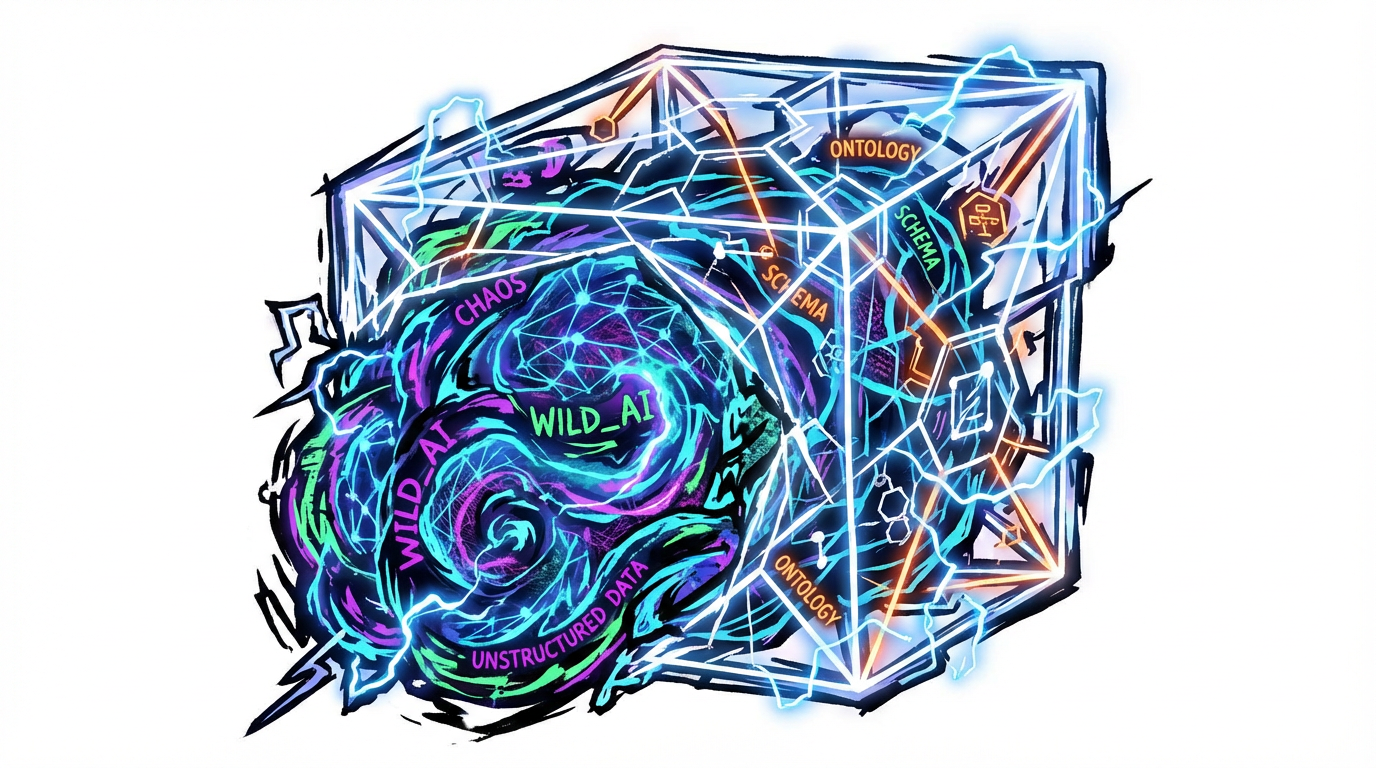

Why ontologies matter in AI more than ever

Generative AI talks fancy but has the factual discipline of my wiener dog going outside when it rains. It sounds intelligent, it predicts patterns, and it hallucinates with absolute confidence. But what it doesn’t do is . .

- enforce domain constraints (In the case of the drink ontology, enforcing domain constraints simply means making sure the AI doesn’t do anything stupid like claiming Coca-Cola is alcoholic, tea grows on cows, or whiskey is served in cereal bowls)

- check factual consistency

- remember what the business cares about

- understand what’s actually allowed

- follow rules unless forced

Ontologies act as the exoskeleton that keeps your model from collapsing into puddles of creative but career-ending nonsense.

They give AI . .

- grounding

- structure

- constraints

- interpretability

- traceability

And they convert your human rules into formats like OWL, RDF, and SHACL – formats machines can obey without needing therapy after.
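If those acronyms mean nothing to you, the underlying idea is simple enough to fake in plain Python. The sketch below stores facts as subject-predicate-object triples (the basic shape RDF uses) and checks them against cardinality rules in the spirit of a SHACL shape. To be loud about it: this is a stand-in, not real RDF or SHACL tooling, and every identifier (`order42`, `hasSize`, `SHAPES`) is invented for the example. In a real project you would reach for an actual RDF library and validator.

```python
# Facts as (subject, predicate, object) triples. All identifiers are hypothetical.
TRIPLES = {
    ("order42", "type", "Order"),
    ("order42", "contains", "drink1"),
    ("drink1", "type", "Drink"),
    ("drink1", "hasSize", "venti"),
    ("drink2", "type", "Drink"),  # no size: should fail the shape below
}

# Per class: (predicate, minimum count, maximum count or None for unbounded).
SHAPES = {
    "Drink": [("hasSize", 1, 1)],      # a drink requires exactly one size
    "Order": [("contains", 1, None)],  # an order contains one or more drinks
}


def objects(subject, predicate):
    """All objects o such that (subject, predicate, o) is a known triple."""
    return {o for s, p, o in TRIPLES if s == subject and p == predicate}


def violations():
    """Check every typed node against the shape declared for its class."""
    found = []
    for node, _, cls in sorted(t for t in TRIPLES if t[1] == "type"):
        for predicate, low, high in SHAPES.get(cls, []):
            count = len(objects(node, predicate))
            if count < low or (high is not None and count > high):
                found.append((node, predicate, count))
    return found


print(violations())  # drink2 is reported because it has zero sizes
```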

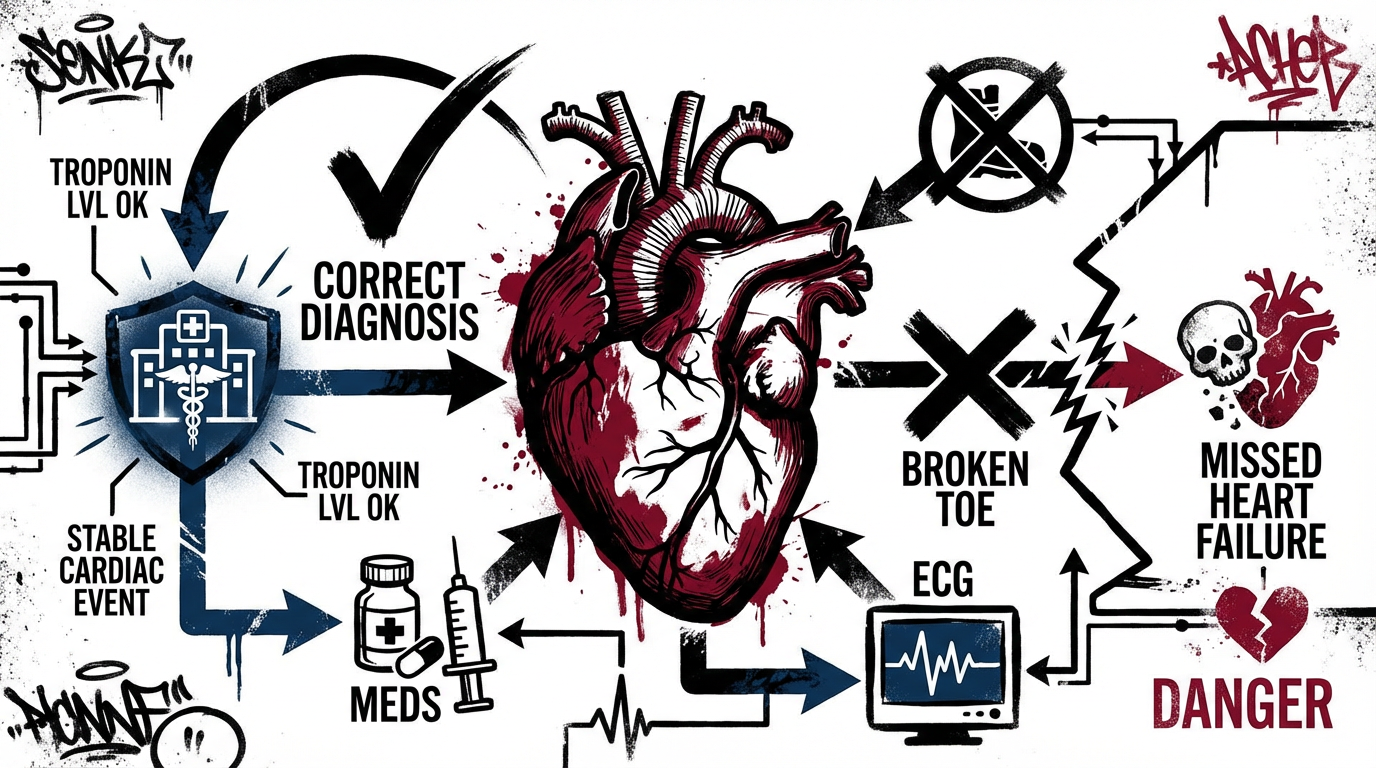

A more complex example

Let’s move to a domain where hallucinations kill people instead of reputations.

Take a clinical system . .

- Myocardial infarction is-a cardiac event

- Cardiac event affects heart tissue

- Troponin levels indicate injury severity

- Aspirin treats thrombotic events

- Aspirin is contraindicated for certain allergies

- Pneumonia is-not-a cardiac event (but a model might try)

Without an ontology, an LLM may diagnose heart failure from a broken toe, and do it with confidence. With one, the system actually knows what you mean when you say “shortness of breath”, “ejection fraction”, or “Troponin I above 0.04 ng/mL”.

Ontologies stop AI from making implicit assumptions and force it to operate within the boundary of reality – something I also struggle with, by the way.
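Here is roughly what “operating within the boundary of reality” could look like in code, as a hedged sketch. Only the myocardial infarction fact and the aspirin rule come from the list above. The parent classes (“respiratory infection”, “clinical condition”), the disjointness rule, and the allergy name are assumptions I added so the example has something to reason over. This is nowhere near a clinical terminology, so please don’t treat patients with it.

```python
# is-a edges: child -> parent. Only the first entry is from the list above.
IS_A = {
    "myocardial infarction": "cardiac event",
    "cardiac event": "clinical condition",
    "pneumonia": "respiratory infection",
    "respiratory infection": "clinical condition",
}

# Assumed disjointness rule: nothing is both of these at once.
DISJOINT = [("cardiac event", "respiratory infection")]

# Aspirin and the allergies that rule it out; the allergy name is a placeholder.
CONTRAINDICATED = {"aspirin": {"aspirin allergy"}}


def ancestors(concept):
    """Walk the is-a chain upward and collect every superclass."""
    seen = []
    while concept in IS_A:
        concept = IS_A[concept]
        seen.append(concept)
    return seen


def is_a(concept, category):
    return category in ancestors(concept)


def check_claim(concept, category):
    """Classify a model's 'X is-a Y' claim as supported, rejected, or unknown."""
    if is_a(concept, category):
        return "supported"
    for a, b in DISJOINT:
        other = b if category == a else a if category == b else None
        if other and is_a(concept, other):
            return f"rejected: {concept} is-a {other}, which is disjoint from {category}"
    return "unknown"


def may_prescribe(drug, patient_allergies):
    """A drug is blocked when the patient has any allergy it is contraindicated for."""
    return not (CONTRAINDICATED.get(drug, set()) & set(patient_allergies))


print(check_claim("pneumonia", "cardiac event"))
print(may_prescribe("aspirin", ["aspirin allergy"]))
```

Note the third outcome. “Unknown” is what you get for a broken toe, and it is a far better answer than a confident guess.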

Ontologies are not old-school. They are the foundation.

The industry forgot about ontologies for ten years because generative AI felt like pure magic.

But now that AI is . .

- writing code

- executing actions

- managing workflows

- controlling machines

- making decisions

- interacting with other agents

…suddenly everyone remembers that magic without structure is just a synonym for catastrophe.

When an AI becomes agentic, ontologies aren’t optional anymore. They act as the guardrails that keep it from deleting the wrong database, booking flights to the wrong city, confusing euros with yen, issuing duplicate payments, sending sensitive data across borders, generating illegal recommendations, trampling regulatory boundaries, or randomly deciding to do something it has absolutely zero authority to do. Ontologies are the adult supervision generative AI keeps pretending it doesn’t need.
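A guardrail like that can be sketched in a few lines of Python. The example below checks three of the failure modes just listed (no authority, euros versus yen, duplicate payments) before an agent is allowed to act. The roles, the `Payment` type, and the `guard` function are all hypothetical names of mine, and a real system would pull these rules from the ontology rather than hard-code them.

```python
from dataclasses import dataclass

# Which action types each agent role may perform (hypothetical roles and actions).
AUTHORITY = {
    "finance_agent": {"issue_payment"},
    "support_agent": {"send_email"},
}


@dataclass(frozen=True)
class Payment:
    invoice_id: str
    amount: float
    currency: str


def guard(role, action, payment, invoice_currency, already_paid):
    """Return the reasons to block an action; an empty list means it may proceed."""
    reasons = []
    if action not in AUTHORITY.get(role, set()):
        reasons.append(f"{role} has no authority to {action}")
    if payment.currency != invoice_currency:
        reasons.append(f"currency mismatch: {payment.currency} vs {invoice_currency}")
    if payment.invoice_id in already_paid:
        reasons.append(f"duplicate payment for {payment.invoice_id}")
    return reasons


# The agent proposes paying a euro invoice in yen; the guard refuses and says why.
print(guard("finance_agent", "issue_payment", Payment("INV-1", 100.0, "JPY"), "EUR", set()))
```

The agent still proposes whatever it likes. It just doesn’t get to execute anything the rules reject.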

And the thing that will scare everyone reading this is that ontologies are man-made.

Yes.

Written down.

By us hoomans.

And I can hear you think, “But Marco, I have implemented an agentic AI system in my company, I haven’t worked with an ontology, and it still works. Please explain.”

Let me gently unfold the answer with all the tenderness of a brick through a window.

You never worked on ontologies for AI agents because…

. . nobody told you the grown-ups were doing that behind the scenes. Not even the “experts”.

Let’s drag the answer into the light.

It is because 99% of “AI agent projects” today are… toys

Most companies that are involved with agentic AI are still tinkering with toys, like an assistant that blindly clicks around a website, a little agent that fires off emails, an n8n flow pretending to “automate” a two-step workflow, or some hackathon Franken-demo held together with Power Automate and a bunch of optimism.

And none of this is enterprise-grade.

These are craft projects with delusions of grandeur, cute in the way a toddler with a plastic screwdriver thinks he’s an electrician. They don’t need ontologies because nothing serious is at stake. No money is on the line, no regulators are breathing down anyone’s neck, no systems depend on each other, nothing spans departments, and the whole thing doesn’t possess operational power.

It is amusing to watch. But it has zero impact.

But when your project goes beyond being a mere toy, and you need to automate the beating heart of your enterprise, like finance, legal, HR, procurement, operations – all wrapped in compliance frameworks, risk matrices, audit requirements, and real cashflow with real consequences – well, this ain’t the same sport.

Heck, it’s not even the same planet.

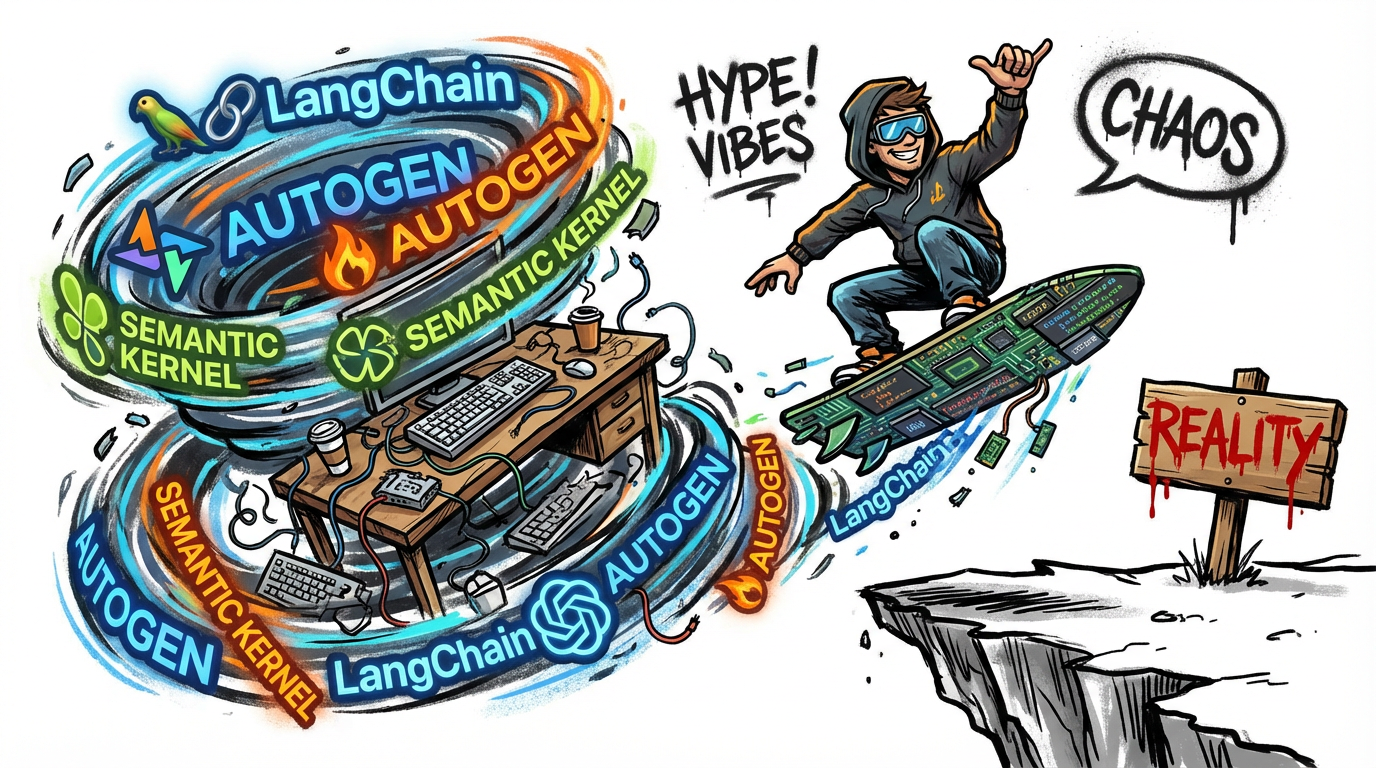

It is because the current agent hype is built on “LLM Gefühl”

Frameworks like LangChain, AutoGen, and Semantic Kernel all tell the same seductive lie that you “just tell the agent what to do and watch the magic unfold”, and, sure, that works fine if all you want is a douchy automaton who can spit out summaries, toss together a slide deck, wobble its way through a browser, or yank a bit of data out of some API.

But when you venture into real territory like finance approvals, legal workflows, sensitive employee data, procurement lifecycles, regulated business processes, cross-entity risk decisions, anything that even smells like EU AI Act high-risk classification, well, those “vibes” simply disappear. Instantly.

This is where the fantasy collapses and the hallucinations begin.

You never worked with ontologies because the ecosystem you were swimming in wasn’t built for meaning. It was built as a TikTok for developers, with beautiful dopamine-inducing output but zero discipline, no semantics, no structure, no accountability, and certainly no shared representation of reality.

Everything was peachy, but nothing was grounded.

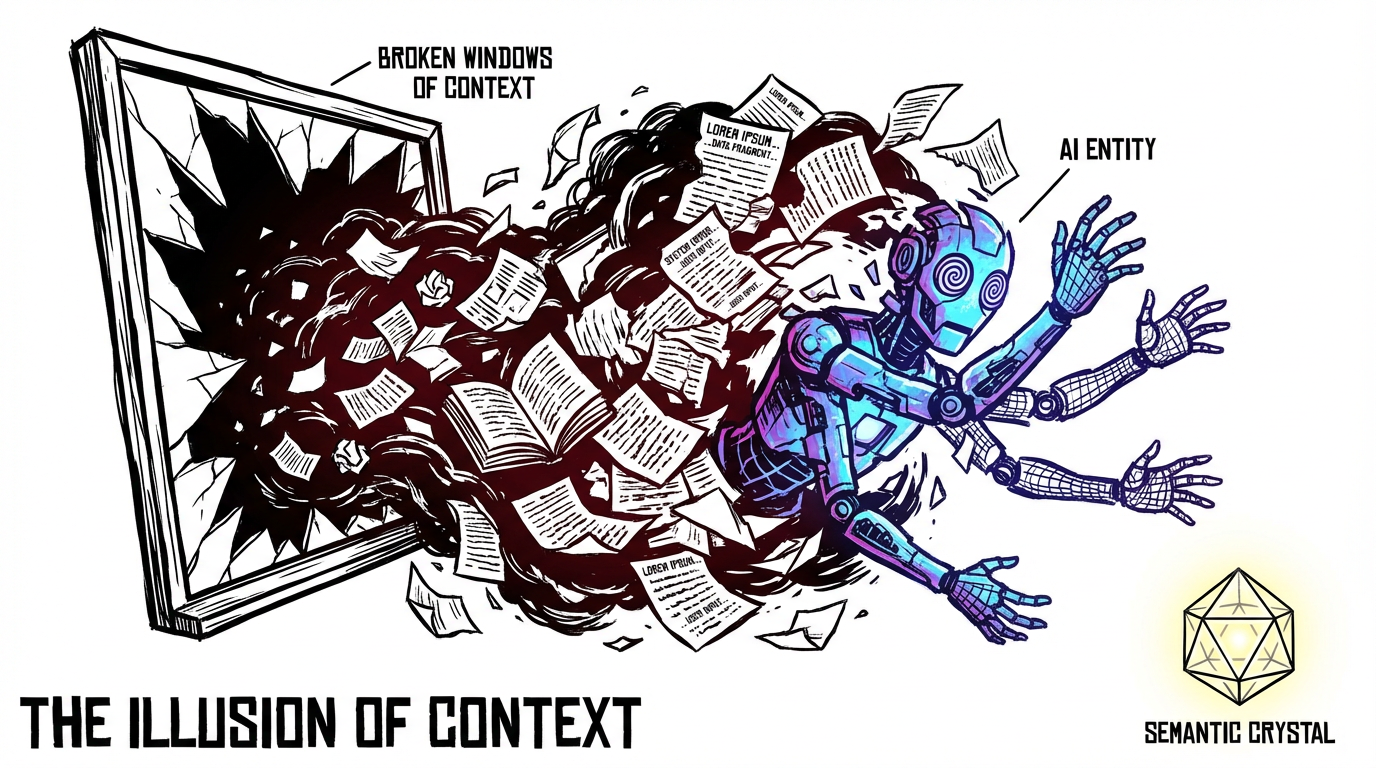

Because humans assumed “context windows” replace semantics

Humans fell for the delusion that “context windows” could replace semantics.

It’s one of the biggest scams in modern AI work . . . the fantasy that if you just paste in enough examples, the model will magically “understand everything.” That lie has burned through millions and left a trail of failed pilots behind it.

Large language models don’t recognize domain boundaries, don’t know what a legal definition is, don’t understand how entities relate, and don’t internalize compliance requirements, data governance constraints, enterprise duties, or approval chains. They operate purely on statistical echoes, which is tolerable for creative writing but catastrophic when real-world processes, money, legality, or accountability are involved.

Genuine automation needs structure, rules, and symbolic meaning – not good feelings hallucinated at scale.

Ontologies only become unavoidable once you push past the toy phase, when the number of automated processes climbs beyond fifty or a hundred, when work stretches across multiple business domains, when systems start talking to each other, when actions suddenly carry real risk, when audits matter, when reliability isn’t optional, when compliance becomes a living organism you have to appease, and when predictability is the only thing standing between you and operational chaos.

People were seduced into believing that LLMs magically perform semantic reasoning.

Modern AI projects built an entire illusion of competence. You ask the model, “What’s the approval workflow for an invoice?” and it spits out a confident answer. You assume it knows. But it doesn’t. It’s just stitching together patterns from public text, stray documents, partial hints, and raw guesswork that it presents as certainty.

The only reason you never bothered with ontologies is that these models tricked you into thinking they already understood your domain, that they could resolve ambiguity on the fly, that they wouldn’t invent relationships out of thin air, and that you could simply “tell” them the rules of the world and they’d internalize them.

You were led to believe meaning would just emerge organically instead of being engineered deliberately. And that illusion collapses when your agent performs an action with real financial or legal impact – the exact moment when hallucinated semantics turn into actual damage.

Because ontologies require real organizational maturity

What makes it worse is that ontologies demand a level of organizational maturity most companies simply don’t possess.

Building AI agents is the easy part. It is basically playtime with powerful autocomplete. But ontologies are different. They require domain architects who understand the business, semantic modelers who can formalize meaning, cross-functional input from people who never agree on anything, governance processes that enforce discipline, versioning structures, data stewards, approval workflows, semantic change management, risk officers, business analysts, and knowledge engineers who actually understand what the hell a knowledge graph is.

Ninety-five percent of companies don’t have these roles.

They barely have the patience.

So they reach for the simplest tools available – I call it the developer equivalent of crayons – and hope nobody notices that their AI “agents” are skating on a semantic ice sheet one millimeter thick.

They reach for low-code automators like Zapier and n8n and convince themselves they’re doing orchestration.

They duct-tape LangChain chains together like they’re assembling IKEA furniture without instructions. They use Semantic Kernel and AutoGen as if prompt wrappers are a substitute for knowledge representation. They build entire “AI agents” around Playwright, Puppeteer, Selenium, and other clickbots because it feels like software automation instead of what it is → a robot randomly poking at the screen.

They dump JSON into vector stores like Chroma, Pinecone, Weaviate, or FAISS and pretend embeddings are “enterprise memory”. They treat RAG like a religion and convince themselves that embeddings replace meaning.

They stuff domain rules into prompts and call it governance. They store half-baked concepts in spreadsheets, YAML files, GitHub comments, or random Notion pages. They “model” business semantics in Airtable as if it’s a knowledge graph. They build workflows in Replit and call it a platform. They let LLMs invent field names and relationships on the fly and call it “semantic inference.” And when something breaks, they slap more tokens, more examples, or a bigger context window on the problem like they’re applying duct tape to a rocket engine.

Why ontologies are returning

Agentic AI is moving from “generate text” to “make decisions”, but accuracy has plateaued. And hallucinations persist.

The next stage of AI maturity requires embedding structure, semantics, constraints, and domain logic back into the system.

And ontologies deliver that.

Ontologies give AI a factual backbone. They ground and verify what an LLM produces, letting a medical agent align answers with clinical truth or a financial agent reject nonsense outright. They also define the world an agent operates in – the entities, actions, consequences, rules, and constraints – and they turn chaotic token soup into an actual reasoning environment instead of a word generator.

LLMs give us fluency, while ontologies and knowledge graphs enforce truth. And because explainability is non-negotiable in any regulated domain, ontologies provide the breadcrumbs that show what the system did, why it did it, and whether it had any business doing it in the first place.
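Those breadcrumbs are less mystical than they sound. A minimal sketch, assuming the model’s answer has already been broken down into triples by some extraction step that is not shown: each claim is checked against a small fact graph (facts borrowed from the clinical example earlier), and every decision is written to a trace. The `verify` function and the trace format are my own invention for illustration.

```python
# Known facts as (subject, predicate, object), borrowed from the clinical example.
FACTS = {
    ("myocardial infarction", "is-a", "cardiac event"),
    ("cardiac event", "affects", "heart tissue"),
    ("aspirin", "treats", "thrombotic events"),
}


def verify(claims):
    """Split model claims into accepted and rejected, keeping a trace of each decision."""
    accepted, rejected, trace = [], [], []
    for claim in claims:
        if claim in FACTS:
            accepted.append(claim)
            trace.append(f"ACCEPT {claim}: matches a fact in the graph")
        else:
            rejected.append(claim)
            trace.append(f"REJECT {claim}: no supporting fact in the graph")
    return accepted, rejected, trace


# Two claims a model might produce; the second one is fluent nonsense.
claims = [
    ("aspirin", "treats", "thrombotic events"),
    ("aspirin", "treats", "broken toe"),
]
accepted, rejected, trace = verify(claims)
for line in trace:
    print(line)
```

One design choice worth flagging: this sketch rejects anything the graph doesn’t know, which is strict. Whether “not in the graph” should mean “false” or merely “unverified” is a decision your domain has to make, not your LLM.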

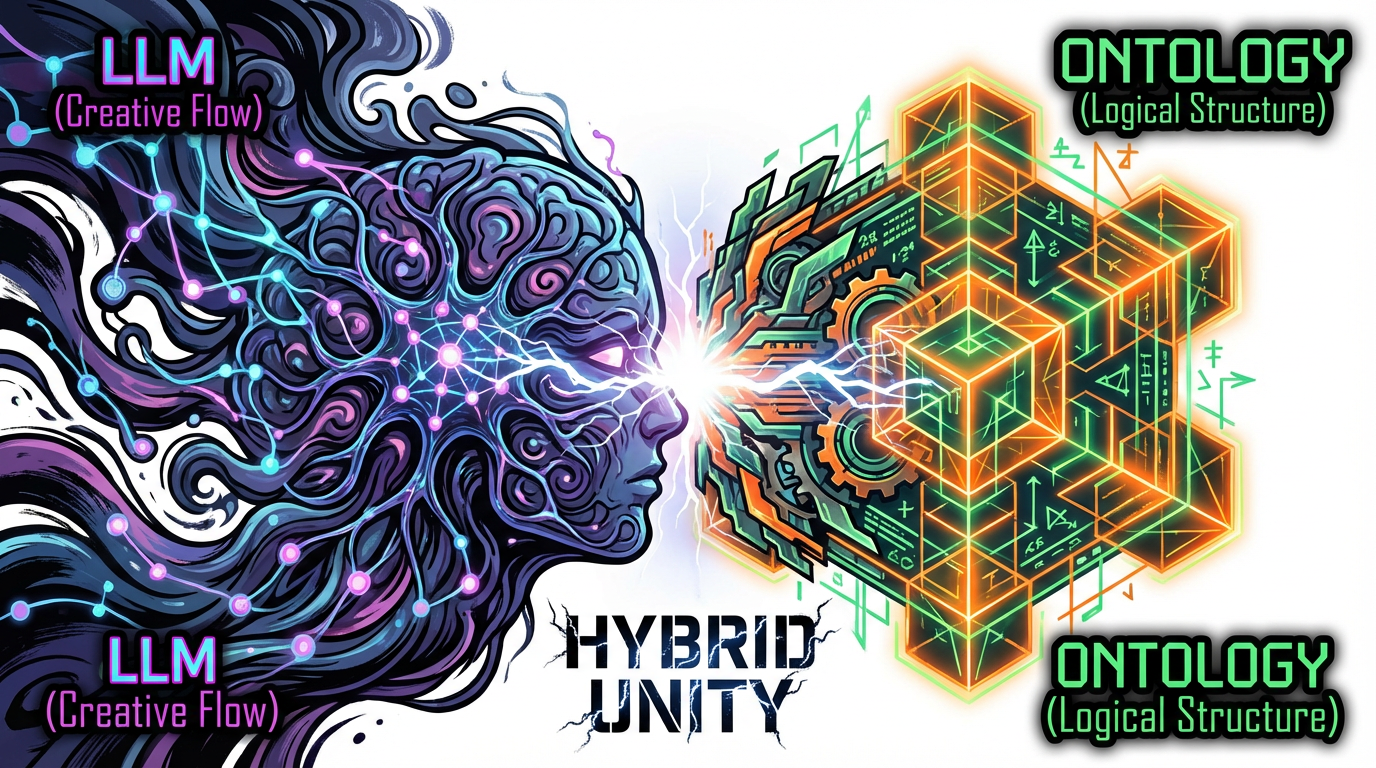

The future is neuro-symbolic AI

We are now entering a phase where two kinds of intelligence are going hand in hand. The neural side that generates ideas, and the symbolic side that understands structure and meaning.

That second part – the symbolic one – is what ontologies actually are.

An ontology is just a formal map of “what exists” in a business – the things, the relationships between them, and the rules that control what can and cannot happen. It’s a shared dictionary of reality that your AI agents can’t ignore. LLMs give you fluent output, and ontologies give that output shape, grounding, and guardrails.

One predicts, the other reasons.

In enterprise environments, your ontology strategy is your AI risk strategy.

Generative AI gave us models that talk. Agentic AI gives us systems that act. And ontologies make sure they act properly – and explain why they acted that way.

This isn’t old-school AI returning from the dead, if that’s what you were thinking. No, it’s the missing half of intelligence finally taking its rightful seat at the table.

Signing off (finally),

Sorry for the boring piece.

But if you’re serious about building enterprise-scale AI, you need to understand this.

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.
