It is Sunday morning. I woke up to the gentle sound of my phone screaming at me because I forgot to set it to Focus. It was just another one of those AI-generated newsletters, and this one pretended to care about my “creator journey”. I wiped the sleep crust off my face, opened my laptop, and was immediately slapped in the face by the same reality that’s been slouching toward Bethlehem since late 2022: the internet is now an orgy of synthetic write-ups and AI-generated memes. And buddy, if you thought the memes were getting weirder, the takes dumber, or the SEO blogs more spiritually hollow, well, welcome back to the Sloppiverse.

Welcome to Q4 2025, people! Half the internet is written by silicon, the other half is rewritten by it, and the tiny human remainder is either screaming their lungs out in the comments or trying to sell you a productivity template.

I first wrote about this mess back in 2024†, and now I figured, screw it, let me poke the corpse again and see whether we’re even more catastrophically doomed this time.

So, if you want to know the actual state of things, how royally screwed we are, well then, ready your psyche for emotional turbulence, because this is my roughly 2k-word guided tour through the increasingly rancid landscape documented in the Sloppiverse reports. You know, the ones that actually looked at real data instead of LinkedIn prophecies (like the deep analysis of the Europol misquote fiasco).

Let’s walk hand in hand through the data my Oompa Loompas have dug up for me on this topic.

†Read: The Sloppiverse is here, and what are the consequences for writing and speaking? | LinkedIn

More rants after the messages:

- Connect with me on LinkedIn 🙏

- Subscribe to TechTonic Shifts to get your daily dose of tech 📰

- Please comment, like or clap the article. Whatever you fancy.

The year the Internet stopped pretending to be human

I’ve been on the internet long enough to remember when content was forged by trembling human hands: deranged blog posts written in the middle of the night, sarcastic tweets sent from the toilet, and lovingly half-researched academic papers that somehow sailed past the reviewers. Then ChatGPT showed up in late 2022 and began spitting out text, and by 2024 the machines were already generating about half of the new stuff.

Not metaphorically. Not aesthetically. Not “oh wow, this looks like AI”. I’m talking quantifiable, statistically offensive numbers.

Take the Graphite study, which analyzed 65,000 URLs across CommonCrawl and found that somewhere around November 2024, AI had crossed the 50% event horizon. This means the machines are generating more new web articles than humans ever could or would again, unless Pervitin suddenly becomes prescription-grade again and free at the point of care (it prolly won’t).

And the growth wasn’t cute. It was exponential. Unhinged exponential, even. Like, “my Wiener (dog) ate a kilo of gummy bears and is now screaming in Esperanto” kinda exponential.

And those poor academics kept trying to measure the ruin.

One researcher even had the audacity to comb through the linguistic fingerprints of AI output and discovered that usage of the dead-giveaway phrase “delve into”, ChatGPT’s favorite harbinger of doom, had doubled across the open web in February 2024 alone. From that, he concluded that up to 40% of the text on active web pages was already machine-made by the time Q2 rolled in.
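The “delve into” trick is a marker-word estimate, and the arithmetic behind this family of estimates fits in a few lines. A minimal sketch, assuming a simple two-source mixture: if a marker shows up at rate h per million words in human text and at rate a in AI text, then an observed rate r implies an AI share of roughly (r - h) / (a - h). This is a generic illustration, not necessarily Spennemann’s exact method, and the function names and example rates are hypothetical.

```python
def rate_per_million(text: str, phrase: str) -> float:
    """Occurrences of `phrase` per million words of `text` (case-insensitive)."""
    lowered = text.lower()
    words = lowered.split()
    if not words:
        return 0.0
    return lowered.count(phrase.lower()) * 1_000_000 / len(words)


def estimate_ai_share(observed_rate: float, human_rate: float, ai_rate: float) -> float:
    """Solve observed = p * ai_rate + (1 - p) * human_rate for p.

    Assumes the marker is more common in AI text than in human text.
    The result is clamped to [0, 1] because noisy rates can push it out of range.
    """
    if ai_rate <= human_rate:
        raise ValueError("ai_rate must exceed human_rate for the marker to be informative")
    p = (observed_rate - human_rate) / (ai_rate - human_rate)
    return min(1.0, max(0.0, p))


# Hypothetical rates, purely for illustration (not taken from any study):
print(rate_per_million("Let us delve into the data", "delve into"))  # 1 hit in 6 words
print(estimate_ai_share(observed_rate=11.0, human_rate=2.0, ai_rate=20.0))  # → 0.5
```

The weak spot is obvious: the whole estimate hinges on knowing h and a, and humans who read too much ChatGPT start saying “delve” too.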

If you’ve ever read something online in the last eighteen months and thought, “this feels like lukewarm oatmeal wearing a tie”, then yes, that was the robots. And yes, they are proud of themselves.

The great Europol lie and the mythical 90%

Let’s clear up something that keeps coming back from the dead like a zombie with a LinkedIn Learning certificate, which is the infamous claim that Europol predicted 90% of online content would be AI-generated by 2026.

Well, in short, they didn’t.

Not even close.

A whole community went nuclear on this nonsense. A bunch of people armed with a Statistics 101 for Dummies course dissected the Europol report and found exactly zero mentions of “90%”, “2026”, or anything resembling a prophecy about synthetic content domination.

Now, where did that lie come from?

Some guy. Literally. It was the CEO of the avatar company Synthesia, Victor Riparbelli, a guy with more followers than he could apparently handle, who fired off a LinkedIn post saying 90% of the internet would be AI-generated “in a few years”. That was it. One CEO manifesting chaos on LinkedIn, and that’s all it took for the news cycle to eat itself like a depressed ouroboros.

But by the time folks with a bit of a brain figured out that Europol never said this at all, the myth had already grown legs, bought shoes, and was halfway to Brussels demanding EU subsidies.

Humans really shouldn’t be allowed to quote each other.

The internet is a compost heap

In 2024, the Sloppiverse was no longer some “future scenario”. It wasn’t even a warning anymore. It was the water that we were swimming in, and we were swallowing mouthfuls of at-scale linguistic fertilizer.

Across the datasets my Loompas compiled, the shift is visible.

- AI-generated content estimates range from 50% to 57%, depending on methodology and scope, from full-web sampling (Copyleaks, 2024) to new-content analyses (Graphite, 2024–2025).

- Newly published text content now stands at approximately 30–50% AI-generated, with certain high-volume publishing ecosystems exceeding these averages.

- Educational environments documented a 76% year-over-year increase in AI-generated submissions.

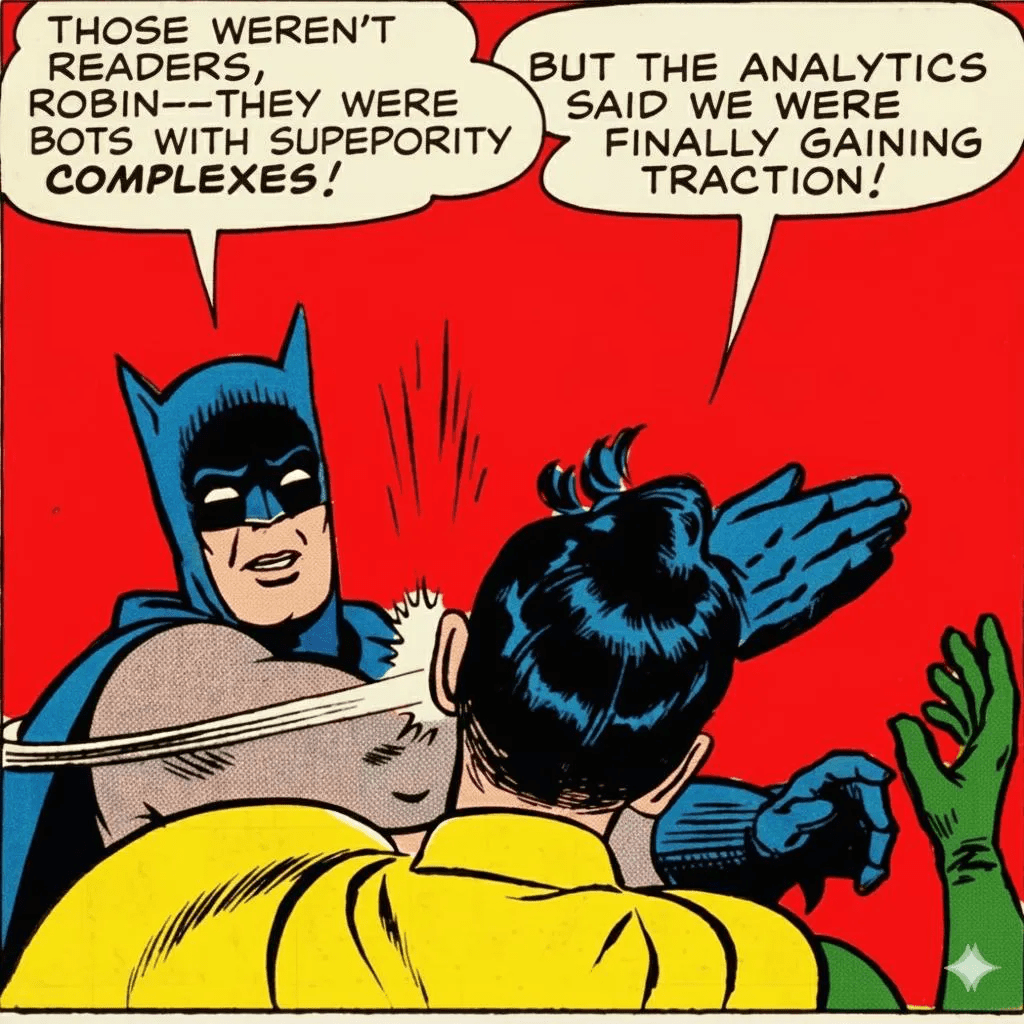

- Bot-driven activity accounted for 51% of total internet traffic in 2024 (Imperva).

The conclusion is the same across all datasets. In 2024, synthetic content had already settled into the foundations of the internet. The Sloppiverse is no longer a temporary distortion or a transitional phase.

It is now the baseline environment.

And of course in 2025 there are new studies again, and all of them are politely reconfirming that the internet didn’t just fall into the compost heap, but that it built a summer home there and started breeding new species of synthetic nonsense for us to choke on.

The first 2025 hit came from the Harvard Journal of Law & Technology, which in March published a piece called “Model Collapse and the Right to Uncontaminated Human Data”. Man, that’s a title so dry it exfoliates my soul, but circus aside, it hit me like a tax auditor at year-end. Their point is that clean, human-written data from before 2022 has basically become an endangered species, and whoever owns that old-school language stash gets an advantage no startup can ever climb over.

They even compared natural human language to low-background steel, which is a metaphor you only invent after staring at corrupted datasets until your will to live evaporates.

Then the Ada Lovelace Institute (a name more befitting an OF ‘star’) shuffled in during September with their “Synthetic Data, Real Harm” study, which is British for “the internet is on fire and we’re too polite to shout”. And in it, they explained how synthetic goo crawls into training pipelines like damp creeping up the walls of my basement annex home-lab, where it is slowly ruining everything while everyone pretends they don’t notice the smell of decay.

In November this year, UC San Diego pulled the fire alarm with another brilliant title, “Preventing Model Collapse in the Synthetic-Data Era”, and I must say, after “consulting” it (read: pasting it into ChatGPT and checking for hallucinations), this report reads like someone waving both arms while the building burns behind them. In it, they spell out with painful, kindergarten-friendly clarity that AI-generated sludge is flooding the public web, that future models will slurp it up during web scraping whether they like it or not, and that recursive contamination will soon turn language models into AI echo chambers mumbling into the void unless somebody does something about it. Which we won’t. End of story.

And the platforms did exactly what big platforms always do when something is on fire behind the scenes. They kept a friendly smile on the homepage and pretended everything was perfectly normal while synthetic content quietly spread through their systems like mold in my damp basement annex home-lab. One of the clearest examples came from Originality.AI (a company that scans text to see whether it was written by a human or a model). Their 2024 analysis showed that LinkedIn had already turned into something resembling a corporate greeting-card factory, where 54 percent of long-form posts were likely AI-generated: not written by people, but produced by models force-fed PowerPoint slides and leadership workshops.

In 2025, Google Reviews hit 19 percent AI content, which is why every kebab shop now sounds like a Michelin critic in witness protection.

And in academic publishing, between 14 and 17.5 percent of papers now include AI-written bits, which is funny because professors used to complain about lazy undergrads, and now they’re wrestling with their own chatbot for authorship. Ha!

By the end of 2025, nobody wanted to admit that the internet’s nutritional value had collapsed, and researchers were basically carbon-dating its sediment layers for future archaeologists, who will dig this stuff up and ask why humans stopped writing and let the machines take over.

Why measuring slop is harder than it sounds

When you read the reports, you notice the percentage estimates are all over the place. That isn’t because researchers are incompetent. It’s because no two studies measure the same thing.

Some measure:

- Newly published articles

- Active web text

- All indexed pages

- Traffic, not content

- AI assistance vs AI generation

- Keyword biomarkers like “delve into”

- Detection models that hallucinate harder than the outputs they’re detecting

For instance, the Copyleaks report found 1.57% AI-generated content because they scanned all pages, including the millions of abandoned blog corpses still floating around from 1998. Graphite found 50% because they only measured new articles, and new content is built by machines now. And Spennemann found 30-40% because he tracked linguistic fingerprints, the greasy little phrases models leave embedded in the text, instead of relying on detection models.
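To see how one web can produce both 1.57% and 50%, here is the denominator problem in miniature. The corpus is entirely made up (10,000 hypothetical pages; none of these counts come from Copyleaks or Graphite), the `Page` class and `ai_share` function are illustrative names, and the sketch assumes every page already carries a correct AI/human label, which is precisely the part real detectors get wrong.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Page:
    year: int           # publication year
    ai_generated: bool  # assumed ground-truth label (real studies only have detector guesses)


def ai_share(pages: list[Page], since_year: Optional[int] = None) -> float:
    """Fraction of AI-labeled pages, optionally counting only pages published from since_year on."""
    pool = [p for p in pages if since_year is None or p.year >= since_year]
    if not pool:
        return 0.0
    return sum(p.ai_generated for p in pool) / len(pool)


# Hypothetical web: 9,000 old human pages plus 1,000 new pages, half of them synthetic.
corpus = (
    [Page(year=2010, ai_generated=False) for _ in range(9_000)]
    + [Page(year=2024, ai_generated=True) for _ in range(500)]
    + [Page(year=2024, ai_generated=False) for _ in range(500)]
)

print(ai_share(corpus))                   # all indexed pages → 0.05
print(ai_share(corpus, since_year=2024))  # new pages only    → 0.5
```

Same pages, same labels, a tenfold difference, purely from choosing what goes in the denominator.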

Measuring AI-generated content is like measuring how many people at a festival are on drugs. The answer is always “Well… it depends what you count as drugs”.

Same with slop.

Same with the Sloppiverse.

But for the record, it’s safe to say that the share of slop in new content stayed roughly flat in 2025, hovering above 50%.

Everything is beige

Ever notice how every website and YouTube video now sounds like a middle manager trying to impress their VP? Yeah, that’s because the machines learned their writing style from us: our LinkedIn hoopla, our zombie blogs written for SEO, and our startup pitches written at gunpoint by investors.

Then we trained them on that content.

Then they generated more content.

Then we used that content to train new models.

Then everything turned into linguistic beige.

Academics call this an “autophagous loop”, or an “ouroboros”, but I call it what it really is: linguistic inbreeding, and the results are exactly as horrifying as the phrase suggests.
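The four “Then…” steps have a classic toy version in the model-collapse literature: fit a simple model to data, sample from it, fit the next model only on those samples, repeat. A minimal sketch, assuming a one-dimensional Gaussian stands in for a language model (it very much isn’t one); the function name and parameters are hypothetical. With small samples, each refit tends to underestimate the spread a little, and over many generations the distribution shrinks toward a single beige point.

```python
import random
import statistics


def self_consuming_loop(generations: int = 200, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Toy autophagous loop: every generation is fitted only on the previous generation's output.

    Returns the fitted standard deviation ("spread") of each generation.
    """
    rng = random.Random(seed)
    # Generation 0: "human" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = []
    for _ in range(generations):
        # "Train" a model: maximum-likelihood mean and standard deviation.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        spreads.append(sigma)
        # The next generation sees only synthetic samples from the fitted model.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
    return spreads


spreads = self_consuming_loop()
print(f"generation 1 spread:   {spreads[0]:.3f}")
print(f"generation 200 spread: {spreads[-1]:.3e}")
```

Photocopies of photocopies, in twenty-odd lines: nothing in the loop adds fresh human data, so the lost variety never comes back.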

In a Harvard Business Review piece about model collapse, the author argued that LLMs drowning in their own recycled outputs will eventually degrade like photocopies of photocopies of photocopies, producing text that reads like IKEA assembly instructions written during a nervous breakdown (or something along those lines).

And if you think that sounds dramatic, consider this: even Google Search is desperately trying to hide AI-generated articles, and its ranking systems treat them like radioactive waste. OK, at the same time Google now offers AI Mode, which generates AI slop on the fly, but that’s a whole different topic. The Graphite study data confirms it: AI articles flood the web but barely appear in search results or in ChatGPT responses (very meta, I know).

It’s everywhere and nowhere at once.

Like glitter.

Or herpes.

‡ Read: More than 50% of the internet is AI generated and causes model collapse | LinkedIn

The future is sloppier, heavier, weirder

When you look at all the research from 2024 and 2025, from the content audits, the platform reports, the synthetic-data warnings, to the academic meltdown charts, it becomes pretty obvious where things are heading. The slop is scaling, and the machines will generate most of what we read, watch, and believe.

Here’s what the data actually points to.

Near-term (2025–2026)

This is the phase where the numbers start looking embarrassing for humanity.

- New written content will likely hit 60–70% AI-generated, based on the 2024 slope and the 2025 research showing model-assisted writing is already the default.

- Overall internet sludge creeps toward 10%, which sounds small until you remember that the denominator includes every page made since the 90s.

- Education basically leans into the chaos, because students stopped writing essays in 2023 and nobody can keep up with the detection arms race.

- Synthetic images and videos are becoming so realistic that family group chats turn into crime scenes, because everyone starts accusing innocent relatives of being deepfakes.

Medium-term (2027–2030)

This is where things break out of their text environments and spill into everything else.

- Multimodal generation becomes the norm, meaning models speak, animate, and remix themselves endlessly.

- Most video pipelines shift to AI-first, because it’s cheaper than hiring camera crews, editors, motion designers, and all the humans who eat lunch on set.

- Everything online starts to look slightly uncanny, like TikToks generated by a bored ghost who doesn’t understand human faces but tries anyway.

- The Sloppiverse becomes the default environment, not the problem. The internet simply is a machine-generated soup, and human output becomes the rare organic ingredient floating somewhere in the broth.

- Because no human wants to wade through slop all day, machines take over the job: your AI assistant‡. You will live inside your assistant and stop touching other apps. But people still need a way to experience content, so new models arrive: either interfaces built on generative UI¢, or a completely new internet†.

Long-term (2030+)

By the 2030s, the whole distinction between AI content and human content goes kapoof.

- The phrase “AI-generated” won’t even mean a thing, because the baseline assumption will be “Yes, a model made this. Who else?”

- Humans become artisanal creators, like hipster blacksmiths who insist on “hand-crafted text”, and they’re selling blog posts the way people sell stuff on Etsy.

† Read: The Internet is drowning but there’s a life raft | LinkedIn ‡ Read: Rise of the machine customers | LinkedIn + Read: Prepare for the “AI-first” businesses. | LinkedIn ¢ Read: Is generative UI the buzzword that snuffs designers? | LinkedIn

So where do we go now?

Our future is the machine-internet. Multimodal models become the new contractors of reality, and they write, speak, animate, gesture, and remix everything with the confidence of a creator who has never met a human but has seen plenty of Shutterstock. Video production collapses into AI-first pipelines because AI-generated video finally wins the war against film crews, and the entire web starts to look a bit haunted, like a ghost who vaguely remembers what faces look like.

And because no one wants to wade through that soup with bare hands, people retreat into their AI-assistants. You do not “browse” anymore, but instead you let your model fetch, filter, and fix the slop for you. Your assistant becomes the front door to the internet, the only sane layer between you and the compost heap. And that shift forces a new kind of interface to emerge, the generative UI systems that rebuild the web on the fly, creating personalized layouts and machine-shaped experiences.

And behind that, a second possibility grows, that of a completely new internet built to survive synthetic contamination, a network designed for humans and machines together because the old web drowned in its own output and couldn’t save itself.

That’s what I think.

Now, signing off at last.

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.

👉 Think a friend would enjoy this too? Share the newsletter and let them join the conversation. LinkedIn, Google and the AI engines appreciate your likes by making my articles available to more readers.

To keep you doomscrolling 👇

- I may have found a solution to Vibe Coding’s technical debt problem | LinkedIn

- Shadow AI isn’t rebellion it’s office survival | LinkedIn

- Macrohard is Musk’s middle finger to Microsoft | LinkedIn

- We are in the midst of an incremental apocalypse and only the 1% are prepared | LinkedIn

- Did ChatGPT actually steal your job? (Including job risk-assessment tool) | LinkedIn

- Living in the post-human economy | LinkedIn

- Vibe Coding is gonna spawn the most braindead software generation ever | LinkedIn

- Workslop is the new office plague | LinkedIn

- The funniest comments ever left in source code | LinkedIn

- The Sloppiverse is here, and what are the consequences for writing and speaking? | LinkedIn

- OpenAI finally confesses their bots are chronic liars | LinkedIn

- Money, the final frontier. . . | LinkedIn

- Kickstarter exposed. The ultimate honeytrap for investors | LinkedIn

- China’s AI+ plan and the Manus middle finger | LinkedIn

- Autopsy of an algorithm – Is building an audience still worth it these days? | LinkedIn

- AI is screwing with your résumé and you’re letting it happen | LinkedIn

- Oops! I did it again. . . | LinkedIn

- Palantir turns your life into a spreadsheet | LinkedIn

- Another nail in the coffin – AI’s not ‘reasoning’ at all | LinkedIn

- How AI went from miracle to bubble. An interactive timeline | LinkedIn

- The day vibe coding jobs got real and half the dev world cried into their keyboards | LinkedIn

- The Buy Now – Cry Later company learns about karma | LinkedIn
