There’s a smell that you’ll never forget – it’s the scent of death, and then there’s this peculiar combination of ozone, overheated GPUs, mixed with investor desperation. Yup, I’ve just described the scent of an AI data center, just before another batch of 40,000 NVIDIA H100 GPUs decides to reenact the theme song from Chernobyl, the Dell blade-server version.

Because in our day ‘n age, everybody I know is chasing “the future”, which, judging by the electricity bill, looks a lot like they’re trying to light up Vegas, just so your AI can hallucinate a picture of your Weiner (dog), but with two butts, and call it disruption.

If you want to catch up on AI’s energy use, read this stuff first: Your email Just murdered a polar bear | LinkedIn

Cause I won’t be talking about it in here.

Between 2023 and 2025, every boardroom discovered a new spiritual practice called AI Infrastructure. Yes, my dear overzealous friend, the AI runs on plumbing, not miracles.

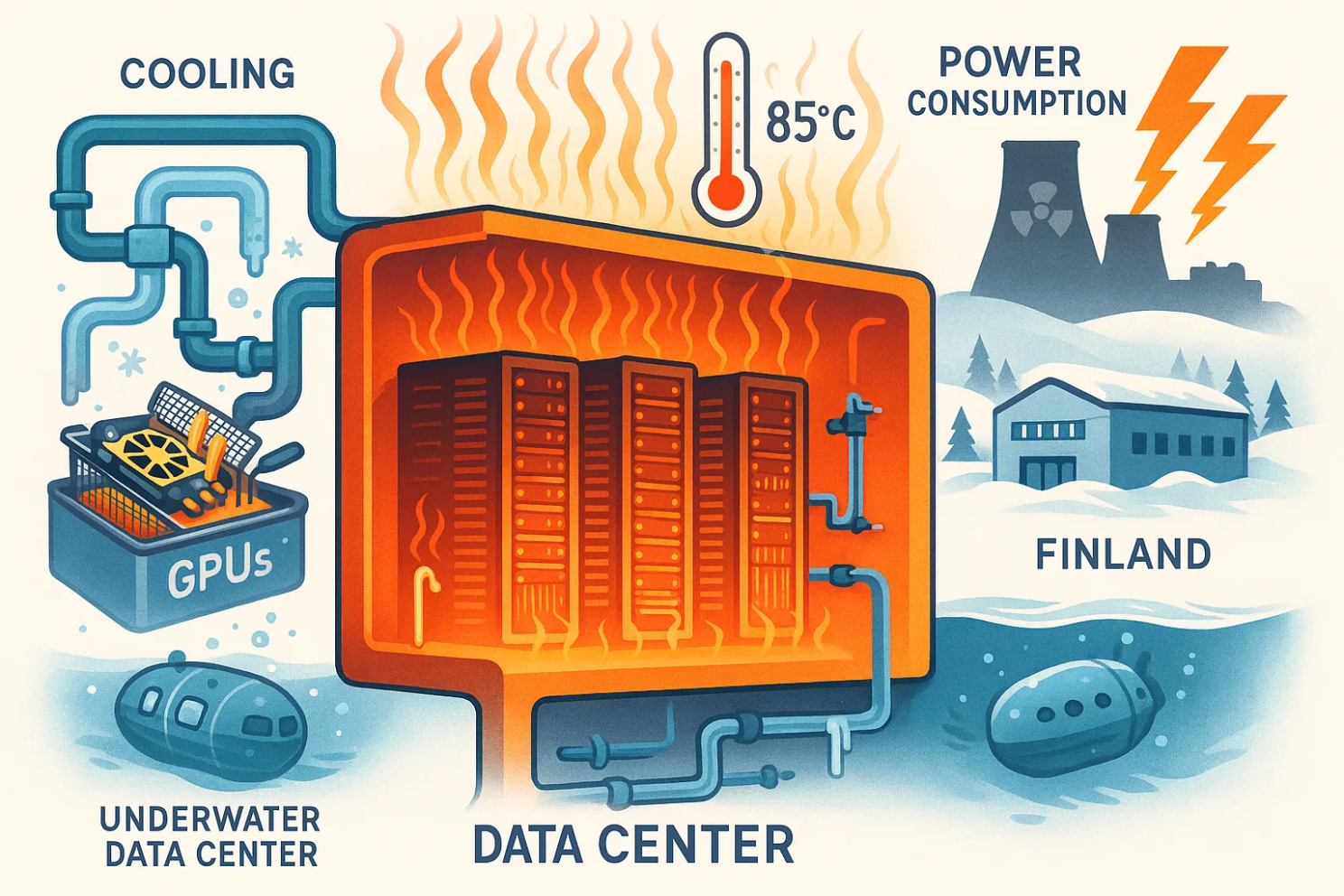

It starts with miles of fiber arteries pumping terabytes through racks that suck more power than Bonnie Blue. It has glycol veins snaking through cold plates to keep $30,000 GPUs from melting, and diesel-fed hearts humming in concrete bunkers thick enough to survive their own cooling failures.

A data center is a nervous system of sensors that screams in the middle of the night when the coolant hits 36 °C, and a digestive tract of routers, transformers, and sysadmins shoveling a pentillion gazillion electrons into oblivion just so the algorithm can “think” for half a second. It is not magic, dear reader. A data center is a billion-dollar espresso machine that pees heat, eats capital, and needs a plumber with a PhD.

And this is what I’d like to talk about today. Not that it is the most interesting topic I’ve ever covered, but I thought it would be cool to do a little deep dive into the economics of running one.

Yes, two boring flies in one strike, data centers and economics.

Now why would that be interesting for us to stick around for another 10 minutes minimum, my dear Marco?

Good question!

Well, because that whole monstrosity is the AI brain’s body, with the pipes, pumps, and fluids that keep the thinking goo from frying itself. Our own skull-meat needs blood, a pulse, and temperature control, and it can only operate within a narrow comfort zone between “alive” and “toast” – 35 to 39 °C. In the same way, this machine mind needs its own veins, heart, and climate so it can stay coherent for another five minutes, or however long it takes for your cat video to generate.

And NVIDIA meanwhile, is grinning like a casino boss.

Because every hyperscaler, the likes of Microsoft, Google, Meta, and Amazon, is doubling down on GPU hoarding this year, and at the same time their CFOs are Googling “how long does it take for hardware to become tax write-off dust?”.

Yes, my friend, this here blog is about the fact that AI data centers are economically unviable. They don’t produce profit. Every GPU is thrown in the furnace called progress, and every megawatt-hour is nothing more than fiscal denial.

Why? What are the consequences?

Read on (TL;DR is down below, where it should be)

More rants after the messages:

- Connect with me on LinkedIn 🙏

- Subscribe to TechTonic Shifts to get your daily dose of tech 📰

- Please comment, like or clap the article. Whatever you fancy.

Every data center is a sauna

Let’s talk about heat.

Not metaphorical heat, I mean actual, planet-toaster-grade thermodynamics.

Behind every AI there’s a room full of screeching metal running at 85°C (do convert to F yourself, please, I’m Euro-trash) where they’re slurping down megawatts like we did as frat boys at Oktoberfest, Munich.

Data centers used to be boring. Gray racks, polite little fans, and an occasional outage if someone sneezed near the router. Then AI came along and turned them into high-end crematoriums for . . . money.

A single GPT-scale training run now consumes enough electricity to power a European country, like say Luxembourg – or at least enough to make them jealous.

And for what?

So your model can summarize an email you didn’t want to read anyway. And the cooling systems, meanwhile, are losing their minds. They cannot dissipate heat fast enough, so we went from “air-cooled” to “liquid-cooled” to “holy water from the Alps kinda cooled”.

Yup, engineers started dunking GPUs in fluorocarbon soup like they were deep-frying ’em.

But even that wasn’t enough. Microsoft literally started building data centers under the ocean (sic!), and Google stuck them in Finland for the free winter air. Noel Skum considered moving his to the moon (don’t laugh – give him a year).

But it’s the economics that really sizzle.

A single rack of high-end Nvidia H100s pulls around 40–50 kW of power. Multiply that by 10,000 racks for a hyperscale cluster, add cooling overhead, and you’re pushing well past half a gigawatt.

That’s in the same league as a nuclear reactor’s output, minus the efficiency, plus the planetary destruction.

And still, some CFO signs off on it, thinking “AI is the new oil”.

Yeah, but at least oil only gets burned once.

Compute burns continuously. It’s the world’s first subscription-based energy loss model.

And the irony is that half these companies market themselves as “AI for sustainability”. Yeah, right, sustainability in the same way a volcano sustains geology.

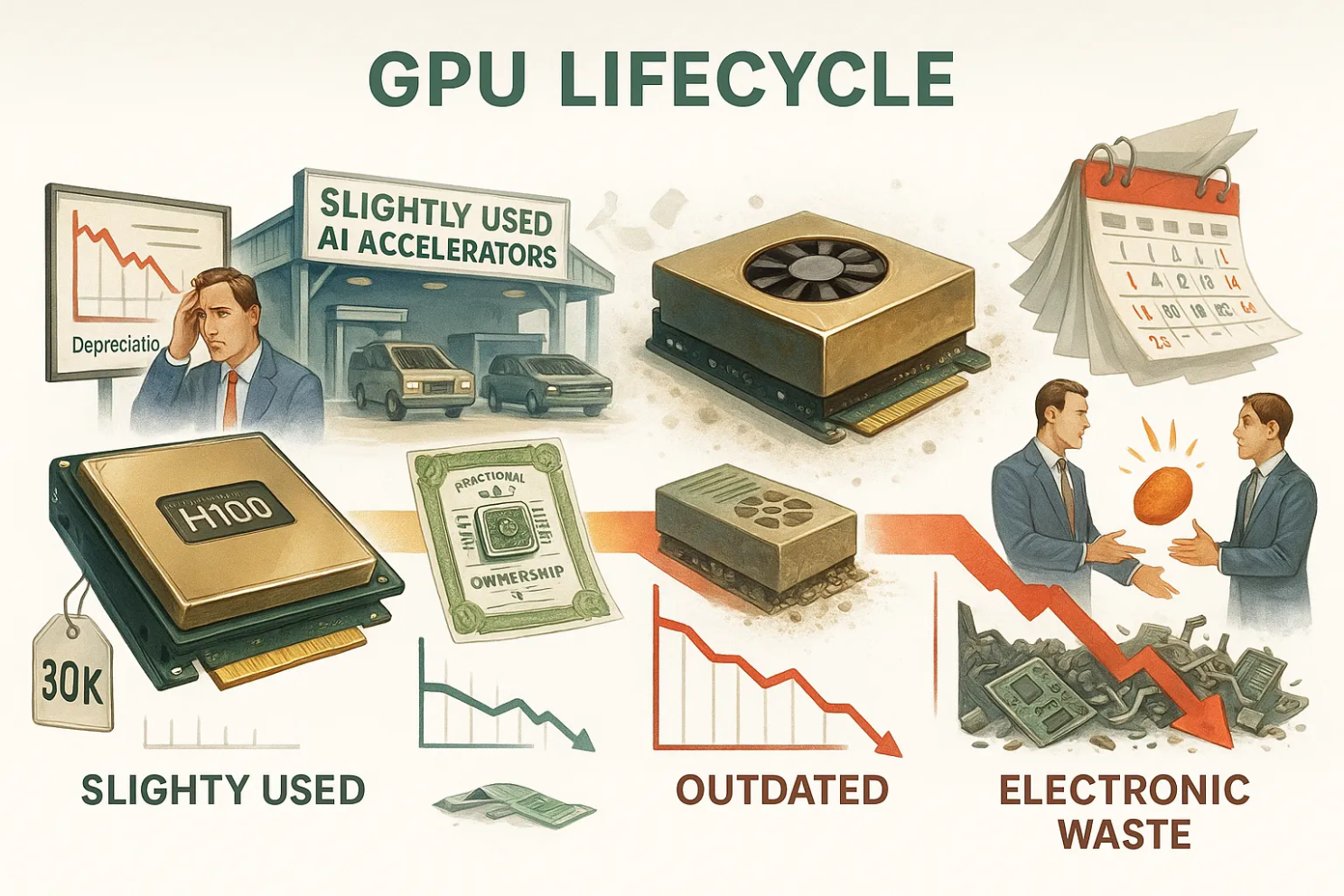

Hardware depreciation is the new inflation

If you think the AI power bills are bad, wait until you meet depreciation. Yup, GPUs age faster than my faith in the Western economy, and it smells about the same when they die.

Nah, what I meant to say is they age faster than the developments in the market.

A top-tier GPU, say, again, an H100, is priced around $30K per unit and this one will be outdated before your next product roadmap meeting, so, by the time you’ve finished amortizing it, Nvidia has already shipped the next generation, which is twice as fast, and three times as expensive.

The depreciation cycle used to be a dull accounting note stating “hardware loses value over time”.

Now it’s a freaking financial horror story.

Data centers built in 2023 are writing down assets in 2025 at 40% below book value, because your next-gen AI infrastructure is already last-gen by the time your data center has it installed.

But instead of accepting defeat, companies have invented some creative finance voodoo.

They are now turning to leasing, hardware-as-a-service, “compute futures”, and they’re all saying, “we’re renting our bankruptcy”. Some even started offering fractional GPU ownership! No kidding.

Hahahaha, as if buying half a graphics card makes you half as broke.

And the CFOs are sitting there, their spreadsheets trembling, and playing hot potato with capital expenses, and they’re hoping to dump the GPUs on someone else before the next accounting quarter.

And don’t forget the resellers.

There’s now a shadow market for “slightly used AI accelerators”, and it’s full of companies selling “lightly trained” hardware: GPUs that have already spent 10,000 hours hallucinating your PowerPoint slides for the board.

Tip: I think there should be a marketplace for them, with listings like they’re used car ads “One careful owner, only trained one foundation model, minor overheating, runs like new (on Wednesdays)”.

This is an industry where the product lifespan is shorter than the hype cycle.

Cloud lords, rent-seeking, and the myth of Infinite Compute

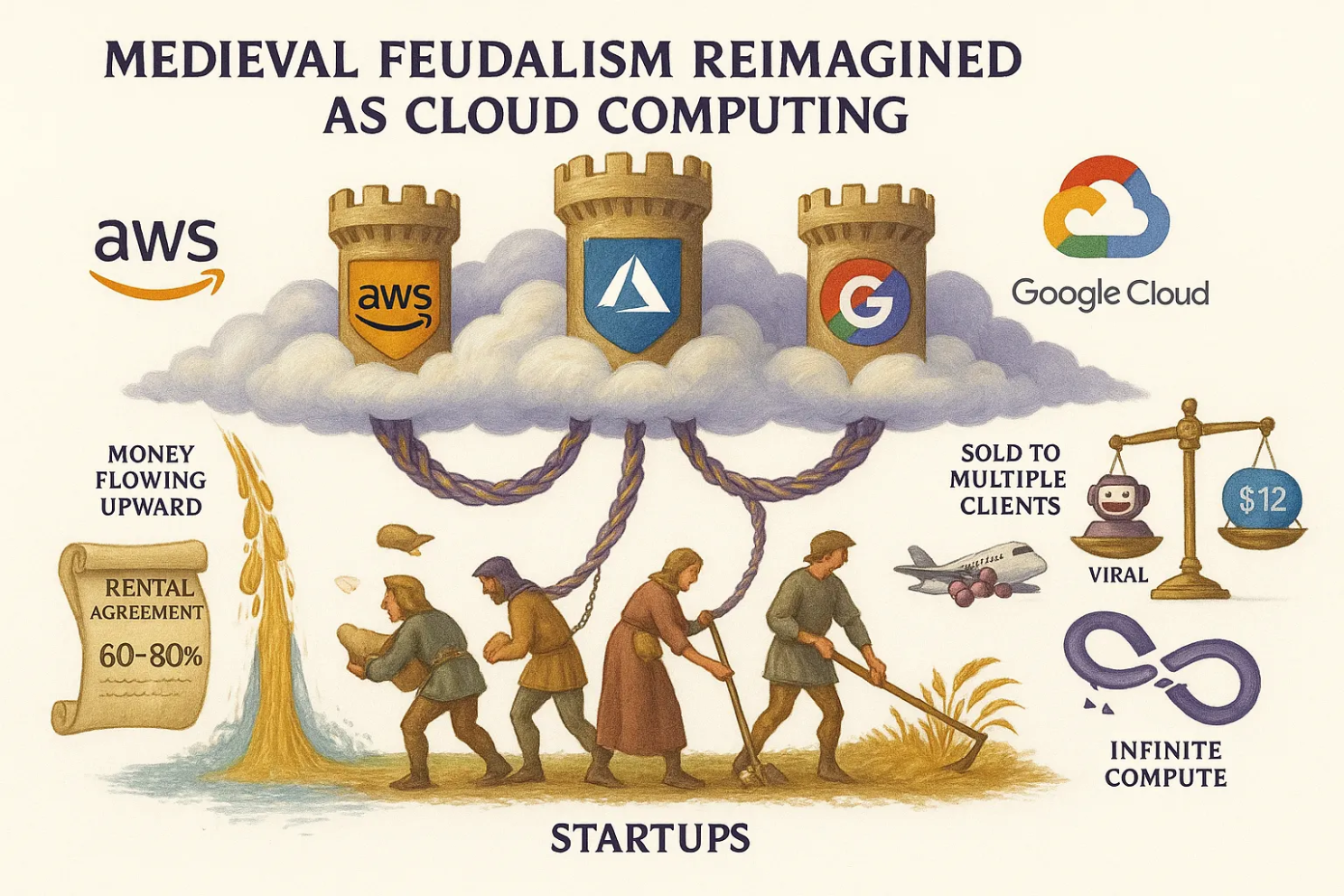

Ah, the cloud, our new religion of convenience, and also the scam of the century, and moreover the reason every CFO now has night sweats.

Remember when cloud computing was supposed to save you money?

Yup that was the promise back then.

Then came AI, and suddenly the same cloud vendors started charging you like you’re renting the moon. You can practically hear Bezos and Nadella laughing from orbit. The ugly secret is that the only profitable part of the AI industry right now is the infrastructure layer, and only for the few who own it.

A startup today will spend 60–80% of its burn rate on cloud costs.

Every inference, fine-tuning run, and every “AI-enabled” feature is a donation to Microsoft Azure’s bonus pool, and yet, somehow, we have taught ourselves that the “cloud-first” model is the future.

It’s not. It’s feudalism, but with a lot better branding. AWS, Google, and Microsoft are the new landlords. They lease you compute, which turns you into their serf, and they give you just enough to build your AI product, then throttle your margins until you cry uncle.

And still, the myth of infinite compute persists.

“Scale up!” they said. “Demand is infinite!”

Yeah, and so are your losses.

Lemme paint the picture:

A founder builds a chatbot using GPT APIs. It costs $0.01 per query.

The model goes viral. Ten million queries later, the bill hits $100K.

His revenue is $12 in ad clicks. Okay, fine, let’s be fair and run real numbers. Revenue per click is somewhere between $0.10 and $0.50, and if your audience is in Tier-1 countries (US, UK, Germany), you might touch $0.60–$0.80/click on a good day with strong engagement.

If it’s global or mixed, expect $0.05–$0.20/click.

Say your viral chatbot serves 10 million queries, each showing an ad.

Let’s assume that the CTR = 0.5% (which is generous for a chatbot interface)

Clicks = 10,000,000 × 0.005 = 50,000 clicks

Revenue per click = $0.20 (a blended figure between the two bands)

Then your ad revenue = 50,000 × $0.20 = $10,000

Your OpenAI bill was $100,000.

Yup. You just lost $90,000 to subsidize GPU heat.
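
If you’d rather poke at those numbers yourself, here is a minimal sketch of the same arithmetic. The function and field names are mine, purely for illustration, and the inputs are the example’s own figures ($0.01 per query, 0.5% CTR, $0.20 per click).

```python
# Viral-chatbot unit economics with the example's numbers.
# Names are illustrative only; nothing here comes from a real billing API.

def chatbot_pnl(queries: int, cost_per_query: float, ctr: float,
                revenue_per_click: float) -> dict:
    """Return the API bill, ad clicks, ad revenue, and net result in dollars."""
    api_bill = queries * cost_per_query
    clicks = queries * ctr
    ad_revenue = clicks * revenue_per_click
    return {
        "api_bill": api_bill,
        "clicks": clicks,
        "ad_revenue": ad_revenue,
        "net": ad_revenue - api_bill,
    }


result = chatbot_pnl(queries=10_000_000, cost_per_query=0.01,
                     ctr=0.005, revenue_per_click=0.20)
for name, value in result.items():
    print(f"{name:>10}: {value:>12,.0f}")

# Revenue per click you would need just to cover the bill at this CTR.
break_even_rpc = result["api_bill"] / result["clicks"]
print(f"break-even revenue per click: ${break_even_rpc:.2f}")
```

The last line is the painful one: at a 0.5% CTR you would need $2.00 per click just to break even, ten times the $0.20 assumed here.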

And the horrible thing is that the cloud giants are minting money selling compute capacity they don’t even fully use.

Yes, your compute capacity is sold more than once.

They overbook the same hardware across multiple clients, just like airlines overbook flights.

So yes, you’re paying full price to sit in the middle seat of the GPU economy.

And that’s why I am a total fan of AI-sovereignty. You host it all yourself, you build your own AI, so you’re not dependent on anyone else, and if Trump has a bad day, he cannot force Microsoft to shut you out of your own cloud space (read: So you think you own your AI? | LinkedIn). Just know that once you add up electricity, maintenance, cooling, and networking, AWS suddenly starts looking like a charity.

The thing to keep in mind is that it’s not the purchase price that kills you, it’s the upkeep.

If you want to read more on AI-sovereignty, here’s a paper I’m working on – it’s about the economic aspects of it (yes, again, economics, I know). Download here. No, here. I say here. Just kidding: here.

Now, let’s do some math (don’t worry, I’ll keep it friendly). . .

The hangover after the silicon party

Alright, math time. Knuckles cracked, sarcasm warmed up, calculator smoking. I know that 90% of y’all will stop here and scroll to the bottom, and that only you remain. Yes, you my dear geek-freak, cause you’re the one who can recite Pi to the seventh decimal, and somehow makes it sound like a personality trait.

So, in this part of the disaster called ‘the pain of running an AI-data center’, I’ll run two sets of numbers, nice and clean:

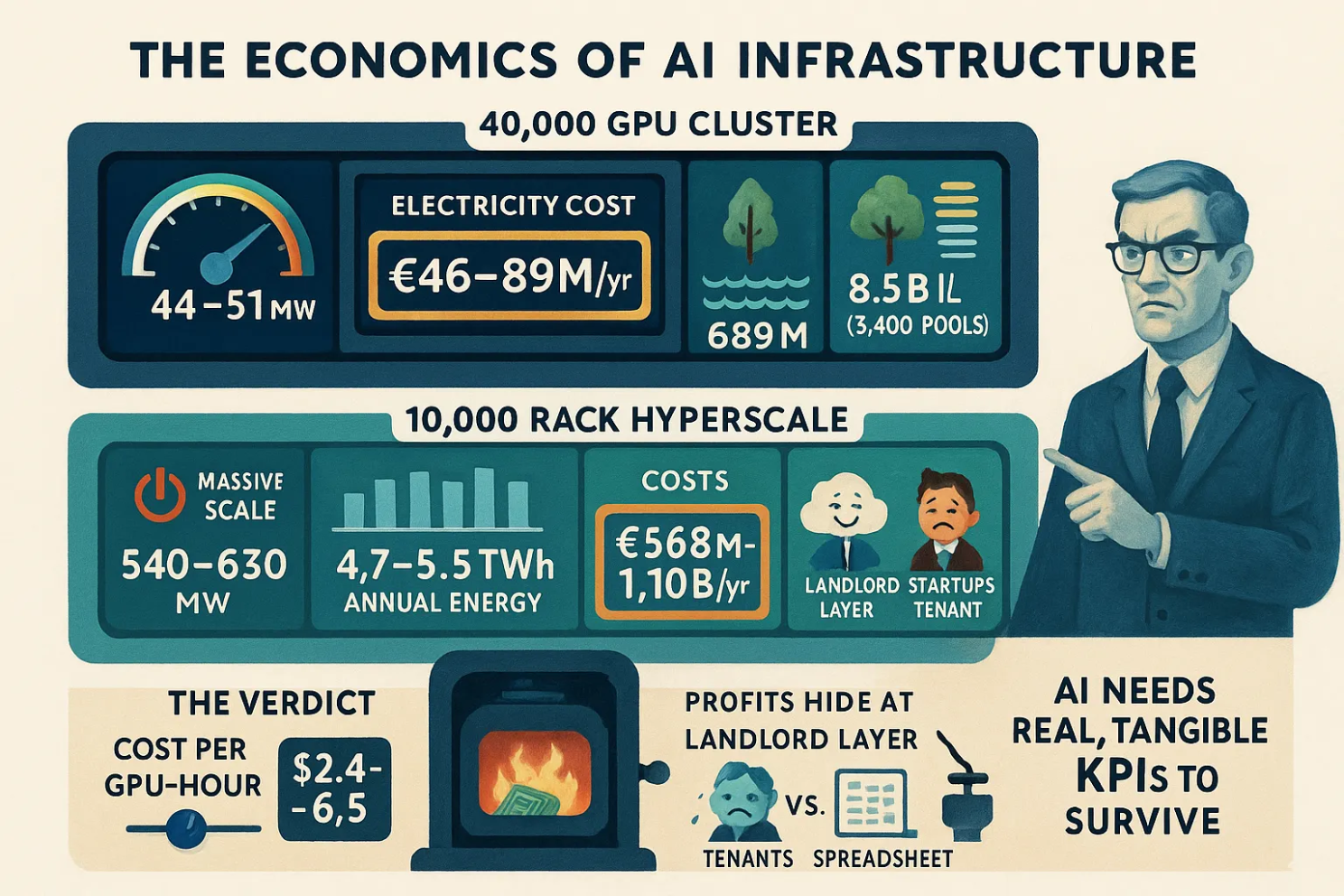

- A cluster with 40,000 NVIDIA H100s

- A hyperscale build with 10,000 racks (no kidding) at 45 kW IT load per rack

No fluff. Just watts, euros, water, carbon, depreciation, and also the part where CFOs usually start chanting Latin prayers.

The 40,000-GPU oven

I call it the Chernobyl Sing-Along, Dell blade edition. The setup is brutal in its simplicity.

GPU count: 40,000 units.

Per-GPU draw: I’m using 0.7 kW for H100-class accelerators under real load. That is conservative and still rude.

Raw GPU power: 40,000 × 0.7 kW = 28 MW, but GPUs aren’t alone in the building.

Now add the non-GPU guts that keep the circus running: CPUs, NICs, switches, storage. Add +30% to get total IT power. That lands at a total IT load of 28 MW × 1.3 = 36.4 MW.

Cooling and power overhead lives in your PUE (power usage effectiveness – which is the one number that every data-center engineer worships and every CFO pretends to understand). Modern liquid setups flirt with 1.2 if the planets align. Plenty of sites sit closer to 1.4. Run both.

- Site power @ PUE 1.2: 36.4 MW × 1.2 = 43.68 MW

- Site power @ PUE 1.4: 36.4 MW × 1.4 = 50.96 MW

This is the number your grid operator glares at.

Now, annual energy is the above times 8,760 h/yr.

- PUE 1.2 → 382.6 GWh/yr

- PUE 1.4 → 446.4 GWh/yr

That’s hundreds of gigawatt-hours to write your emails without typos.
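
For the spreadsheet-averse, here is the whole power chain as a small sketch. The helper names are hypothetical; the inputs (40,000 GPUs, 0.7 kW each, +30% for non-GPU gear, PUE 1.2 and 1.4) are the ones used above.

```python
HOURS_PER_YEAR = 8_760


def site_power_mw(gpus: int, kw_per_gpu: float, it_overhead: float,
                  pue: float) -> float:
    """GPU draw -> total IT load -> site power, in megawatts."""
    gpu_mw = gpus * kw_per_gpu / 1_000      # raw accelerator power
    it_mw = gpu_mw * (1 + it_overhead)      # add CPUs, NICs, switches, storage
    return it_mw * pue                      # add cooling and power losses


def annual_energy_gwh(site_mw: float) -> float:
    """Energy for a site running flat out all year, in gigawatt-hours."""
    return site_mw * HOURS_PER_YEAR / 1_000


for pue in (1.2, 1.4):
    mw = site_power_mw(gpus=40_000, kw_per_gpu=0.7, it_overhead=0.30, pue=pue)
    print(f"PUE {pue}: {mw:.2f} MW site power, "
          f"{annual_energy_gwh(mw):.1f} GWh/yr")
```

Swap in your own PUE or per-GPU draw and watch the grid operator’s eyebrow move accordingly.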

Electricity bill, with adult pricing

Data centers don’t pay retail, and they don’t get to collect dust (maybe some fairy-dust). So use a €0.12–€0.20/kWh band to keep it real.

- PUE 1.2 → €45.9M–€76.5M per year on electricity alone

- PUE 1.4 → €53.6M–€89.3M per year

If the power contract floats with peak pricing, well, you’re now in vibes-based finance-territory.
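
A quick sketch of the bill, assuming a flat price per kWh for the whole year (a floating contract would need hourly data, which I am not inventing here). The names are mine; the energy figures and price band are the ones above.

```python
# Annual energy per PUE case (GWh), taken from the energy step above.
ENERGY_GWH = {"PUE 1.2": 382.6, "PUE 1.4": 446.4}
PRICE_BAND_EUR_PER_KWH = (0.12, 0.20)


def electricity_cost_meur(energy_gwh: float, eur_per_kwh: float) -> float:
    """Annual bill in millions of euros.

    1 GWh is 1,000,000 kWh, so GWh x EUR/kWh lands directly in million EUR.
    """
    return energy_gwh * eur_per_kwh


for label, gwh in ENERGY_GWH.items():
    low, high = (electricity_cost_meur(gwh, price)
                 for price in PRICE_BAND_EUR_PER_KWH)
    print(f"{label}: EUR {low:.1f}M to {high:.1f}M per year")
```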

Water, because heat hates leaving

Evaporative systems chew water or fluorocarbons, but water is way, waaaaay cheaper. A decent rule of thumb is WUE† ≈ 1.8 L/kWh. Do the pour.

- PUE 1.2 case → 382.6 GWh = 382.6M kWh → ~689 million liters/year

Closed-loop or seawater systems can drop the water bill, but then your capex climbs a wall.

Just pick your poison.
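
Here is the pour as a sketch. It follows this article’s shortcut of multiplying the rule-of-thumb WUE by the site’s annual energy. The Olympic-pool size of 2.5 million liters is my assumption (a nominal 50 m × 25 m × 2 m pool), added only to make the number easier to picture.

```python
LITERS_PER_OLYMPIC_POOL = 2_500_000  # ASSUMPTION: nominal 50 m x 25 m x 2 m


def water_use_liters(energy_gwh: float, wue_l_per_kwh: float = 1.8) -> float:
    """Annual evaporated water in liters, from annual energy in GWh."""
    return energy_gwh * 1_000_000 * wue_l_per_kwh


liters = water_use_liters(382.6)  # PUE 1.2 case
print(f"{liters / 1e6:,.0f} million liters per year")
print(f"about {liters / LITERS_PER_OLYMPIC_POOL:,.0f} Olympic pools")
```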

† Water Usage Effectiveness. It’s kinda like the hydration index for data centers. PUE tells you how much electricity you waste, and WUE tells you how much water you murder to keep your GPUs from becoming goo.

Carbon, so the ESG people can sweat

Use a grid-mix band 0.35–0.50 kg CO₂/kWh to avoid fantasy hydro claims.

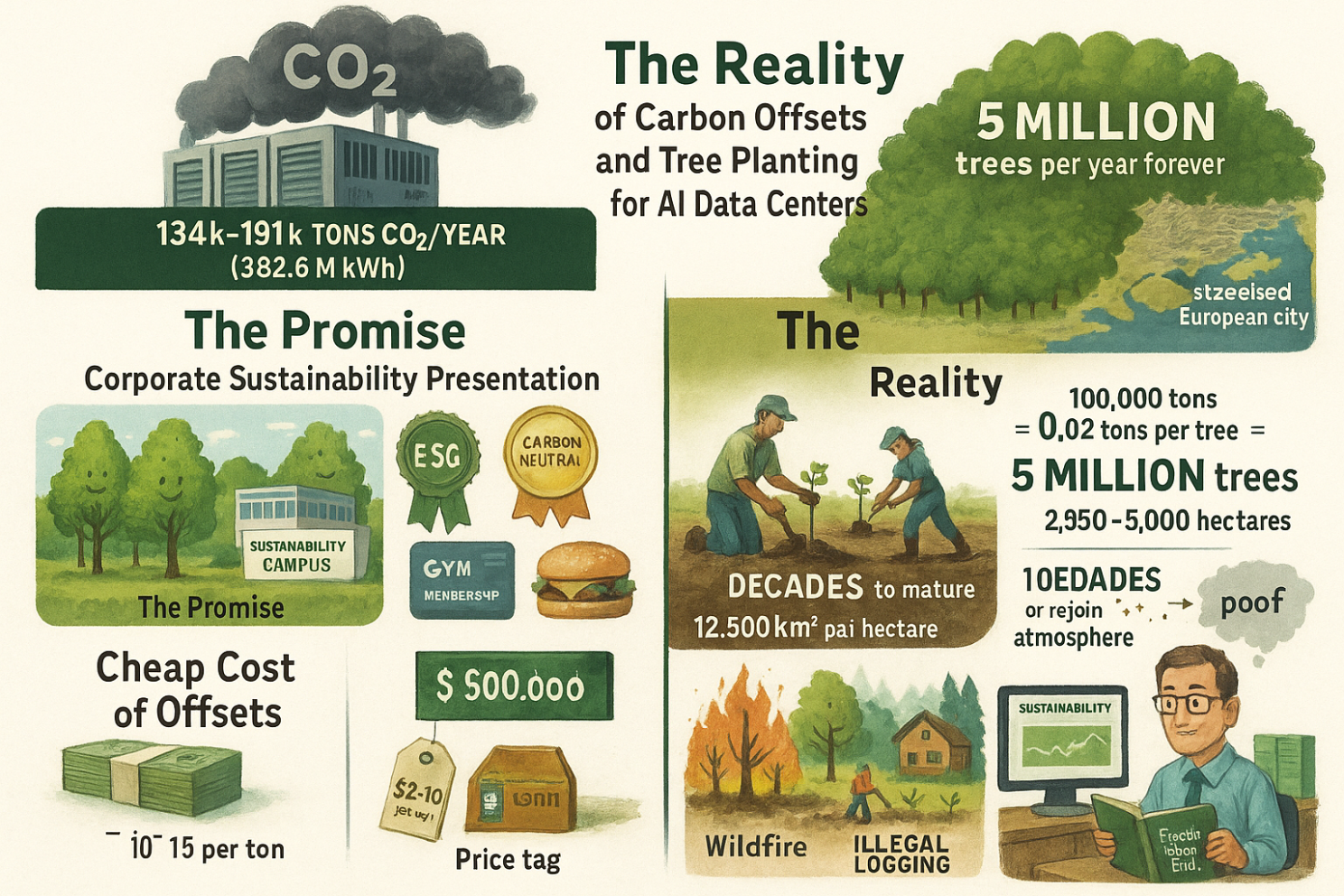

- PUE 1.2 case → 382.6M kWh × 0.35–0.50 = ~134k–191k tCO₂/yr
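
The same multiplication as a sketch, mostly so the unit juggling is visible. The function name is hypothetical; the grid-mix band is the one above.

```python
def emissions_tonnes(energy_gwh: float, kg_co2_per_kwh: float) -> float:
    """Annual emissions in tonnes of CO2.

    GWh -> kWh multiplies by 1e6, kg -> tonnes divides by 1e3,
    so the net conversion factor is 1e3.
    """
    return energy_gwh * 1_000 * kg_co2_per_kwh


low = emissions_tonnes(382.6, 0.35)
high = emissions_tonnes(382.6, 0.50)
print(f"{low / 1_000:.0f}k to {high / 1_000:.0f}k tCO2 per year")
```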

Oopsy, now you have to buy offsets‡ – that’s the corporate lie where they say “I promise to go to the gym” while ordering another Big Mac – and next you rename the building “Sustainability Campus”, and call it even.

Say your offset is a forest. An average, healthy tree absorbs about 20–25 kilograms of CO₂ per year, and that’s the ideal case with a mature tree, good soil, no wildfires, no logging, no developer turning it into a Starbucks. To offset 100,000 tons of CO₂ at 20 kg (0.02 tons) per tree, you’d need 100,000 ÷ 0.02 = 5 million trees.

That’s five million actual, growing, not-dead trees, and certainly not the ones in the PowerPoint deck your strategy intern made.

And that’s just for one year of your AI inferno.

If your data center keeps running at that level annually, you’d need to plant 5 million new trees every single year, forever.

Let that sink in. . .

A typical reforestation project plants about 1,000–2,000 trees per hectare (10,000 m²). So for 5 million trees, you’d need roughly 2,500–5,000 hectares – that’s 25–50 km², or the size of a mid-sized European city. Yup. And those trees need decades to mature before they actually capture the full 100,000 tons you’re claiming, so in reality, your carbon offset certificate says “done”, but the trees are still teenagers just learning photosynthesis.

Offsets through reforestation are cheap, like $2–$10 per ton, because they’re mostly “we promise we’ll keep these trees alive”.

So 100,000 tons × $5/ton = $500,000.

That’s half a million bucks to pretend your billion-dollar GPU sauna is eco-neutral.
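
The offset arithmetic in one place, as a rough sketch. All inputs are the round numbers from the text (100,000 tons, 20 kg per tree per year, 1,000–2,000 trees per hectare, $5 per ton); the function names are mine.

```python
def trees_needed(tonnes_co2: float, kg_per_tree_per_year: float = 20) -> float:
    """Mature trees required to absorb the given tonnes of CO2 in one year."""
    return tonnes_co2 * 1_000 / kg_per_tree_per_year


def hectares_needed(trees: float, trees_per_hectare: float) -> float:
    """Planting area in hectares at a given planting density."""
    return trees / trees_per_hectare


def offset_cost_usd(tonnes_co2: float, usd_per_tonne: float) -> float:
    """Price of buying the offsets at a flat rate per tonne."""
    return tonnes_co2 * usd_per_tonne


tonnes = 100_000
trees = trees_needed(tonnes)
print(f"trees: {trees:,.0f}")
print(f"land:  {hectares_needed(trees, 2_000):,.0f} to "
      f"{hectares_needed(trees, 1_000):,.0f} hectares")
print(f"cost:  ${offset_cost_usd(tonnes, 5):,.0f}")
```

Note the mismatch the numbers expose: the forest is the size of a city, the invoice is the size of a rounding error.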

A rounding error in the budget, and a medal in the ESG report. But remember, one wildfire, drought, or illegal logging spree later – sha poof – your offsets just rejoined the atmosphere.

But don’t worry, your accountant already closed the fiscal year, and your sustainability page is still green.

‡ Carbon offsets (or “carbon credits”) let companies compensate for their emissions by paying someone else to reduce or capture an equivalent amount of CO₂. So if your data center emits 100,000 tons of CO₂, you can buy 100,000 tons worth of offsets, and voilà, your PowerPoint deck now says you’re “carbon neutral”.

CapEx, depreciation and the screaming ledger

Let’s start with simple math.

Each NVIDIA H100 chip costs about $30,000.

If you buy 40,000 of them, that’s $1.2 billion just for the GPUs. That’s hyperscaler money. But GPUs don’t work alone, cause you still need servers to hold them, network cables, power systems, cooling, and a building big enough to keep everything running.

All that extra stuff adds roughly 70% to 150% on top.

So if you spend $1.2 billion on GPUs, the real price tag for the whole data center ends up somewhere between $2 billion and $3 billion. I’ll run both ends: a lean build at $2.04B (1.7× the GPU bill) and a real one at $3.0B (2.5×).

Straight-line depreciation in AI land is 3 years, if only because generation-over-generation progress turns last year’s “revolution” into tomorrow’s e-waste.

- Depreciation, lean → $680M/yr

- Depreciation, real → $1,000M/yr

Now layer operations and maintenance cost at ~5% of capex for parts, people, pumps, pipes, pain.

- Lean → ~$102M/yr

- Real → ~$150M/yr
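
A sketch of where those four numbers come from. The all-in capex totals ($2.04B lean, $3.0B real) are the ones this article uses in the financing step; the three-year straight-line schedule and 5% O&M rate are as stated above. Names are illustrative.

```python
GPU_COUNT = 40_000
GPU_PRICE_USD = 30_000
GPU_CAPEX_USD = GPU_COUNT * GPU_PRICE_USD  # GPUs only

# All-in capex per scenario, as used in this article.
BUILD_CAPEX_USD = {"lean": 2_040_000_000, "real": 3_000_000_000}


def depreciation_per_year(capex_usd: float, years: int = 3) -> float:
    """Straight-line depreciation: equal slices over the asset's life."""
    return capex_usd / years


def om_per_year(capex_usd: float, rate: float = 0.05) -> float:
    """Operations and maintenance as a flat share of capex."""
    return capex_usd * rate


for name, capex in BUILD_CAPEX_USD.items():
    print(f"{name}: capex is {capex / GPU_CAPEX_USD:.1f}x the GPU bill, "
          f"depreciation ${depreciation_per_year(capex) / 1e6:,.0f}M/yr, "
          f"O&M ${om_per_year(capex) / 1e6:,.0f}M/yr")
```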

And if you’re living in Europe, convert €→$ with a lazy 1.07 multiplier and try not to cry.

- Lean power, PUE 1.2 @ €0.12 → ~$49M/yr

- Real power, PUE 1.4 @ €0.20 → ~$96M/yr

Put it together, lean case, nice power price, nice PUE:

- Total annual cash burn ≈ $680M (dep) + $102M (O&M) + $49M (power) = ~$831M/yr

Now divide by available GPU-hours. You own 40,000 GPUs, they hum 24/7, 8,760 hours per year.

- GPU-hours/year = 350.4M

- Fully loaded cost per GPU-hour, lean case ≈ $831M / 350.4M = ~$2.37/GPU-hr
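
The division above, as a minimal sketch. It assumes every GPU is busy every hour of the year, which is exactly the fairy tale the lean case tells. Names are mine.

```python
HOURS_PER_YEAR = 8_760


def gpu_hours_per_year(gpus: int) -> int:
    """Available GPU-hours if every card runs around the clock."""
    return gpus * HOURS_PER_YEAR


def cost_per_gpu_hour(annual_cost_usd: float, gpus: int) -> float:
    """Annual cost spread evenly over all available GPU-hours."""
    return annual_cost_usd / gpu_hours_per_year(gpus)


# Lean case: depreciation + O&M + power, in dollars per year.
lean_annual_usd = 680e6 + 102e6 + 49e6

print(f"GPU-hours/year: {gpu_hours_per_year(40_000):,}")
print(f"lean cost: ${cost_per_gpu_hour(lean_annual_usd, 40_000):.2f}/GPU-hr")
```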

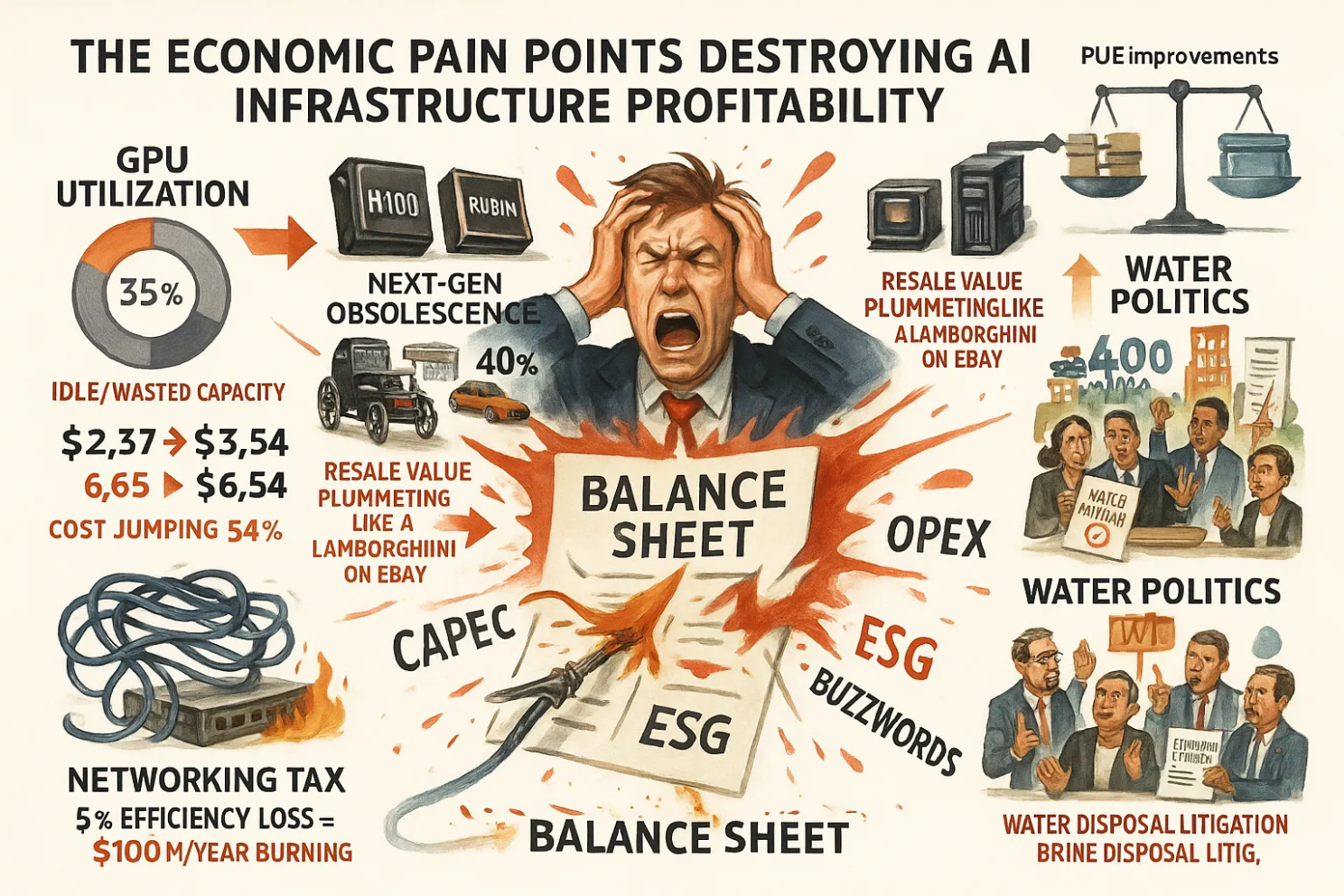

That’s without financing cost, tax, stranded utilization, or the little scandal called “oh no we only used 65% of it”. Now add WACC† of 8% on $2.04B and you’re eating ~$163M/yr in capital cost, nudging you to ~$2.84/GPU-hr before you even account for idle time and orchestration waste.

Run the real build with higher capex and rougher power:

- $1.0B dep + $150M O&M + $96M power + $240M capital cost (8% on $3B) → ~$1.486B/yr

- $/GPU-hr → $1.486B / 350.4M = ~$4.24/GPU-hr
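
To compare the lean and real builds side by side, here is a small sketch that stacks depreciation, O&M, power, and capital cost into one figure. The `Build` class and its field names are hypothetical; the rates (3-year straight-line, 5% O&M, 8% WACC) and dollar inputs are the ones used above.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8_760


@dataclass
class Build:
    name: str
    capex_usd: float
    power_usd_per_year: float
    gpus: int = 40_000
    dep_years: int = 3
    om_rate: float = 0.05
    wacc: float = 0.08

    def annual_cost(self, with_financing: bool = True) -> float:
        """Depreciation + O&M + power, optionally plus cost of capital."""
        cost = (self.capex_usd / self.dep_years
                + self.capex_usd * self.om_rate
                + self.power_usd_per_year)
        if with_financing:
            cost += self.capex_usd * self.wacc
        return cost

    def cost_per_gpu_hour(self, with_financing: bool = True) -> float:
        """Annual cost spread over every available GPU-hour."""
        return self.annual_cost(with_financing) / (self.gpus * HOURS_PER_YEAR)


lean = Build("lean", capex_usd=2.04e9, power_usd_per_year=49e6)
real = Build("real", capex_usd=3.0e9, power_usd_per_year=96e6)

for build in (lean, real):
    print(f"{build.name}: ${build.cost_per_gpu_hour(False):.2f}/GPU-hr bare, "
          f"${build.cost_per_gpu_hour(True):.2f}/GPU-hr with financing")
```

Keeping financing as a switch makes it easy to see how much of the bill is hardware and how much is simply the price of other people’s money.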

Four dollars per GPU per hour.

That’s your bottom-of-stack cost before vendor margins, software licenses, storage, bandwidth egress (outbound data), or the mandatory pizza for the night shift when a pump goes kaputt at 01:11.

Cloud lists H100 hours in the $2–$5 range if you bring a priest and a reservation. Now you see why the landlords smile, and the tenants write thought leadership.

And I haven’t even calculated the hyperscaler. . .

† Weighted Average Cost of Capital. It’s basically the average interest rate your company pays to use other people’s money.

Now stitch it to economics and salt it with pain

Alright, time to leave the warm, glowing world of GPU specs and enter the cold, fluorescent-lit basement where accountants live. This is where your AI dream dies, not from heat but from spreadsheets, because the depreciation melts souls.

The physics hates you, but the accounting people hate you more.

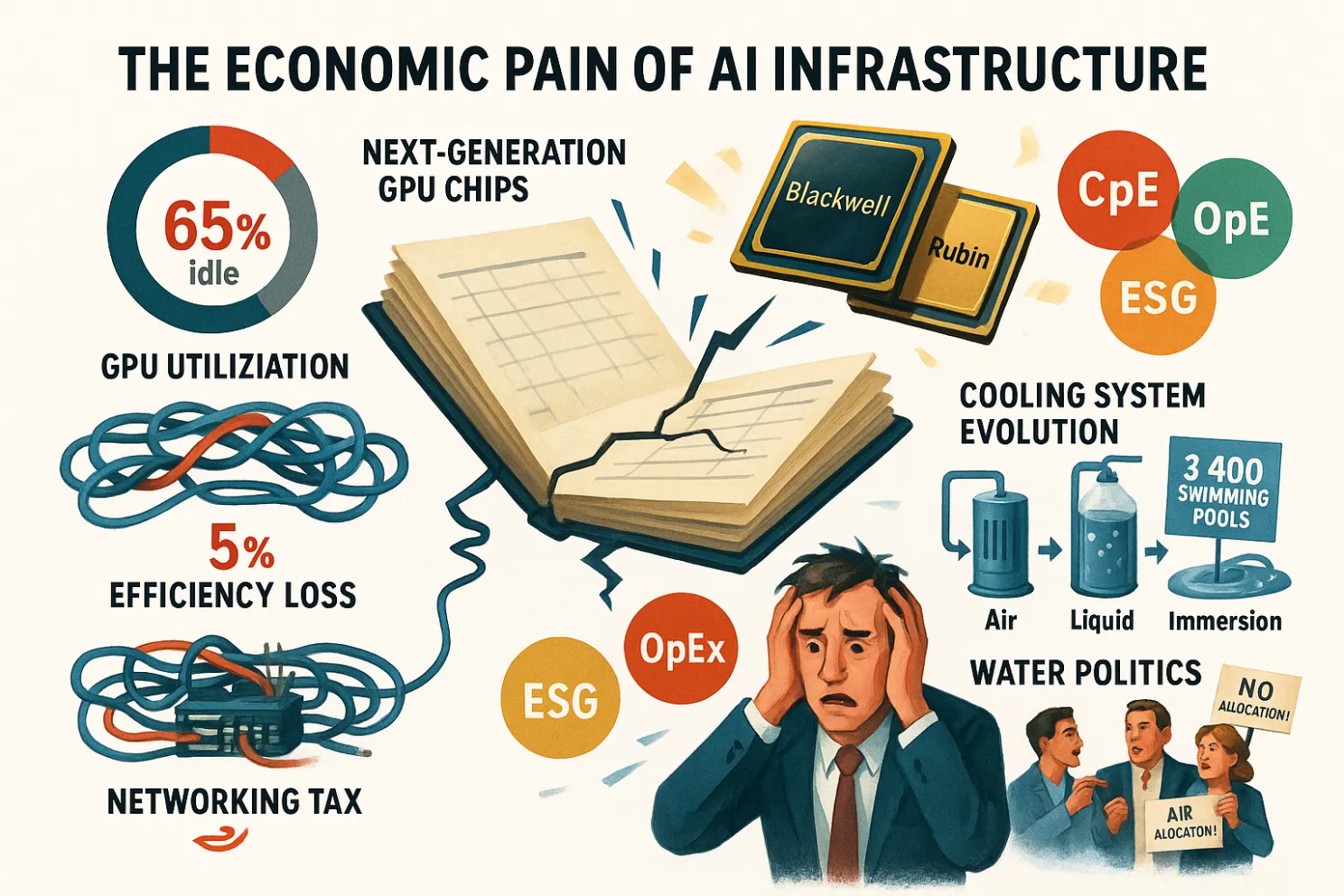

- Utilization kills margins. A 40,000-GPU cluster that averages 65% real utilization sees the $/GPU-hr jump by ~54% because idle time still depreciates. That $2.37 becomes $3.65 in the lean case, and the $4.24 becomes $6.52 in the real capex case with financing and ugly power.

- If Blackwell-plus or Rubin‡ comes with a 2× performance-per-watt increase next year, your current fleet loses resale value like a gently used Lambo on eBay. A 40% write-down mid-cycle turns your three-year depreciation fairy tale into a two-year fire sale.

- Networking tax† is not a rounding error at this scale. You’ve got high-radix switches, optics, cabling, and NIC bisection bandwidth that easily land in the hundreds of millions. Say you lose 5% of cluster efficiency to topology or congestion and you just set another $100M/year on fire.

- Now take cooling step-changes. Moving from air to single-phase, to cold-plate, to immersion shifts capex and O&M around like it’s a game. You win PUE points, but you lose serviceability.

- Water risk will bite you in the ass as well. City councils do read the newspapers. At hyperscale, a water bill the size of 3,400 Olympic pools a year triggers hearings, demands for water-neutrality, on-site reclaim plants, and a brand-new budget line item called “brine disposal litigation”.

So, between idle GPUs, faster successors, networking tolls, cooling acrobatics, and water politics, your billion-dollar “AI brain” turns into an aneurysm for your CFO. The tech does scale, but the economics is doing a 360 and projectile vomiting across the balance sheet where it is splattering capex, opex, and every ESG buzzword within a ten-meter radius.
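
The utilization point is the one worth playing with, so here is a tiny sensitivity sketch. It assumes the simplest possible model: costs are fixed, and only the busy hours count as sellable. The base costs are the $/GPU-hr figures from the previous section; the function name is mine.

```python
def effective_cost_per_gpu_hour(base_cost: float, utilization: float) -> float:
    """Cost per useful GPU-hour when only a fraction of hours do real work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return base_cost / utilization


BASE_COSTS = {"lean (no financing)": 2.37, "real (with financing)": 4.24}

for label, base in BASE_COSTS.items():
    for utilization in (1.00, 0.80, 0.65, 0.50):
        cost = effective_cost_per_gpu_hour(base, utilization)
        markup = cost / base - 1
        print(f"{label:<22} @ {utilization:.0%}: "
              f"${cost:.2f}/GPU-hr (+{markup:.0%})")
```

At 50% utilization the price per useful hour simply doubles, which is why schedulers and orchestration get budget even when nobody understands them.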

‡ “Blackwell-plus” and “Rubin” are the names for NVIDIA’s next generations of GPUs. The new ones that make today’s $30,000 H100s look like a horse-drawn carriage.

† Networking tax isn’t a real government tax (though it feels like one). It’s the performance and cost penalty you pay for moving data around inside a data center. Yup, between your GPUs, servers, racks, or even buildings. It is waste, cause it’s not doing useful computation.

But, but, can’t inference revenue revive this corpse?

Short answer, no. Long answer, still no, but with a lot more decimals.

If you own your stack at $3–$6 per GPU-hour real cost, and you actually keep the cards hot with something useful, you need pricing power. Most API buyers don’t pay for your capex therapy. They pay per token or per call, and they churn the minute your price flinches.
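
To see how a $/GPU-hour cost turns into a per-token floor, here is a rough sketch. Big caveat: the throughput values and the selling price below are made-up placeholders, not benchmarks or real price lists, because real numbers depend heavily on model size, batching, and hardware. Only the $3–$6 per GPU-hour range comes from this article.

```python
SECONDS_PER_HOUR = 3_600


def cost_per_million_tokens(cost_per_gpu_hour: float,
                            tokens_per_second: float) -> float:
    """Compute cost of generating one million tokens on a single GPU."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return cost_per_gpu_hour / tokens_per_hour * 1_000_000


def gross_margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of the selling price."""
    return (price - cost) / price


# HYPOTHETICAL placeholders, chosen only to show the shape of the curve.
ASSUMED_TOKENS_PER_SECOND = (500, 1_000, 2_000)
ASSUMED_PRICE_PER_MILLION_TOKENS = 2.00

for gpu_hour_cost in (3.0, 6.0):  # the article's real-cost range
    for tps in ASSUMED_TOKENS_PER_SECOND:
        cost = cost_per_million_tokens(gpu_hour_cost, tps)
        margin = gross_margin(ASSUMED_PRICE_PER_MILLION_TOKENS, cost)
        print(f"${gpu_hour_cost:.2f}/GPU-hr, {tps:>5} tok/s: "
              f"${cost:.2f} per 1M tokens, margin {margin:+.0%}")
```

Plug in your own measured throughput and your actual price. If the margin column goes negative, you are the subsidy.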

If you’re selling foundation-model inference at commodity prices, the gross margin evaporates. The only way out is vertical integration where your AI rides shotgun on a product with real margin, so compute is buried in unit economics that don’t bleed you to death.

Or . . .

- On-prem sovereignty‡ → where the buyer eats capex for regulatory reasons or risk mitigation (Hi Trump!), and you sell software and services like a respectable villain.

- Browser-use RPA-style workflows† that chew hours and print hard business outcomes, not hype, so the client accepts a compute bill because the output moves KPIs, and not the CEO’s LinkedIn.

Otherwise you are a meter with a marketing department.

‡ Again, read paper.

† This is the future money maker in AI → Read The post-human back office | LinkedIn

TL;DR in accountant voice, without mercy

Yes, @MarcDrees – at the end of the story, where it should be!

The 40,000-GPU cluster:

- Power ~44–51 MW continuous, 383–446 GWh/yr

- Electricity ~€46–€89M/yr depending on PUE and price

- Water ~689M liters/yr if you evaporate your guilt

- Carbon ~134k–191k tCO₂/yr on mixed grids

- CapEx $2.0–$3.0B all-in, depreciation $0.68–$1.0B/yr

- Real $/GPU-hr ~$2.4–$4.2 in fairyland, ~$3.6–$6.5 when you add utilization truth and financing

The 10,000-rack hyperscale fantasy at 45 kW IT/rack:

- Site power 540–630 MW

- Annual energy 4.7–5.5 TWh

- Electricity cost €568M–€1.10B/yr

- Water ~8.5B liters/yr, also known as 3,400+ pools

- Local politics spicy
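
Since the hyperscale numbers show up here without their working, this sketch reproduces them with the same method as the 40,000-GPU case. The 45 kW per rack is treated as total IT load, so there is no extra +30% step. The function name and the 2.5-million-liter Olympic pool are my assumptions; everything else is from this article.

```python
HOURS_PER_YEAR = 8_760
LITERS_PER_OLYMPIC_POOL = 2_500_000  # ASSUMPTION: nominal 50 m x 25 m x 2 m


def hyperscale_build(racks: int = 10_000, kw_per_rack: float = 45.0,
                     pue: float = 1.2, eur_per_kwh: float = 0.12,
                     wue_l_per_kwh: float = 1.8) -> dict:
    """Site power, annual energy, electricity bill, and water for a rack farm."""
    it_mw = racks * kw_per_rack / 1_000
    site_mw = it_mw * pue
    energy_kwh = site_mw * 1_000 * HOURS_PER_YEAR
    water_l = energy_kwh * wue_l_per_kwh
    return {
        "site_mw": site_mw,
        "energy_twh": energy_kwh / 1e9,
        "electricity_meur": energy_kwh * eur_per_kwh / 1e6,
        "water_billion_l": water_l / 1e9,
        "olympic_pools": water_l / LITERS_PER_OLYMPIC_POOL,
    }


best = hyperscale_build(pue=1.2, eur_per_kwh=0.12)
worst = hyperscale_build(pue=1.4, eur_per_kwh=0.20)

for label, case in (("best", best), ("worst", worst)):
    print(f"{label}: {case['site_mw']:.0f} MW, {case['energy_twh']:.2f} TWh/yr, "
          f"EUR {case['electricity_meur']:,.0f}M/yr, "
          f"{case['water_billion_l']:.1f}B liters, "
          f"{case['olympic_pools']:,.0f} pools")
```

The water and pool figures in the list above correspond to the best case (PUE 1.2).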

That’s the math.

So no, your AI needs real KPIs that it can move, otherwise your little pet project is doomed from the start, and that’s why most AI projects fail. Not because they ain’t used, but because the benefits aren’t tangible.

Because if it walks like a furnace, it quacks like a furnace, it will bill like a furnace. The profits hide at the landlord layer and not at the tenant layer. Which is why the cloud vendors smile with all their teeth, and everyone else cries over their spreadsheet.

Enough!

If you want the original research behind all-o-this, say, to bolt these numbers straight into your own blog but without the venom and the one-liners I use, just gimme a holler.

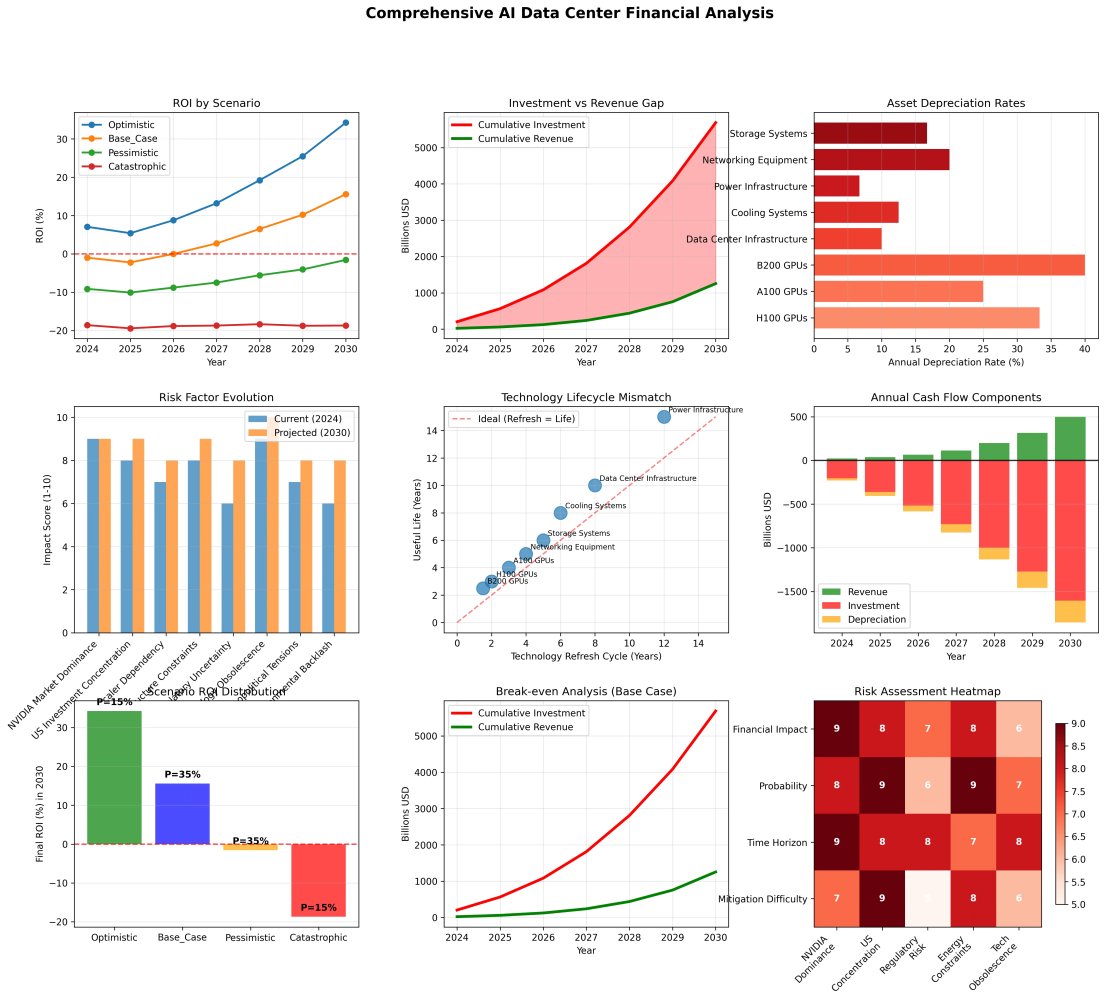

You get cool stuff like this:

Signing off,

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.

👉 Think a friend would enjoy this too? Share the newsletter and let them join the conversation. LinkedIn, Google and the AI engines appreciate your likes by making my articles available to more readers.

To keep you doomscrolling 👇

- I may have found a solution to Vibe Coding’s technical debt problem | LinkedIn

- Shadow AI isn’t rebellion it’s office survival | LinkedIn

- Macrohard is Musk’s middle finger to Microsoft | LinkedIn

- We are in the midst of an incremental apocalypse and only the 1% are prepared | LinkedIn

- Did ChatGPT actually steal your job? (Including job risk-assessment tool) | LinkedIn

- Living in the post-human economy | LinkedIn

- Vibe Coding is gonna spawn the most braindead software generation ever | LinkedIn

- Workslop is the new office plague | LinkedIn

- The funniest comments ever left in source code | LinkedIn

- The Sloppiverse is here, and what are the consequences for writing and speaking? | LinkedIn

- OpenAI finally confesses their bots are chronic liars | LinkedIn

- Money, the final frontier. . . | LinkedIn

- Kickstarter exposed. The ultimate honeytrap for investors | LinkedIn

- China’s AI+ plan and the Manus middle finger | LinkedIn

- Autopsy of an algorithm – Is building an audience still worth it these days? | LinkedIn

- AI is screwing with your résumé and you’re letting it happen | LinkedIn

- Oops! I did it again. . . | LinkedIn

- Palantir turns your life into a spreadsheet | LinkedIn

- Another nail in the coffin – AI’s not ‘reasoning’ at all | LinkedIn

- How AI went from miracle to bubble. An interactive timeline | LinkedIn

- The day vibe coding jobs got real and half the dev world cried into their keyboards | LinkedIn

- The Buy Now – Cry Later company learns about karma | LinkedIn
