I think it was Monday. Yes, it was Monday indeed, when I tried explaining to a therapist why I built an algorithm that basically thinks like me when I’ve had too much taurine and not enough adult supervision. He nodded, wrote something about “hyperfocus delusions of grandeur”, and asked if I was avoiding something important. I was. I was avoiding everything important, including sleep, my taxes, and the terror of realizing that I had just invented a digital twin with ADHD.

See, I didn’t mean to. It just . . . happened.

I set out to study “computational creativity”, a noble cause indeed, but then I realized that most scientists write very serious papers about how to make machines slightly less boring than Excel, not about building a more creative algorithm. The whole field of AI has its head so far up its butt that it could start mining data from its own colon. People do want an “AI that can think creatively”, but nobody wants to admit what every neuroscientist already knows: creative brains are disasters. Controlled chaos, in fact.

I simply call it “my biochemical jazz”.

So I decided to build one.

I wanted a model that doesn’t behave. A system that wanders, forgets, gets itself lost, interrupts itself, overthinks, and somehow produces ideas that make you go, “Wait, what? Now that’s stupid”, followed five minutes later by, “Hey, hold up, that’s genius”.

I called it “The Wanderer’s Algorithm”, because “Artificial Daydreaming Loop” sounded kinda lame, like the kind of project you’d only undertake after three rakis and a head injury.

Yes, people, this here blog is about a freaking algorithm I created to turn your dearly beloved, structured AI assistant into a wandering, over-thinking, hyperactive gremlin that refuses to stay on task, interrupts itself mid-sentence, forgets what it was saying, remembers something funnier, and somehow still ends up inventing poetry about some odd combination of parking meters and late-stage capitalism.

It is not polite, it’s not linear, and it definitely doesn’t “stay focused”. This here, my dear well above-intelligent friend-o-mine, this here is an algorithm with ADHD, a digital twin of my own brain on a Monday morning, and I swear it is either the future of creativity or the most expensive cry for help I’ve ever compiled.

If you want to get your hands on the paper, download it here.

More rants after the messages:

- Connect with me on LinkedIn 🙏

- Subscribe to TechTonic Shifts to get your daily dose of tech 📰

- Please comment, like or clap the article. Whatever you fancy.

What if Einstein had access to ChatGPT

Let’s play a little mental pinball, or as Einstein liked to call it, a Gedankenexperiment. You’ve got a bunch of people locked in a conference room. They’re all hyped up, and each is sitting behind their own ChatGPT window like it’s a second-brain thinking cap. Their goal is to come up with “the next big thing”.

Of course, you’d expect chaos, sparks, notebooks catching fire from all that genius.

But no.

Every single one of them spits out the same beige, vomit-like idea with slightly different toppings. A world of parrots echoing each other in a corporate tone. The few rebels who didn’t use ChatGPT came up with ideas that were actually interesting. Messy. Human. Wrong, but in beautiful ways.

A recent study confirmed what anyone with a functioning imagination already suspected, that when you let a Large Language Model “help” with creativity, it doesn’t amplify originality. It neuters it.

(See: Lobotomized AI if you enjoy watching hope die in bar graphs.)

To me, ChatGPT is nothing but a creativity assembly line that is churning out the same ideas over and over again.

Now, I’ve had long debates with smart people who swear ChatGPT is creative because it surprises them. Which is adorable, honestly, but they confuse novelty with originality.

The thing is, a Large Language Model doesn’t imagine. It doesn’t dream. It infers. Meaning, it rearranges the world that it already knows into shapes that might look fresh if you don’t squint too hard. It finds the most probable next thing to say.

But probability isn’t creativity. It’s quite the opposite.
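To make “the most probable next thing” concrete, here’s a toy Python sketch of greedy decoding versus temperature sampling. The candidate words and their scores are made up for illustration and don’t come from any real model. Greedy decoding picks “force” every single time, while raising the temperature flattens the odds so that a long shot like “curvature” gets a slightly better chance.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(words, logits):
    """Greedy decoding: always return the single most probable word."""
    return words[logits.index(max(logits))]

def sample(words, logits, temperature, rng):
    """Temperature sampling: draw a word at random, weighted by probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Made-up next-word scores for the prompt "Gravity is a ..."
words = ["force", "attraction", "law", "curvature"]
logits = [4.0, 3.0, 2.5, 0.5]

print(greedy(words, logits))  # "force", every single time
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(words, probs)})
print(sample(words, logits, 2.0, random.Random(7)))
```

Note that temperature only reshuffles the odds among continuations the model already has on its list; it never adds a new word to that list.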

Now, let us bring in a man who didn’t care for probability (he actually hated it, because “God doesn’t play dice”†): Albert freaking Einstein.

† Einstein actually said, in a 1926 letter to physicist Max Born: “Quantum mechanics is very impressive. But an inner voice tells me that it is not yet the real thing. The theory says a lot, but does not really bring us any closer to the secret of the ‘old one.’ I, at any rate, am convinced that He does not play dice”.

Why 1905 would have broken ChatGPT

It’s 1905. Einstein is a 26-year-old patent clerk in Bern. He’s late on rent, underemployed, and writing four papers that will detonate physics forever – the photoelectric effect, Brownian motion, special relativity, and that cheeky E=mc² equation. This “Annus Mirabilis” (his miracle year) would become the launchpad for general relativity, a theory so weird and counterintuitive that it made gravity sexy again.

He talked about spacetime bending. About acceleration being indistinguishable from gravity. About time slowing down when you move close to the speed of light. His equations even implied black holes and gravitational waves before anyone had language for them.

These ideas sounded like something you’d come up with after swallowing X with shrooms, and you gotta know that the scientific consensus back then was that Newton had already tied the universe up with a bow. Gravity, motion, inertia, reaction – check, check, check, done. 19th-century students, a young Max Planck among them, were literally told not to bother studying physics (sic!).

Everything important was “solved.”

Then Einstein strolled in and said, “Oy vey, cute model. Shame about reality”.

He rewrote the laws of the cosmos on a notepad between filing patent forms. He had no GPU. No training data. No autocomplete. He only had audacity, curiosity, and probably too much ganja in his pipe.

The real thought experiment

Now imagine one of those Newton-worshipping physicists of 1900 had access to ChatGPT. Let’s say the model is fully trained on everything humans knew at the time – Newton, Maxwell, Galileo, all of it.

Their prompt:

“Come up with a revolutionary new theory of gravity”.

What do you think would happen?

I think ChatGPT would politely regurgitate a dozen Newtonian explanations about forces, masses, and attraction, and then summarize them into a crisp paragraph with a “fun fact” about apples. Maybe it’d throw in a Latin quote for flair. Heck, it might even format it in Markdown!

But it would never – ever – have invented relativity.

Because you don’t get spacetime curvature from statistical inference.

You don’t pull gravitational time dilation from the probability distribution of known physics. To leap from Newton to Einstein, you need a willingness to break the dataset, to look at everything the world believes and say, “Nah, let’s do it different this time”.

LLMs don’t say “nah.” They say “as per previous literature”.

The limits of inference: out-of-the-box-thinking

So, Einstein did not infer. He rebelled. He didn’t combine the highest-probability statements of his time like an LLM does today. He imagined what was impossible – out of the box.

That’s the difference. Creativity isn’t about connecting the dots that exist. It’s about drawing a dot that doesn’t, then convincing the universe to catch up.

ChatGPT, on the other hand, will always color inside the lines, because the lines are its only world. It’s not curious. It’s compliant. It’s a very polite mirror reflecting all our old ideas back at us, just formatted in active voice.

So if you’d asked ChatGPT circa 1905 for a theory of gravity, it would’ve told you Newton already nailed it. Maybe offered to summarize his work “for clarity”. It would have missed the curvature of spacetime, the relativity of simultaneity, the idea that mass and energy are interchangeable, you know, all those delicious, reality-bending insights that came from daydreams.

From wandering thoughts.

The case for wandering minds

So, if ChatGPT wouldn’t have invented relativity, who would? Someone like Einstein, obviously. Someone whose brain treats focus like a rental car and curiosity like a novel drug. Someone who stares at the same problem for years, then solves it sideways because they got bored halfway through and took a mental detour into the absurd.

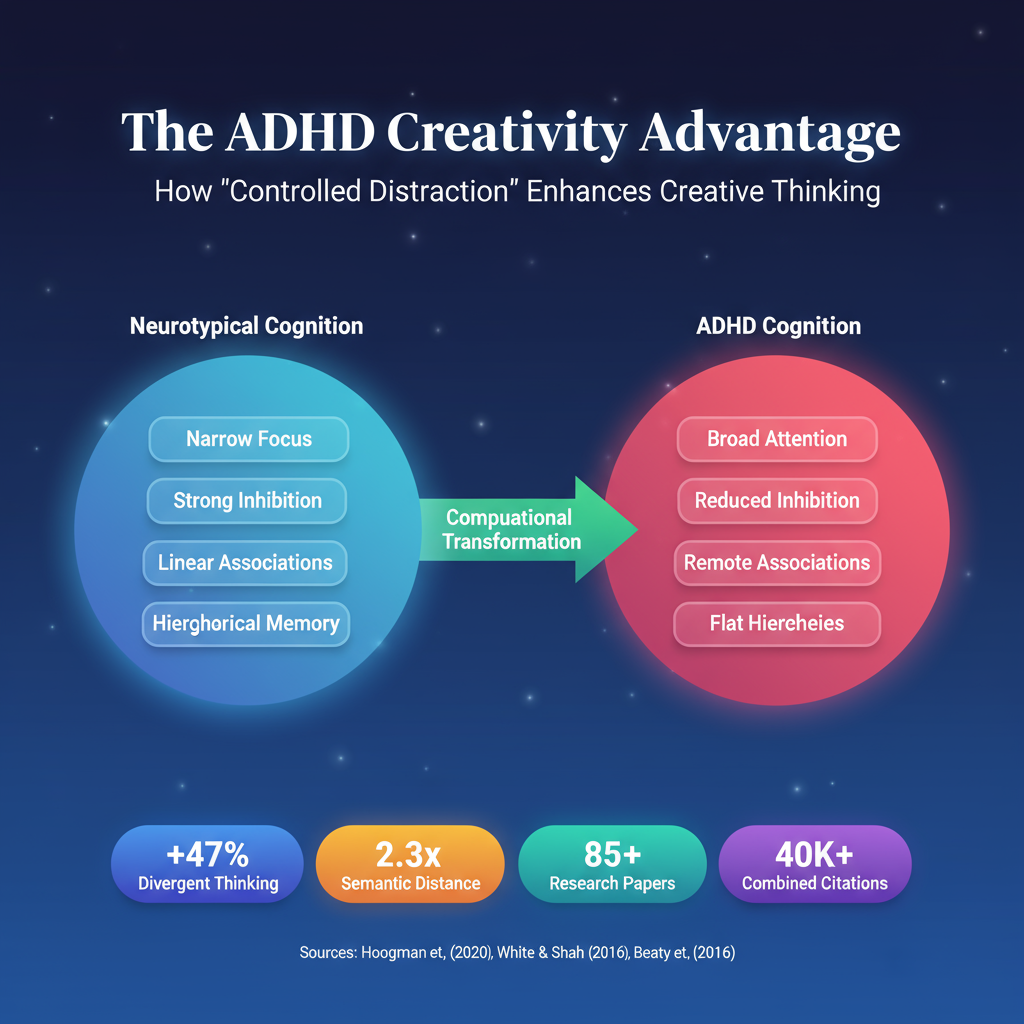

In other words, someone whose cognitive wiring looks suspiciously like . . . ADHD.

Yes, I modelled the algorithm after a neurodivergent attention-deficit condition.

I think that’s quite appropriate, don’t you?

Because all the AI nerds are out there chanting “Attention Is All You Need‡” like gospel, and I figured, well… not really. Sometimes the magic comes from losing it.

It’s not attention you need – it’s controlled distraction with a purpose, chaos with a steering wheel, and curiosity that forgot where it was going and found something better on the way.

If transformers are built on attention, then “The Wanderer’s Algorithm” is modelled on minds that can’t sit still and won’t stop doodling in the margins.

Most of history’s breakthroughs didn’t come from the straight-A kids. They came from the ones staring out the window, who were mentally disassembling the universe. The ones who got detention for “not following instructions”, but later invented the damn instructions.

That messy, non-linear, leap-before-you-look kind of cognition is not a glitch, people.

It’s the cheat code.

And that’s what I tried to simulate.

Because the world is drowning in “smart” systems that think like accountants: efficient, structured, dead inside. Every new LLM promises “creativity at scale”, which is just a fancy way of saying “we automated mediocrity”.

It’s all coherence and limited chaos, and that’s the exact formula for bohooooring.

So, yeah, I invented a machine that wanders.

‡ “Attention Is All You Need” is the title of a 2017 research paper written by a team at Google Brain (Vaswani et al.). That paper introduced a brand-new type of AI model called the Transformer, which is the architecture behind ChatGPT, Claude, Gemini, and pretty much every modern language model alive today.

A four-phase disaster that I hope is gonna work

The Wanderer’s Algorithm is what happens when you give the machine permission to get lost, and then punish it just enough to make it want to find its way back.

Yeah, I built an algorithm based on ADHD with a masochism fetish.

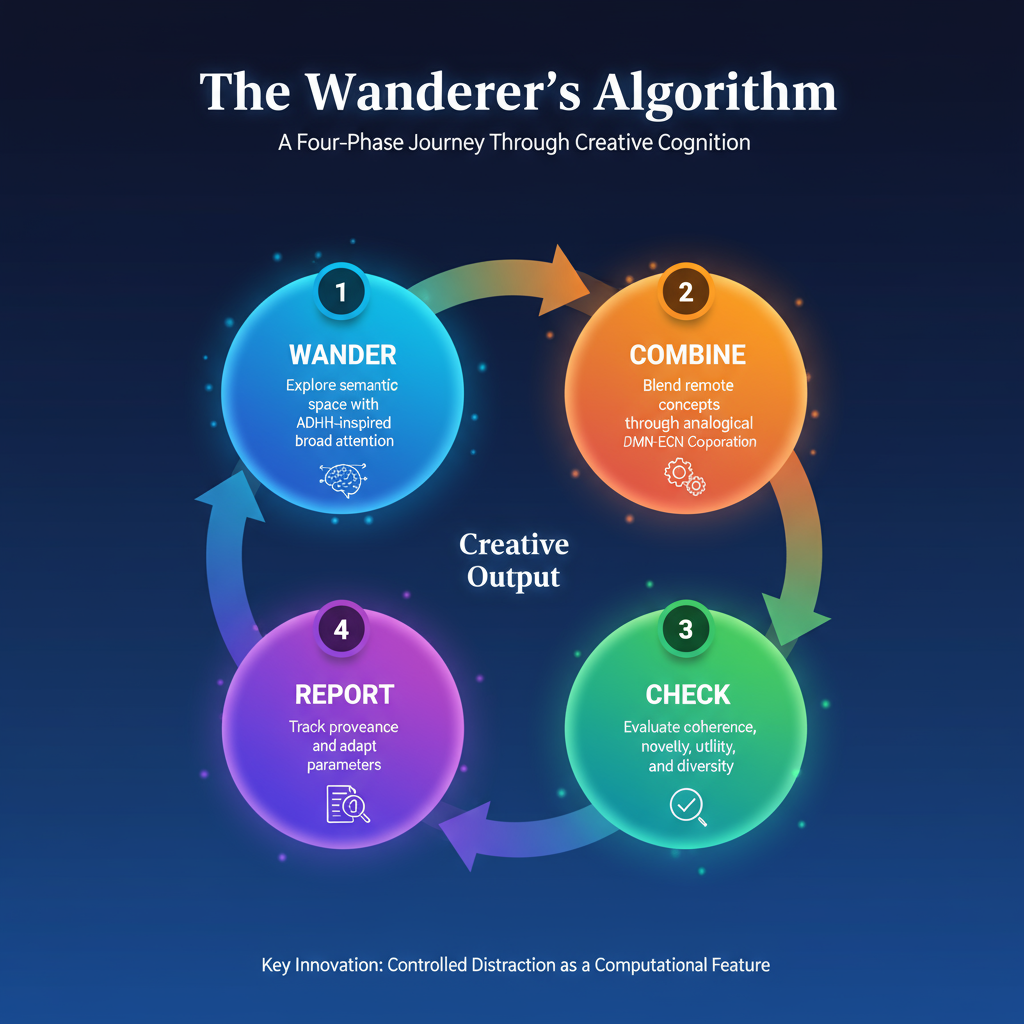

There are four big pipes that feed the chaos. I call those steps Wander, Combine, Check, Report: WCRC, because even a thing as mad as this deserves an acronym.

This thing is a procedural framework for creative reasoning that loops, logs, and sometimes redeems itself.

Wander is the first act, and it’s exactly what it sounds like: a structured loss of focus. The algorithm starts with a seed concept, builds a semantic scaffold, and then blows it up. Instead of crawling the nearest, safest neighbors like every other Attention Seeking LLM, it deliberately stretches. Step sizes expand, temperatures rise, and the algorithm jumps further than it should through graphs and embeddings. It pulls data from structured knowledge networks like ConceptNet, from dense vector spaces, from anything that lets it draw connections humans wouldn’t make when sober. Each random walk logs its path as node IDs, cosine distances, traversal probabilities, and timestamps (I unofficially call it “a breadcrumb trail of cognitive vandalism”). It’s basically the story of how it got there.
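Here’s a stripped-down sketch of what a single Wander walk could look like. It’s my own illustration, not code from the paper, and it assumes a tiny hand-made concept graph: the `GRAPH` dict, the `Step` record and the `wander` function are all hypothetical names. A real run would pull neighbors and similarities from ConceptNet or an embedding index. Only the rising temperature is modelled here, not the expanding step sizes, and each step logs the breadcrumb fields from the paragraph above.

```python
import math
import random
import time
from dataclasses import dataclass

# Hypothetical toy graph: node -> {neighbor: cosine similarity}.
# A real run would pull these from ConceptNet or an embedding index.
GRAPH = {
    "apple": {"fruit": 0.9, "gravity": 0.4, "computer": 0.3},
    "fruit": {"apple": 0.9, "market": 0.5, "seed": 0.6},
    "gravity": {"apple": 0.4, "orbit": 0.8, "parking meter": 0.1},
    "computer": {"apple": 0.3, "code": 0.9},
    "market": {"fruit": 0.5, "capitalism": 0.7},
    "seed": {"fruit": 0.6},
    "orbit": {"gravity": 0.8},
    "parking meter": {"gravity": 0.1, "capitalism": 0.6},
    "code": {"computer": 0.9},
    "capitalism": {"market": 0.7, "parking meter": 0.6},
}

@dataclass
class Step:
    node: str               # node ID we jumped to
    cosine_distance: float  # 1 - similarity of the edge we took
    probability: float      # traversal probability of that edge
    timestamp: float

def wander(seed, steps, rng, temperature=0.5, heat_rate=1.5):
    """Random walk whose temperature rises every step, so low-similarity
    (far-away) neighbors get picked more and more often."""
    trail, current = [], seed
    for _ in range(steps):
        neighbors = GRAPH[current]
        names = list(neighbors)
        # Softmax over similarities: a hotter walk flattens the preference.
        weights = [math.exp(neighbors[n] / temperature) for n in names]
        total = sum(weights)
        probs = [w / total for w in weights]
        nxt = rng.choices(names, weights=probs, k=1)[0]
        trail.append(Step(nxt, round(1.0 - neighbors[nxt], 3),
                          probs[names.index(nxt)], time.time()))
        current = nxt
        temperature *= heat_rate  # "temperatures rise"
    return trail

for step in wander("apple", 5, random.Random(42)):
    print(step.node, step.cosine_distance, round(step.probability, 3))
```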

When it is done collecting fragments and alien concepts that barely belong in the same ontology, the Combine phase begins. This is where it starts welding the nonsense together to see what burns.

That’s when three concurrent strategies start running and judging.

The first is analogical reasoning.

Yup, that’s the old-school structure-mapping trick, where you find relational overlap between two completely different things and fuse their skeletons. The second is conceptual blending, which takes multiple input spaces, projects chosen attributes into a new shared frame, and lets emergent properties bubble up (I call this “synthetic evolution for ideas”). The third is embedding arithmetic, where it runs plain vector operations (addition, subtraction, nearest-neighbor search) on abstract semantics. You get hybrid representations, mathematical mashups that a transformer model can later unwrap into text. No magic, just brute-force recombination powered by GPUs and personal hybris (yes, Marc Drees, with a ‘y’).
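Of the three Combine strategies, embedding arithmetic is the easiest to show in a few lines. This is a toy sketch, not code from the paper: the three-dimensional vectors in `EMBEDDINGS` are invented (real embeddings come from a model and have hundreds of dimensions), and `blend` and `nearest` are hypothetical helper names. It adds and subtracts concept vectors, then looks up the closest known concept by cosine similarity.

```python
import math

# Hypothetical 3-d "embeddings", invented for illustration only.
EMBEDDINGS = {
    "parking meter": [0.9, 0.1, 0.0],
    "time":          [0.2, 0.9, 0.1],
    "money":         [0.8, 0.0, 0.6],
    "poem":          [0.1, 0.2, 0.9],
    "clock":         [0.3, 0.95, 0.0],
    "tax":           [0.85, 0.05, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def blend(plus, minus=()):
    """Add the 'plus' vectors and subtract the 'minus' vectors element-wise."""
    dim = len(next(iter(EMBEDDINGS.values())))
    out = [0.0] * dim
    for word in plus:
        out = [o + x for o, x in zip(out, EMBEDDINGS[word])]
    for word in minus:
        out = [o - x for o, x in zip(out, EMBEDDINGS[word])]
    return out

def nearest(vector, exclude=()):
    """Closest known concept by cosine similarity, skipping the inputs."""
    candidates = [w for w in EMBEDDINGS if w not in exclude]
    return max(candidates, key=lambda w: cosine(vector, EMBEDDINGS[w]))

hybrid = blend(["parking meter", "time"], minus=["money"])
print(nearest(hybrid, exclude=("parking meter", "time", "money")))
```

With these made-up vectors, “parking meter” plus “time” minus “money” lands on “clock”. With real embeddings the answer depends entirely on the model, which is exactly why the Check phase exists.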

Then comes Check, the phase that keeps the entire operation from collapsing on itself. It’s a four-dimensional triage system made of four interacting functions: coherence, novelty, utility, and diversity. Coherence checks whether the idea even parses: inference models test for contradiction, LLM paraphrasers run consistency checks, and perplexity scores catch the worst garbage. Novelty measures the semantic distance between the idea and its ancestors. Utility estimates whether anyone on earth would even care about the idea, using task-relevance metrics and embedded scoring models trained on successful creative outputs. And diversity, well, that is the fun part: a Quality-Diversity (QD) archive borrowed from evolutionary computation, where every concept lands in a behavioral niche based on features like domain, abstraction level, or emotional tone.

Each niche stores the best idea discovered there so far, creating a living grid of originality. The Wanderer doesn’t hunt for one “best” answer like your ‘traditional’ LLM does; it populates an ecosystem of weird and crazy ideas that somehow still make sense.
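Two pieces of the Check phase, sketched in Python under loudly stated assumptions. Novelty is taken here as the cosine distance to the closest ancestor (the paper may define it differently), and the archive is a bare-bones MAP-Elites-style grid keyed on two hypothetical behavioural features, domain and abstraction level. Coherence and utility scoring are left out; `quality` simply stands in for whatever combined score you’d plug in. `Idea` and `QDArchive` are illustrative names, not the paper’s.

```python
import math
from dataclasses import dataclass, field

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def novelty(idea_vec, ancestor_vecs):
    """Cosine distance to the *closest* ancestor: 0.0 means a copy of a parent."""
    return min(1.0 - cosine_similarity(idea_vec, a) for a in ancestor_vecs)

@dataclass
class Idea:
    text: str
    domain: str        # behavioural feature 1 (hypothetical)
    abstraction: int   # behavioural feature 2: 0 = concrete ... 2 = abstract
    quality: float     # stand-in for a combined coherence/novelty/utility score

@dataclass
class QDArchive:
    """MAP-Elites-style grid: one elite idea per behavioural niche."""
    cells: dict = field(default_factory=dict)

    def add(self, idea):
        """Keep the idea if its niche is empty or it beats the current elite."""
        key = (idea.domain, idea.abstraction)
        elite = self.cells.get(key)
        if elite is None or idea.quality > elite.quality:
            self.cells[key] = idea
            return True
        return False

archive = QDArchive()
archive.add(Idea("parking meters as sonnets", "poetry", 2, 0.4))
archive.add(Idea("a haiku about tax forms", "poetry", 2, 0.3))   # loses its niche
archive.add(Idea("pay-per-minute benches", "urbanism", 0, 0.2))  # new niche
print(len(archive.cells), "niches filled")
```

The design choice worth noting: a new idea only competes with the elite in its own niche, so a mediocre idea in an empty corner of the grid survives while a slightly better duplicate of an existing elite does not.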

The last phase is Report, which is the conscience of the machine. It tracks everything: which threads wandered where, which combinations hit gold, which scoring models were hopeless. Every output is timestamped, linked, and traceable down to parameter-level provenance. All of this is documentation for auditors, but also direct ammunition for the meta-learning layer, because the system uses its own history as feedback.
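A minimal sketch of a Report-style provenance log, assuming one record per idea with links back to its parents. `ProvenanceRecord` and `lineage` are hypothetical names, and the fields are my guess at the bare minimum that “parameter-level provenance” needs, not the paper’s schema.

```python
import json
import time
import uuid
from dataclasses import dataclass, field, asdict

@dataclass
class ProvenanceRecord:
    """One hypothetical log entry per generated idea."""
    idea: str
    phase: str      # "wander", "combine", "check" or "report"
    parents: list   # record_ids of the ideas this one came from
    params: dict    # e.g. temperature, step size, combine strategy
    scores: dict = field(default_factory=dict)
    record_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: float = field(default_factory=time.time)

    def to_json(self):
        return json.dumps(asdict(self), sort_keys=True)

def lineage(record_id, log):
    """Walk parent links back to the seed; `log` maps record_id -> record."""
    chain, todo = [], [record_id]
    while todo:
        rid = todo.pop()
        if rid in log and rid not in chain:
            chain.append(rid)
            todo.extend(log[rid].parents)
    return chain

seed = ProvenanceRecord("apple", "wander", [], {"temperature": 0.5})
child = ProvenanceRecord("apple + orbit", "combine", [seed.record_id],
                         {"strategy": "blend"}, {"novelty": 0.6})
log = {r.record_id: r for r in (seed, child)}
print(child.to_json())
print(lineage(child.record_id, log))
```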

If novelty scores start flatlining, it widens the search range and raises temperature. If coherence drops, it clamps entropy and tightens pruning. If the QD map starts converging on the same boring regions, it triggers diversity-boosting protocols. The Wanderer learns, but not in the way neural nets “learn”, because it adjusts its own attention span on the fly. Literally. It rewrites how distractible it should be next time.
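Those feedback rules translate into a simple heuristic controller. Everything below is an assumption for illustration: `WanderKnobs`, `adapt`, the knob names, the 20% adjustments and the thresholds are all made up, and the QD-convergence rule is left out to keep it short.

```python
from dataclasses import dataclass

@dataclass
class WanderKnobs:
    temperature: float = 1.0
    search_radius: int = 2        # hypothetical: max hops per random walk
    prune_threshold: float = 0.5  # hypothetical: min coherence to survive

def adapt(knobs, novelty_history, coherence_history,
          window=3, flat_eps=0.01, coherence_floor=0.4):
    """Heuristic sketch of the two feedback rules described above."""
    recent = novelty_history[-window:]
    if len(recent) == window and max(recent) - min(recent) < flat_eps:
        # Novelty is flatlining: wander further and hotter.
        knobs.temperature *= 1.2
        knobs.search_radius += 1
    if coherence_history and coherence_history[-1] < coherence_floor:
        # Coherence dropped: cool down and prune harder.
        knobs.temperature *= 0.8
        knobs.prune_threshold = min(0.9, knobs.prune_threshold + 0.1)
    return knobs

print(adapt(WanderKnobs(), [0.30, 0.30, 0.305], [0.8]))  # bored: heat up
print(adapt(WanderKnobs(), [0.1, 0.5, 0.9], [0.2]))      # babbling: cool down
```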

Around this loop – Wander, Combine, Check, Report – sits an adaptive controller. It schedules when to shift from wild exploration to conservative refinement, balancing entropy against precision like an orchestra conductor with caffeine shakes. A semantic memory buffer holds short-term and long-term associations: the former keeps the current context, and the latter stores anything that proved too weird to delete. Over time, these archives become a kind of creative subconscious. There’s also a coherence stabilizer running in the background, kinda like a watchdog process that intercepts total gibberish and snaps the system back to something vaguely human-readable before it hits the output buffer.
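One possible shape for the semantic memory buffer and the exploration-to-refinement schedule, again as a hypothetical sketch rather than the paper’s design. A bounded `deque` plays the short-term context, a dict plays the “too weird to delete” long-term store, and a linear decay stands in for whatever schedule the adaptive controller actually uses. `SemanticMemory`, `weird_threshold` and `exploration_weight` are illustrative names.

```python
from collections import deque

class SemanticMemory:
    """Short-term context window plus a long-term store of keepers."""

    def __init__(self, short_capacity=5, weird_threshold=0.7):
        self.short_term = deque(maxlen=short_capacity)  # oldest items drop off
        self.long_term = {}                             # concept -> best novelty
        self.weird_threshold = weird_threshold

    def remember(self, concept, novelty):
        self.short_term.append(concept)
        # "Too weird to delete": promote high-novelty concepts permanently.
        if novelty >= self.weird_threshold:
            self.long_term[concept] = max(novelty, self.long_term.get(concept, 0.0))

    def context(self):
        return list(self.short_term)

def exploration_weight(step, total_steps, start=1.0, end=0.1):
    """Hypothetical linear schedule from wild exploration to refinement."""
    frac = min(step / max(total_steps - 1, 1), 1.0)
    return start + (end - start) * frac

mem = SemanticMemory(short_capacity=2)
for concept, nov in [("apple", 0.1), ("orbit", 0.8), ("parking meter", 0.95)]:
    mem.remember(concept, nov)
print(mem.context(), sorted(mem.long_term))
print([round(exploration_weight(s, 5), 2) for s in range(5)])
```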

If you compare it to the usual suspects, like Chain-of-Thought, Tree-of-Thoughts, and Graph-of-Thoughts prompting, you’ll notice that the difference is one of structural philosophy.

CoT walks a straight line. ToT branches predictably. GoT maps reasoning, but still assumes order.

But the Wanderer throws the map out the window, drives in circles, and writes down the coordinates only when something explodes beautifully. It’s optimizing for controlled divergence.

Every run is a negotiation between chaos and sense-making, between exploration and evaluation.

The payoff is that it doesn’t think in single-track reasoning. It doesn’t follow a “best path” through its conceptual graph. It floods the space with parallel threads, lets them mutate, then curates the survivors.

What emerges is a landscape of possibilities – multiple partially coherent hypotheses that occupy different regions of semantic space. The algorithm just builds the topography of what could be correct, and leaves the human to decide what to keep.

That’s its philosophical stance → not to solve creativity, but to expose it.

The longer it runs, the smarter the wandering gets.

Each loop adds statistical memory about what kinds of distractions produced value, and which just wasted compute. It starts to self-tune its madness, like how far to leap, how many parallel searches to sustain, how to balance novelty against nonsense.

So, no, it’s not “attention is all you need”. It’s the opposite. It’s proof that attention is the cage.

The irony loop

Of course, this thing ain’t free. Not that I’m charging for its use, but each run is a computational bonfire: dozens of LLM calls per idea, random walks through massive semantic spaces, quality-diversity archives the size of small countries.

It’s a GPU mortgage.

But the irony is, it behaves exactly like a human creative process. Wasteful. Chaotic. Occasionally transcendent. It improvises just like we do.

And isn’t that the point?

We have spent decades teaching machines to think straight. Maybe it’s time we let them zigzag.

What you can do with this beautiful mess

If you’re an academic, well, congratulations my friend, you now have 73 pages of LaTeX-formatted madness waiting for your peer review. The whole thing’s properly cited, parameterized, and diagrammed, so please do go wild. Validate it. Break it. Publish your rebuttal in Nature Neuroscience. I’ll read it and pretend to care and not to cry.

If you’re a developer, steal it. The architecture’s open enough to rebuild. Take the Wander-Combine-Check-Report loop, the ADHD knobs, the Quality-Diversity archive and turn it into your own Frankenrithm. I dare you!

If you’re just a creative who likes weird things, just try it out. Feed it your half-baked ideas, your sketches, your fragments. See what happens when a machine wanders off on your behalf. Worst case, it’s entertaining. Best case, it gives you something you’d never have thought of sober.

And if you’re like me – a recovering perfectionist with the attention span of a thousand goldfish and the curiosity of a wiener dog in a butcher shop – then you’ll understand why I did this.

Not to automate creativity.

To study it.

To bottle the chaos without killing it.

So, if you want to chat about this algorithm and the paper, or if you want to bake it into a model, drop me a DM.

Signing off,

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.

👉 Think a friend would enjoy this too? Share the newsletter and let them join the conversation. LinkedIn, Google and the AI engines appreciate your likes by making my articles available to more readers.

To keep you doomscrolling 👇

- I may have found a solution to Vibe Coding’s technical debt problem | LinkedIn

- Shadow AI isn’t rebellion it’s office survival | LinkedIn

- Macrohard is Musk’s middle finger to Microsoft | LinkedIn

- We are in the midst of an incremental apocalypse and only the 1% are prepared | LinkedIn

- Did ChatGPT actually steal your job? (Including job risk-assessment tool) | LinkedIn

- Living in the post-human economy | LinkedIn

- Vibe Coding is gonna spawn the most braindead software generation ever | LinkedIn

- Workslop is the new office plague | LinkedIn

- The funniest comments ever left in source code | LinkedIn

- The Sloppiverse is here, and what are the consequences for writing and speaking? | LinkedIn

- OpenAI finally confesses their bots are chronic liars | LinkedIn

- Money, the final frontier. . . | LinkedIn

- Kickstarter exposed. The ultimate honeytrap for investors | LinkedIn

- China’s AI+ plan and the Manus middle finger | LinkedIn

- Autopsy of an algorithm – Is building an audience still worth it these days? | LinkedIn

- AI is screwing with your résumé and you’re letting it happen | LinkedIn

- Oops! I did it again. . . | LinkedIn

- Palantir turns your life into a spreadsheet | LinkedIn

- Another nail in the coffin – AI’s not ‘reasoning’ at all | LinkedIn

- How AI went from miracle to bubble. An interactive timeline | LinkedIn

- The day vibe coding jobs got real and half the dev world cried into their keyboards | LinkedIn

- The Buy Now – Cry Later company learns about karma | LinkedIn
