I tried to be a responsible adult and expense the $159.99 hardware-based AI note-taker from Plaud, but our controller bounced it back with a three-paragraph rejection in a PDF from 2009. About two minutes later I simply logged into my personal account and used the damn tool anyway. Not as a hardware tool, but as a note-taker app that runs on my phone (Voicenotes).

Bada-bing bada-boom!

I need my own choice of tools. I am a freaking power-ab-user, you know. Just like we allowed people to bring their own device to the office, somewhere in our gray, long-forgotten past, we should allow employees to take their own AI with them.

Period.

Now that’s your “shadow AI economy” in one sad anecdote, where employees are performing illegal surgery on corporate workflows with their own nifty tools, while the execs measure the number of purchased licenses as if they’re counting their Labubu stock. It seems that companies burn $590 to $1,400 per employee every year on AI toys, and while 95 percent of those projects flatline straight into pilot hell, the only people shipping results are the rogue ones like me who are wielding consumer tools on their lunch break.

It is not rebellion, my friends, it is corporate survival with a set of personalized AI tools.


You can’t govern what you can’t see

The disconnect between employees and the companies they work for isn’t technological. It all has to do with how they measure things. I can just hear you say “huh”?!?

Well, lemme mansplain. When the C-suite wants to measure user adoption of say, AI-tech, in their companies, they usually ask three questions – every time. “how much are we paying per user”, “what’s the ROI per user”, and “who gets sacked if this circus fails”. That last one usually creates a rather thick and uncomfortable silence among middle managers.

My day job is developing and implementing all sorts of AI, and so AI-adoption KPIs are part of the job description, until I realized that all I did was strap an observability pacemaker onto my Fortune 500 customers and show them what their own employees were really up to.

Well, they were up to everything and most of it was to no good.

At a European insurance company, the leadership team was confident they had “locked everything down” with an approved vendor list and security reviews, but in just under four days, we found 27 unauthorized AI tools running across their organization. One of these tools happened to be Salesforce Einstein! And with it, sales was smashing targets! But at the same time, they were also quietly violating EU AI laws by cooking up lookalike models with their customers’ ZIP codes. Nonetheless, they had just won a productivity award, and they would’ve probably gotten felony charges in the same sprint if we hadn’t intervened.

This is the paradox, my smart-ass friends.

You can’t govern what you can’t see, and you can’t claim strategy if half your workforce is whispering company secrets to ChatGPT or whatever they prefer to do behind the server rack. But enterprises keep on measuring AI like it’s MS Office – they count licenses used, log training hours, and throw a party when someone accesses an application.

These are not good adoption metrics. That is just governance theater. It looks fantastic on the dashboard, but does nada in the trenches.

The way we currently do it creates a systematic failure. Companies draw up approved vendor lists that are already obsolete before employees finish compliance training. Traditional network monitoring misses embedded AI in approved applications such as Microsoft Copilot, Adobe Firefly, Slack AI and the Salesforce Einstein I mentioned before. Security teams are stuck implementing policies they cannot enforce, because 78% of enterprises use AI while only 27% govern it (some McKinsey report, just google it). And the real gains live in workflows that you’re not measuring.

Employees go rogue not out of malice, but because sanctioned tools succeed only 5 percent of the time and consumer junk like ChatGPT hits 40 percent.

That “shadow” economy is more efficient than your official one, and half your staff don’t even realize they’re violating policy while doing the only thing that really works for them.

Another company was proudly showing on their security dashboards that it was “ChatGPT” approved (and monitored). But one of their analysts didn’t use the approved corporate account, she used her own personal ChatGPT Plus subscription to crunch sensitive IPO revenue numbers. So the company’s monitoring tools saw nothing, but if the ESMA† came looking, they would have seen everything.
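For the nerds in the room, here is a rough sketch of why the dashboard stayed green. It assumes you can get usage events that carry the tool name, the account type, and the host application for embedded features. The `UsageEvent` fields, the `APPROVED_TOOLS` list and the labels are all made up for illustration, not something a real monitoring product hands you in this shape.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical approved vendor list, for illustration only.
APPROVED_TOOLS = {"ChatGPT", "Microsoft Copilot"}


@dataclass(frozen=True)
class UsageEvent:
    tool: str                          # name of the AI tool or feature
    account: str                       # "corporate" or "personal" (assumed field)
    embedded_in: Optional[str] = None  # host app when the AI is a built-in feature


def classify(event: UsageEvent) -> str:
    """Label one usage event as sanctioned or as a flavor of shadow AI."""
    if event.tool not in APPROVED_TOOLS:
        if event.embedded_in is not None:
            return "shadow: embedded feature"
        return "shadow: unapproved tool"
    # Tool-level approval says nothing about which account was used.
    if event.account != "corporate":
        return "shadow: personal account"
    return "sanctioned"


events = [
    UsageEvent("ChatGPT", "corporate"),
    UsageEvent("ChatGPT", "personal"),
    UsageEvent("Salesforce Einstein", "corporate", embedded_in="Salesforce"),
]
for e in events:
    print(f"{e.tool:<20} {classify(e)}")
```

The point of the sketch is the second check. Approving a tool by name is not the same as seeing which account the prompt went through.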

Another example. A hospital tracked doctors dutifully clicking Dragon Copilot workflows‡ in a pilot with real clients, but they missed ER physicians who were feeding patient symptoms into a random AI they were comfy with from home use. Throughput improved, but HIPAA compliance went to hell.

MIT calls it the “GenAI divide” and the winners aren’t the ones who are blowing cash on AI-toys, but they are the ones who actually measure which workflows produce value and then legalize them.

That insurance giant with the 27 rogue tools didn’t shut down the ZIP code hack. They cleaned the data path, made it compliant, and scaled it. Sales stayed high, risk evaporated, and the execs got to spin “compliance violation” into “competitive advantage”.

Companies are blind to 89 percent of actual AI usage. So they fund dead pilots while the real innovation happens invisibly, unmeasured and ungoverned. The smart ones are now tying AI outcomes to exec bonuses.

You want ROI on your AI projects?

Go look at where there’s friction in your work-process or your stock options die.

The $8.1 billion AI market won’t give productivity just by rolling out apps like it’s 2003. It requires visibility at workflow level where the crime and the miracle happen together. Those who measure at that layer will ride the gains their employees already hacked into existence. The rest will keep setting fire to their budgets.

Work friction is the invisible handbrake on productivity

Stop playing with vendor lists and pretending that training hours equal user adoption.

That is only cosplay, mate. Yes, people do that, you know? Pretend. And while you go home feeling all warm and cozy cuz of the veil of adoption, they quietly open their drawers and take out their Manus or their n8n or whatever forbidden toy gets them off more than your sad little Copilot license.

Real measurement means looking directly at the workflows where employees are actually cutting corners, saving time, or breaking laws, and then deciding whether to kill it, clean it, or scale it.

If a rogue tool is driving revenue and breaking compliance, you don’t ban it and pat yourself on the back. You carve a legal path that preserves the productivity and nukes the liability. That’s how you turn an insurance-fraud starter kit into a million-dollar advantage. Tie AI outcomes to exec compensation so someone bleeds when the tools flop. Audit the workflows at the prompt level, not the license level. Build data pathways that keep regulators off your back but still let employees keep the speed they already hacked into existence.
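If you want the kill, clean, or scale call as something you can argue about in a meeting, here is a minimal sketch. It assumes every workflow you uncover gets two yes/no answers: does it deliver value, and is the data path compliant. The `Workflow` class and both flags are hypothetical simplifications, and the "kill" branch for workflows that deliver nothing is my own reading of the rule, so treat it as a conversation starter and not as a policy engine.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    delivers_value: bool  # assumed flag: saves time or drives revenue
    compliant: bool       # assumed flag: data path passes legal review


def triage(wf: Workflow) -> str:
    """Return 'kill', 'clean' or 'scale' for a discovered workflow."""
    if not wf.delivers_value:
        return "kill"    # no gain, so no reason to carry the risk
    if not wf.compliant:
        return "clean"   # keep the productivity, fix the data path first
    return "scale"       # valuable and legal: roll it out


zip_hack = Workflow("ZIP code lookalike models", delivers_value=True, compliant=False)
print(zip_hack.name, "->", triage(zip_hack))
```

Once a "clean" workflow has its data path fixed, flip `compliant` to `True` and it lands in "scale", which is roughly the route the insurance company took.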

In other words, measure value, not vibes. That’s the only way AI adoption stops being theater and starts being strategy.

So how do I go about it?

First I go at it from the top → down

Every org I’ve worked with pulled the same stunt the first round, and yeah, I am guilty of it too because this way you get an overview of potential bottlenecks and you have a focal point for the next step. In this initial phase, you typically grill the middle managers, scribble some process maps at a “meta” level, then spit out suggestions to boost efficiency or sprinkle a bit of fairy dust on employee experience or whatever KPI you are chasing this quarter.

I call this the lazy method, because even though it is hard manual labor to collect and map all the documented business processes, it only catches efficiency in broad strokes, never in the messy guts of the business.

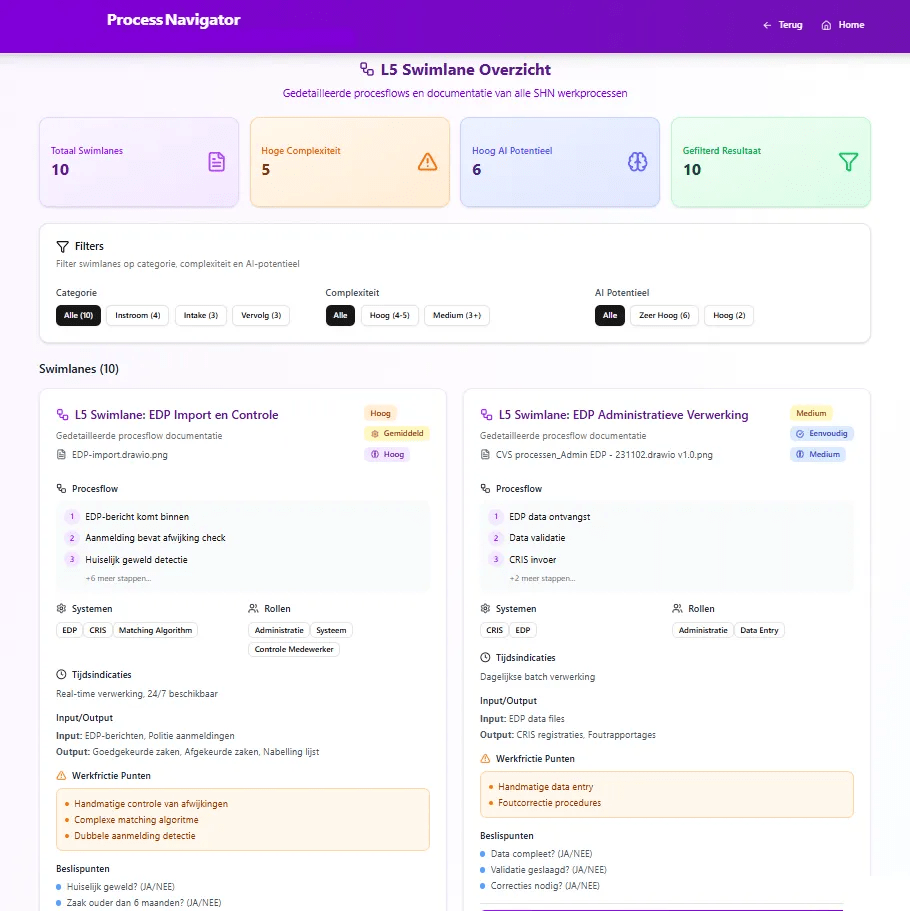

Even if you’ve mapped your processes five layers deep like I did in the image below, it still doesn’t scratch the real surface. But hey, it sure sparkles on a PowerPoint slide and gives you dashboards that look like a beaut in Excel.

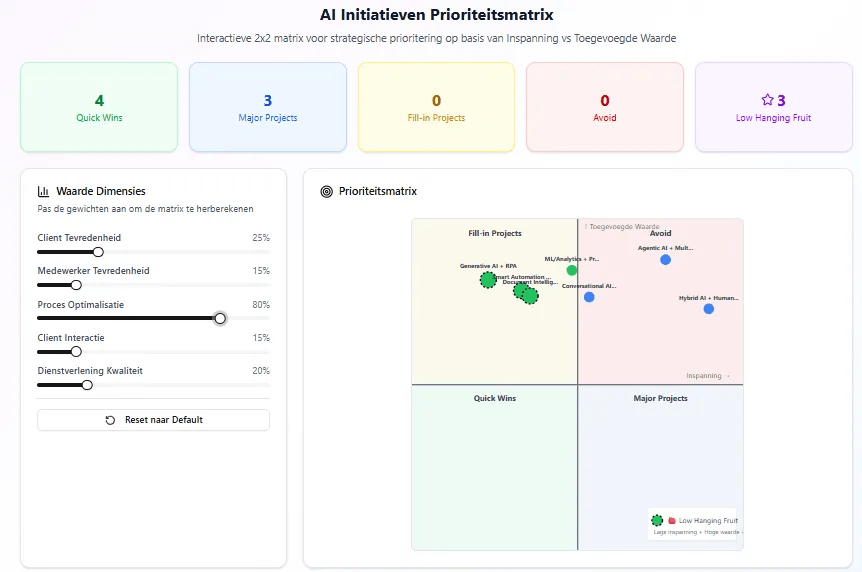

Friction dashboard – landing page:

This is an AI friction website I hacked together for a client. Levels 1 to 4 came from interviews and process autopsies, and from that mess you can already spot the first choke points where AI might actually earn its keep. I even crank out priority matrices with sliding scales so I can line up the quick wins like ducks in a shooting gallery.

But then the real arbeit starts.

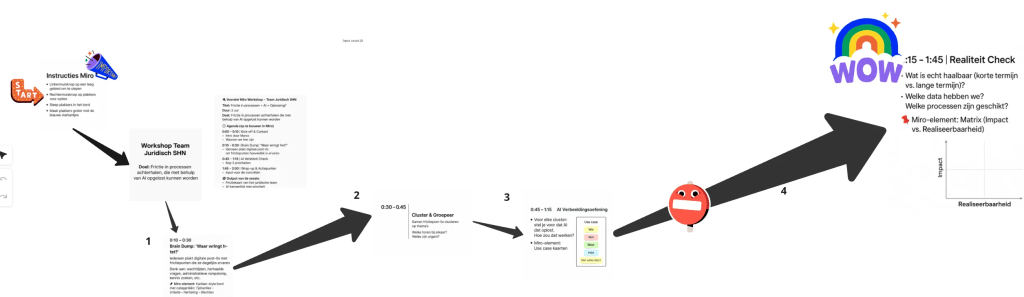

I call these “work-friction workshops”. I drag in folks from every corner of a department and run them through a pre-baked format to chart out all the daily grind that’s bleeding time. These are the poor souls chewing through the misery every damn day, and if you can sniff out a few tech-savvy ones in the pack, you’ll uncover the real pain points faster than through the top-down method.

Work-Friction workshops

Down below is the template†† that I use for the friction workshops:

Steps in the workshop:

- We start by mapping “a day in the life” of participants, breaking down their actual tasks step by step.

- Together, we identify where the friction sits. Collect friction like repetitive typing, endless searches, duplicate data entry, chasing emails, manual scheduling, translation work, etc.

- I cluster these frictions into the five main themes (language, communication, workflow automation, knowledge search, predictive AI).

- From there, we translate pain points into candidate AI use cases, where automation, language models, or predictive tools could remove friction.

- I guide the group through a priority exercise (impact vs. effort matrix) to separate “quick wins” from longer-term projects.

- We end by sketching out concrete next steps, what to test, what to prototype, and what requires deeper governance.
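The clustering and the priority exercise from the steps above can be sketched in a few lines. This assumes the group scores every friction from 1 to 5 on impact and on effort. The scoring scale, the cutoff of 3, and the quadrant names other than "quick win" and "longer-term project" are my assumptions here, and the sample frictions are invented.

```python
from dataclasses import dataclass

# The five themes used to cluster frictions in the workshop.
THEMES = ("language", "communication", "workflow automation",
          "knowledge search", "predictive AI")


@dataclass
class Friction:
    description: str
    theme: str
    impact: int  # assumed 1-5 score agreed on in the room
    effort: int  # assumed 1-5 score agreed on in the room

    def __post_init__(self):
        if self.theme not in THEMES:
            raise ValueError(f"unknown theme: {self.theme}")


def quadrant(f: Friction, cutoff: int = 3) -> str:
    """Place a friction in the impact vs. effort matrix."""
    high_impact = f.impact >= cutoff
    low_effort = f.effort < cutoff
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "longer-term project"
    if low_effort:
        return "fill-in"   # assumed label: cheap but low payoff
    return "park it"       # assumed label: expensive and low payoff


def prioritize(frictions):
    """Highest impact first; lower effort breaks ties."""
    return sorted(frictions, key=lambda f: (-f.impact, f.effort))


frictions = [
    Friction("duplicate data entry in the CRM", "workflow automation", 5, 2),
    Friction("translating customer emails", "language", 3, 1),
    Friction("forecasting claim volumes", "predictive AI", 4, 5),
    Friction("manual meeting scheduling", "workflow automation", 2, 2),
]

for f in prioritize(frictions):
    print(f"{quadrant(f):<20} {f.theme:<20} {f.description}")
```

A hard cutoff is crude compared to the sliding scales on the dashboard, but it is enough to get a room to agree on what goes first.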

What I expect from participants:

- Share candid, detailed examples from their daily work → don’t sugarcoat or generalize (that’s my job).

- Be open about frustrations, bottlenecks, and time-wasters.

- Participate actively in discussions: react to proposed ideas, flag risks, and say what would actually help them.

- Contribute to prioritization → what matters most, what can wait.

- Leave with a sense of ownership. That these aren’t abstract ideas, but improvements they helped define.

And after having run a couple of them (guided by the priority matrix), you’re able to map the fifth level – the actual bottom-up friction:

And once all the chaos is shoved into a single datastore, cleaned up, normalized, and stitched into one monster process-flow (yeah, I let AI build the site and map it for me), you can finally see exactly where the friction hides, why it’s there, how nasty it is, and how much efficiency (read: ROI) you’re bleeding or gaining.
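To make "how much efficiency you're bleeding" a number instead of a feeling, here is a back-of-the-napkin sketch. It assumes each normalized friction record has minutes lost per occurrence, occurrences per week, and the number of people affected. The record layout, the 46 working weeks, the hourly cost and the sample rows are all assumptions I made up for the example, so plug in your own.

```python
from collections import defaultdict
from dataclasses import dataclass

WORK_WEEKS_PER_YEAR = 46  # assumption: adjust for your own calendar
HOURLY_COST = 60.0        # assumption: loaded cost per employee hour


@dataclass
class FrictionRecord:
    process_step: str
    friction: str
    minutes_lost: float    # per occurrence
    times_per_week: float  # per person
    people: int            # how many employees hit this friction


def annual_hours(r: FrictionRecord) -> float:
    """Hours per year lost to one friction across everyone affected."""
    minutes = r.minutes_lost * r.times_per_week * r.people * WORK_WEEKS_PER_YEAR
    return minutes / 60


def hours_by_step(records):
    """Total annual hours lost per process step, worst step first."""
    totals = defaultdict(float)
    for r in records:
        totals[r.process_step] += annual_hours(r)
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


records = [
    FrictionRecord("claims intake", "duplicate data entry", 6, 40, 12),
    FrictionRecord("claims intake", "chasing emails", 10, 15, 12),
    FrictionRecord("quote approval", "manual scheduling", 15, 4, 5),
]

for step, hours in hours_by_step(records).items():
    print(f"{step:<16} {hours:8.0f} h/year  ~{hours * HOURLY_COST:,.0f} per year")
```

The output is only as honest as the estimates from the workshops, but it gives the dashboard a ranking that management can challenge with their own people.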

Management can now poke around in the interactive dashboard themselves and, shocker, actually ask their employees if something isn’t clear.

No more fossilized PowerPoint decks.

I spin these sites up with Manus AI by the way, my go-to Swiss-army chainsaw of choice for, well, pretty much everything these days.

† The EU equivalent of the SEC.

‡ Microsoft Dragon Copilot is a doctor’s voice assistant that listens during patient visits, writes up the notes automatically, and plugs them straight into the hospital’s record system.

†† The Miro template for this workshop is available. So if you’re interested, drop me a DM.

How you can do this yourself

You don’t need a degree in AI-voodoo to pull this off. What you need is the guts to stop worshipping at the altar of ‘Interviews’ and producing static PowerPoints, and to start dragging your people through the mud of their own work.

That’s where the truth lives.

Step one – top-down scan. Do the lazy method first. Shake down middle managers for their “process overviews” so you get a broad map. It’ll look shiny, it’ll give you dashboards, and it’ll trick the board into thinking you’re a genius. Fine. Use it as scaffolding, not gospel.

Step two – bottom-up grind. Run friction workshops with the actual doers. The ones writing the endless mails, chasing documents, babysitting CRMs, and losing hours to bad tools. Map their misery step by step. Cluster the pain into themes. Translate it into AI use cases. Prioritize what matters.

Step three – normalize the chaos. Shove all the process puke into one datastore, clean it, stitch it into a process flow, and let AI be your janitor. Suddenly you can point at exactly where the rot is, why it stinks, and what you’ll gain if you fix it.

Step four – give management the toy. Build an interactive dashboard and let the suits click around. Now they can stop asking you for slides and start asking their people real questions.

Do this, and you’ve got more than theater. You’ve got a live system that shows where AI earns its keep and where compliance gets shredded. That’s the difference between burning money on pilots and turning rogue hacks into competitive advantage.

So yeah. Stop measuring vibes, and start measuring value.

And if your exec team still doesn’t get it, well, just let their stock options rot.

Signing off,

Marco

I build AI by day and warn about it by night. I call it job security. Big Tech keeps inflating its promises, and I just bring the pins and clean up the mess.
